Input

I have the following depth images of type uint16, obtained from an Intel RealSense L515 camera, which is specified to have an average depth accuracy of < 5 mm at 1 m.

Goal

I want to quantize the depth of the blocks in this image to get a discrete representation of the blocks in my region of interest (ROI), which consists of 23 x 11 block positions, for example:

P_x1_y1: z = 1 (one block), P_x2_y2: z = 2 (two blocks), and so on up to 5 blocks (as in the image center).
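One way to store this discrete representation is an 11 x 23 integer array holding the block count of each position. The minimal sketch below assumes a per-cell height above the table in millimetres is already available; the block height `BLOCK_HEIGHT_MM` (19 mm here) and the helper `heights_to_levels` are hypothetical placeholders. Each height is rounded to the nearest whole number of blocks and capped at 5.

```python
import numpy as np

# Hypothetical block height in millimetres; replace with the real value.
BLOCK_HEIGHT_MM = 19.0
MAX_BLOCKS = 5


def heights_to_levels(heights_mm, block_height_mm=BLOCK_HEIGHT_MM, max_blocks=MAX_BLOCKS):
    """Round each cell's height to the nearest whole number of blocks.

    heights_mm: array of shape (rows, cols) with the height of the top
    surface above the table in millimetres (0 for an empty position).
    Returns an integer array of the same shape with values 0..max_blocks.
    """
    levels = np.rint(np.asarray(heights_mm, dtype=float) / block_height_mm)
    return np.clip(levels, 0, max_blocks).astype(int)


# Example: a 2 x 3 corner of the 11 x 23 grid
heights = np.array([[0.0, 18.2, 39.5],
                    [57.9, 75.0, 96.1]])
print(heights_to_levels(heights))
```

Nearest-integer rounding tolerates an error of up to half a block height per cell, so it only has margin if half the block height is well above the camera's depth error.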

The RGB image of the ROI may clarify my aim (it is not used as an input):

What I have tried so far:

- Obtaining the ROI:

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
import numpy as np
import cv2
import matplotlib.pyplot as plt


def get_roi(d1, output_size=(736, 384), ratio=(0.77, 0.54), shift=(0, 80), verbose=False):
    """
    Function: get_roi, to find and resize the ROI.
    ---
    Parameters:
    @param: d1, nd array, depth image.
    @param: output_size, tuple, the output ROI size (width, height).
    @param: ratio, tuple, the ratio of the ROI to the detected zone.
    @param: shift, tuple, the shift in pixels to align the ROI.
    @param: verbose, bool, to visualize the result.
    ---
    @return: roi, nd array, ROI resized (None if no large contour is found).
    """
    d = d1.copy()
    # Separate the workspace from the background with Otsu's threshold
    th = cv2.threshold(d, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]
    th = th.astype(np.uint8)
    # [-2] selects the contour list in both OpenCV 3.x and 4.x
    contours = cv2.findContours(th, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]
    cnt_thresh = 10000
    fx, fy = ratio
    x_shift, y_shift = shift
    roi = None  # avoids an UnboundLocalError when no contour passes the threshold
    for cnt in contours:
        if cv2.contourArea(cnt) > cnt_thresh:
            x, y, w, h = cv2.boundingRect(cnt)
            # Centre of the bounding box, shifted to align the ROI
            cx = x + w // 2 + x_shift
            cy = y + h // 2 + y_shift
            # Scaled ROI size
            nw = int(fx * w)
            nh = int(fy * h)
            # cv2.rectangle(d1, (cx-nw//2, cy-nh//2), (cx+nw//2, cy+nh//2), color=0)
            d_roi = d1[cy - nh // 2:cy + nh // 2, cx - nw // 2:cx + nw // 2]
            # cv2.resize takes (width, height) and interpolates bilinearly by default
            roi = cv2.resize(d_roi, output_size)
    # Visualize results
    if verbose and roi is not None:
        plt.imshow(roi)
        plt.show()
    return roi
```
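In `get_roi`, if `cy - nh//2` or `cx - nw//2` becomes negative (for example because of `shift`), NumPy reads the negative start as an index counted from the end, so the slice can silently come back empty or wrong. Below is a minimal sketch of the same crop arithmetic with clamped limits; `crop_scaled_bbox` is a hypothetical helper, not an OpenCV function.

```python
import numpy as np


def crop_scaled_bbox(img, bbox, ratio=(0.77, 0.54), shift=(0, 80)):
    """Crop a scaled and shifted version of bbox, clamped to the image borders.

    bbox is (x, y, w, h), e.g. from cv2.boundingRect. The centre and size are
    computed as in get_roi; only the slice limits are clipped.
    """
    x, y, w, h = bbox
    fx, fy = ratio
    x_shift, y_shift = shift
    cx = x + w // 2 + x_shift
    cy = y + h // 2 + y_shift
    nw, nh = int(fx * w), int(fy * h)
    rows, cols = img.shape[:2]
    # Clip each limit to [0, size] so a negative start cannot wrap around
    y0, y1 = max(cy - nh // 2, 0), min(cy + nh // 2, rows)
    x0, x1 = max(cx - nw // 2, 0), min(cx + nw // 2, cols)
    if y1 <= y0 or x1 <= x0:
        raise ValueError("the shifted ROI lies entirely outside the image")
    return img[y0:y1, x0:x1]


img = np.arange(100).reshape(10, 10)
print(img[0:10, -3:7].shape)  # plain slicing: (10, 0), the start -3 is read as 7
print(crop_scaled_bbox(img, (0, 0, 10, 10), ratio=(1, 1), shift=(-3, 0)).shape)  # (10, 7)
```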

- Finding the mode (most frequent) non-zero value of each cell in the grid:

```python
def mode(arr):
    """
    Function: mode, to find the most frequent non-zero value of an array.
    ---
    Parameters:
    @param: arr, nd array, any.
    ---
    @return: the mode value (int/float/etc.) of the non-zero entries,
             or 0 if the array contains only zeros.
    """
    vals, counts = np.unique(arr, return_counts=True)
    # Ignore zeros (treated as missing / invalid values)
    nonzero = vals != 0
    vals, counts = vals[nonzero], counts[nonzero]
    if vals.size == 0:
        return 0  # np.argmax would raise on an empty array
    return vals[np.argmax(counts)]
```
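The same non-zero mode can be applied to the whole grid at once, returning an (11, 23) array of per-cell values instead of painting them into an image. This sketch assumes a non-negative integer ROI (e.g. uint16) and ignores the alignment `shift`; leftover pixels from the integer division are cropped. `nonzero_mode`, `grid_cells` and `cell_modes` are hypothetical names, and `np.bincount` (used here in place of `np.unique`) counts every integer value in a cell.

```python
import numpy as np


def nonzero_mode(values):
    """Most frequent non-zero value of a 1-D non-negative integer array (0 if none)."""
    values = values[values > 0]  # zeros are ignored
    if values.size == 0:
        return 0
    return int(np.bincount(values).argmax())  # ties go to the smaller value


def grid_cells(roi, grid_size=(23, 11)):
    """Split a 2-D array into cells: returns shape (rows, cols, cell_h * cell_w).

    grid_size is (horizontal, vertical), as in gridWorkspace. Leftover pixels
    from the integer division are cropped from the bottom and right edges.
    """
    cols, rows = grid_size
    cell_h = roi.shape[0] // rows
    cell_w = roi.shape[1] // cols
    cells = roi[:rows * cell_h, :cols * cell_w]
    # (rows, cell_h, cols, cell_w) -> (rows, cols, cell_h, cell_w) -> flatten each cell
    cells = cells.reshape(rows, cell_h, cols, cell_w).transpose(0, 2, 1, 3)
    return cells.reshape(rows, cols, cell_h * cell_w)


def cell_modes(roi, grid_size=(23, 11)):
    """Non-zero mode of every cell, as an integer array of shape (rows, cols)."""
    cells = grid_cells(roi, grid_size)
    rows, cols = cells.shape[:2]
    out = np.zeros((rows, cols), dtype=int)
    for r in range(rows):
        for c in range(cols):
            out[r, c] = nonzero_mode(cells[r, c])
    return out
```

The returned array can then be quantized cell by cell, which gives the P_x_y representation directly rather than an image of it.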

- Quantizing the values of each cell:

```python
def mapVal(val):
    """
    Function: mapVal, to map depth values to discrete levels.
    ---
    Parameters:
    @param: val, int, any.
    ---
    @return: int val, one of 0, 50, 100, 150, 200, 250
             (val itself is returned only if it is not comparable, e.g. NaN).
    """
    # Each test only needs the upper bound: smaller values already returned
    if val <= 183:
        return 0
    if val <= 230:
        return 50
    if val <= 295:
        return 100
    if val <= 390:
        return 150
    if val <= 470:
        return 200
    if val > 470:
        return 250
    return val
```
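The if-chain in `mapVal` can also be written as one vectorized lookup with `np.digitize`, which makes the band edges explicit and works on a whole array at once. This sketch reuses the thresholds and output values from `mapVal`; `map_values` and `block_counts` are hypothetical names. Since `mapVal` produces six evenly spaced levels for 0 to 5 blocks, the band index is presumably the block count itself.

```python
import numpy as np

# Inclusive upper bound of each band, copied from mapVal
THRESHOLDS = np.array([183, 230, 295, 390, 470])
# Output value of each band, also copied from mapVal
LEVELS = np.array([0, 50, 100, 150, 200, 250])


def block_counts(vals):
    """Band index i such that THRESHOLDS[i-1] < v <= THRESHOLDS[i] (0..5)."""
    # right=True makes each band include its upper edge, matching '<=' in mapVal
    return np.digitize(vals, THRESHOLDS, right=True)


def map_values(vals):
    """Vectorized equivalent of mapVal for numeric (non-NaN) input."""
    return LEVELS[block_counts(vals)]


vals = np.array([0, 183, 184, 230, 231, 295, 300, 390, 391, 470, 471, 1000])
print(map_values(vals))
print(block_counts(vals))
```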

- Dividing the ROI into a grid of cells and applying a linear correction for the static depth error:

```python
def gridWorkspace(roi, gridSize=(23, 11), shift=(0, 5), verbose=False):
    """
    Function: gridWorkspace, to divide the ROI into a grid of cells and
    quantize the depth of each cell.
    ---
    Parameters:
    @param: roi, nd array, cropped region of interest.
    @param: gridSize, tuple, number of (horizontal, vertical) cells of the workspace.
    @param: shift, tuple, static (x, y) offset in pixels to compensate for misalignment.
    @param: verbose, boolean, to show the output of the function.
    ---
    @return: None.
    """
    # Store a deep copy for results:
    roi_copy = roi.copy()
    # Divide the image into a grid:
    verticalCells = gridSize[1]
    horizontalCells = gridSize[0]
    # Cell dimensions
    bigRectWidth = roi_copy.shape[1]
    bigRectHeight = roi_copy.shape[0]
    cellWidth = bigRectWidth // horizontalCells
    cellHeight = bigRectHeight // verticalCells
    x_shift, y_shift = shift
    # Correction values: modes of the first cell, of the last cell in the
    # first row and of the last cell in the first column
    origin = mode(roi[y_shift:y_shift + cellHeight, x_shift:x_shift + cellWidth])
    x_max = mode(roi[y_shift:y_shift + cellHeight,
                     x_shift + (horizontalCells - 1) * cellWidth:x_shift + horizontalCells * cellWidth])
    y_max = mode(roi[y_shift + (verticalCells - 1) * cellHeight:y_shift + verticalCells * cellHeight,
                     x_shift:x_shift + cellWidth])
    print("origin= {}, x_max= {}, y_max= {}".format(origin, x_max, y_max))
    # Per-cell slope: the first and last cells are (N - 1) cells apart, and
    # true division keeps the fractional part that // would discard
    x_corr = (int(x_max) - int(origin)) / (horizontalCells - 1)
    y_corr = (int(y_max) - int(origin)) / (verticalCells - 1)
    print("x_corr = {}, y_corr = {}".format(x_corr, y_corr))
    # Loop through the vertical dimension:
    for j in range(verticalCells):
        # Cell starting y position:
        cY = j * cellHeight + y_shift
        # Loop through the horizontal dimension:
        for i in range(horizontalCells):
            # Cell starting x position:
            cX = i * cellWidth + x_shift
            # Quantize the current cell; int() avoids unsigned wrap-around when subtracting
            cell_mode = int(mode(roi[cY:cY + cellHeight, cX:cX + cellWidth]))
            roi_copy[cY:cY + cellHeight, cX:cX + cellWidth] = mapVal(cell_mode - j * y_corr - i * x_corr)
            # Earlier attempt: mapVal(371 - cell_mode)
            # Draw the cell border
            cv2.rectangle(roi_copy, (cX, cY), (cX + cellWidth, cY + cellHeight), (100, 100, 255), 1)
    # Visualize results
    if verbose:
        plt.imshow(roi_copy)
        plt.show()
```
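The correction above derives the tilt from only three reference cells, so noise in any one of them propagates to the whole grid. A swapped-in alternative (not the method used above) is a least-squares plane fit over every cell known to be at the same level, for example the empty floor cells. This is a sketch with `np.linalg.lstsq`; `fit_plane` and `remove_tilt` are hypothetical names, and choosing the reference cells is left open.

```python
import numpy as np


def fit_plane(i, j, z):
    """Least-squares fit of z ~ a + b*i + c*j; returns (a, b, c).

    i, j: column and row indices of the reference cells.
    z: their per-cell values (e.g. the mode of each cell).
    """
    i = np.asarray(i, dtype=float)
    j = np.asarray(j, dtype=float)
    A = np.column_stack([np.ones_like(i), i, j])
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(z, dtype=float), rcond=None)
    return coeffs


def remove_tilt(cell_values, coeffs):
    """Subtract the fitted slope b*i + c*j from a (rows, cols) grid, keeping the offset a."""
    _, b, c = coeffs
    rows, cols = cell_values.shape
    jj, ii = np.mgrid[0:rows, 0:cols]  # row and column index grids
    return cell_values - (b * ii + c * jj)


# Example: a tilted floor with one stack; the fit uses only the floor cells
jj, ii = np.mgrid[0:11, 0:23]
cells = 100 + 2.0 * ii - 1.5 * jj
floor = np.ones(cells.shape, dtype=bool)
cells[5, 11] += 200  # a stack in the middle of the grid
floor[5, 11] = False
coeffs = fit_plane(ii[floor], jj[floor], cells[floor])
flat = remove_tilt(cells, coeffs)
```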

So when I try:

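```python
path = ""
imName = "d1.png"
# cv2.IMREAD_UNCHANGED (== -1) keeps the raw 16-bit depth values
d1 = cv2.imread(path + imName, cv2.IMREAD_UNCHANGED)
roi = get_roi(d1, verbose=False)
# Invert the depth so that higher stacks get larger values
roi = np.max(roi) - roi
roi[roi < 0] = 0    # no effect: np.max(roi) - roi is never negative
roi[roi > 500] = 0  # discard outliers, e.g. zero-depth pixels, which the inversion turns into np.max(roi)
gridWorkspace(roi, verbose=True)
```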

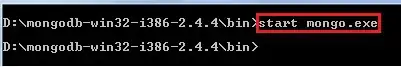

I get this result:

What can I do to improve my segmentation? Thanks in advance.