I've trained a Mask R-CNN on corn images. I cannot show examples because they are confidential, but they are basically pictures of corn kernels scattered over a flat surface.

There are different kinds of corn kernels I want to be able to segment and classify. I understand the AP metrics are the best way of measuring the performance of an instance segmentation algorithm and I know a confusion matrix for this kind of algorithm doesn't usually make sense.

But for this specific case, where I have 4 classes of very similar objects, I would like to fix an IoU threshold, as AP50/AP75 do (IoU 0.5 and 0.75), and build a confusion matrix at that threshold.

Would it be possible? How would I do it?

I used the detectron2 library to train and get predictions. Here is the code I use to load my trained model from disk, generate predictions on the validation set, and visualize the results:

    import detectron2
    from detectron2.utils.logger import setup_logger
    setup_logger()

    import numpy as np
    import matplotlib.pyplot as plt
    import os, json, cv2, random, gc

    from detectron2 import model_zoo
    from detectron2.data.datasets import register_coco_instances
    from detectron2.checkpoint import DetectionCheckpointer, Checkpointer
    from detectron2.data import MetadataCatalog, DatasetCatalog, build_detection_test_loader
    from detectron2.engine import DefaultTrainer, DefaultPredictor
    from detectron2.config import get_cfg
    from detectron2.utils.visualizer import Visualizer, ColorMode
    from detectron2.modeling import build_model
    from detectron2.evaluation import COCOEvaluator, inference_on_dataset

    train_annotations_path = "./data/cvat-corn-train-coco-1.0/annotations/instances_default.json"
    train_images_path = "./data/cvat-corn-train-coco-1.0/images"
    validation_annotations_path = "./data/cvat-corn-validation-coco-1.0/annotations/instances_default.json"
    validation_images_path = "./data/cvat-corn-validation-coco-1.0/images"

    cfg = get_cfg()
    cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))
    cfg.DATASETS.TRAIN = ("train-corn",)
    cfg.DATASETS.TEST = ("validation-corn",)
    cfg.DATALOADER.NUM_WORKERS = 2
    cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")  # Let training initialize from model zoo
    cfg.SOLVER.IMS_PER_BATCH = 2
    cfg.SOLVER.BASE_LR = 0.00025
    cfg.SOLVER.MAX_ITER = 10000
    cfg.SOLVER.STEPS = []
    cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128
    cfg.MODEL.ROI_HEADS.NUM_CLASSES = 4
    cfg.OUTPUT_DIR = "./output"
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.7

    register_coco_instances(
        "train-corn",
        {},
        train_annotations_path,
        train_images_path
    )
    register_coco_instances(
        "validation-corn",
        {},
        validation_annotations_path,
        validation_images_path
    )

    metadata_train = MetadataCatalog.get("train-corn")
    dataset_dicts = DatasetCatalog.get("train-corn")

    cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth")
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.7
    predictor = DefaultPredictor(cfg)

    predicted_images_path = os.path.abspath("./predicted/")
    dataset_dicts_validation = DatasetCatalog.get("validation-corn")

    for d in dataset_dicts_validation:
        im = cv2.imread(d["file_name"])
        outputs = predictor(im)
        v = Visualizer(im[:, :, ::-1],
                       metadata=metadata_train,
                       scale=0.5,
                       instance_mode=ColorMode.IMAGE_BW
        )
        out = v.draw_instance_predictions(outputs["instances"].to("cpu"))
        fig = plt.figure(frameon=False, dpi=1)
        fig.set_size_inches(1024,1024)
        ax = plt.Axes(fig, [0., 0., 1., 1.])
        ax.set_axis_off()
        fig.add_axes(ax)
        ax.imshow(cv2.cvtColor(out.get_image()[:, :, ::-1], cv2.COLOR_BGR2RGB), aspect='auto')
        fig.savefig(f"{predicted_images_path}/{d['file_name'].split('/')[-1]}")

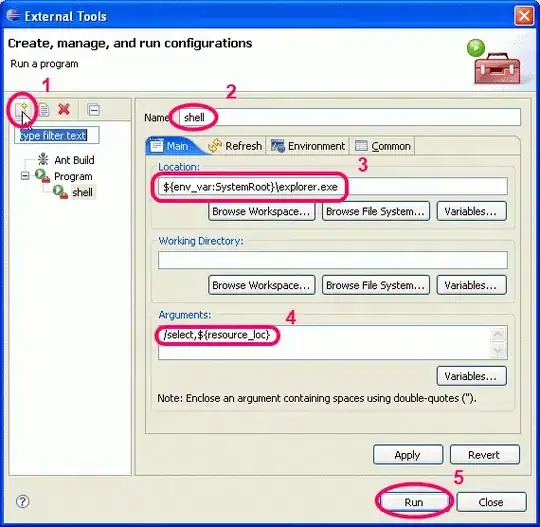

That is what my output for a given image looks like:

It is a dictionary with an Instances object as its only value, the Instances object has four lists: pred_boxes, scores, pred_classes and pred_masks. And can be visualized using the detectron2 visualizer, but I can't show the visualization for confidentiality reasons.

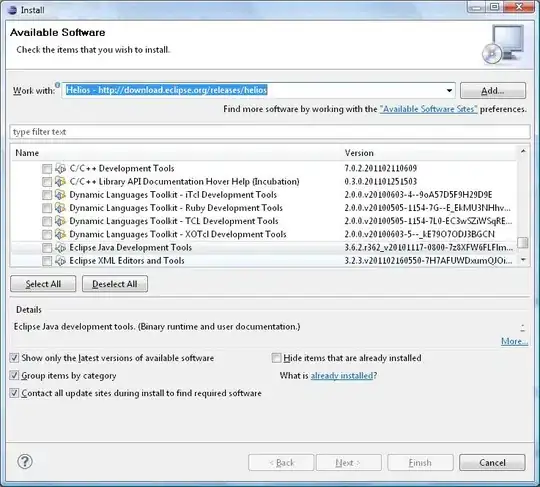

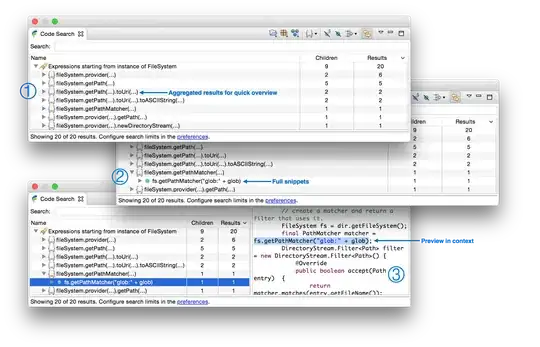

Those are the metrics I have for the model right now:

And I noticed visually that some of the kernels are being confused for other classes, specially between classes ardido and fermentado, that is why I want to somehow be able to build a confusion matrix.

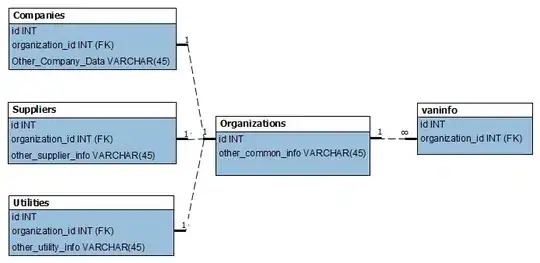

I expect the confusion matrix would look something like this:
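To make the goal concrete, here is a minimal sketch of how such a matrix could be accumulated at one fixed IoU threshold. It is an illustration under stated assumptions, not detectron2 functionality: boxes are assumed to be in `[x1, y1, x2, y2]` form, matching is greedy and one-to-one by descending IoU, and an extra "background" row and column collect unmatched predictions and missed kernels. The helper names `box_iou` and `update_confusion` are hypothetical.

```python
import numpy as np


def box_iou(boxes_a, boxes_b):
    """Pairwise IoU between XYXY boxes of shape (N, 4) and (M, 4)."""
    boxes_a = np.asarray(boxes_a, dtype=float).reshape(-1, 4)
    boxes_b = np.asarray(boxes_b, dtype=float).reshape(-1, 4)
    top_left = np.maximum(boxes_a[:, None, :2], boxes_b[None, :, :2])
    bottom_right = np.minimum(boxes_a[:, None, 2:], boxes_b[None, :, 2:])
    inter = np.clip(bottom_right - top_left, 0, None).prod(axis=2)
    area_a = (boxes_a[:, 2:] - boxes_a[:, :2]).prod(axis=1)
    area_b = (boxes_b[:, 2:] - boxes_b[:, :2]).prod(axis=1)
    union = area_a[:, None] + area_b[None, :] - inter
    return inter / np.maximum(union, 1e-9)


def update_confusion(cm, gt_boxes, gt_classes, pred_boxes, pred_classes, iou_thr=0.5):
    """Add one image to cm of shape (C + 1, C + 1).

    Rows are ground-truth classes, columns are predicted classes.
    Index C means "background": row C counts unmatched predictions,
    column C counts ground-truth objects that were missed.
    """
    background = cm.shape[0] - 1
    iou = box_iou(gt_boxes, pred_boxes)
    # Candidate (gt, pred) pairs above the threshold, best IoU first.
    pairs = np.argwhere(iou >= iou_thr)
    order = np.argsort(-iou[pairs[:, 0], pairs[:, 1]])
    matched_gt, matched_pred = set(), set()
    for g, p in pairs[order]:
        g, p = int(g), int(p)
        if g in matched_gt or p in matched_pred:
            continue  # each box takes part in at most one match
        matched_gt.add(g)
        matched_pred.add(p)
        cm[gt_classes[g], pred_classes[p]] += 1
    for g in range(len(gt_classes)):
        if g not in matched_gt:
            cm[gt_classes[g], background] += 1  # missed kernel
    for p in range(len(pred_classes)):
        if p not in matched_pred:
            cm[background, pred_classes[p]] += 1  # spurious detection
    return cm


# Toy example with 4 classes: one correct match, one class confusion,
# one missed ground truth and one spurious prediction.
cm = np.zeros((5, 5), dtype=int)
gt_boxes = [[0, 0, 10, 10], [20, 20, 30, 30], [50, 50, 60, 60]]
gt_classes = [0, 1, 2]
pred_boxes = [[1, 1, 10, 10], [20, 20, 30, 31], [80, 80, 90, 90]]
pred_classes = [0, 2, 3]
update_confusion(cm, gt_boxes, gt_classes, pred_boxes, pred_classes, iou_thr=0.5)
print(cm)
```

Matching here ignores the class label on purpose, so that a well-localized kernel with the wrong label lands off the diagonal. For instance segmentation, the same accumulation could use mask IoU in place of box IoU.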

EDIT: I found this repository:

https://github.com/kaanakan/object_detection_confusion_matrix

And tried to use it:

    from confusion_matrix import ConfusionMatrix

    cm = ConfusionMatrix(4, CONF_THRESHOLD=0.3, IOU_THRESHOLD=0.3)

    for d in dataset_dicts_validation:
        img = cv2.imread(d["file_name"])
        outputs = predictor(img)
        labels = list()
        detections = list()
        for ann in d["annotations"]:
            labels.append([ann["category_id"]] + ann["bbox"])
        for coord, conf, cls in zip(
            outputs["instances"].get("pred_boxes").tensor.cpu().numpy(),
            outputs["instances"].get("scores").cpu().numpy(),
            outputs["instances"].get("pred_classes").cpu().numpy()
        ):
            detections.append(list(coord) + [conf] + [cls])
        cm.process_batch(np.array(detections), np.array(labels))

But the matrix I got is clearly wrong, and I'm having a hard time trying to fix it.
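One thing that may be worth checking, offered as an assumption and not a confirmed diagnosis: datasets registered with `register_coco_instances` normally keep each annotation's `bbox` in COCO `[x, y, width, height]` form, while `pred_boxes` holds `[x1, y1, x2, y2]` corners, and the repository appears to expect label rows of the form `class, x1, y1, x2, y2`. If that is the case here, the ground-truth boxes would need converting before they are compared. A minimal NumPy sketch of that conversion, with the hypothetical helper names `xywh_to_xyxy` and `labels_from_annotations`:

```python
import numpy as np


def xywh_to_xyxy(boxes):
    """Convert [x, y, width, height] boxes to [x1, y1, x2, y2] corners."""
    boxes = np.asarray(boxes, dtype=float).reshape(-1, 4)
    out = boxes.copy()
    out[:, 2] = boxes[:, 0] + boxes[:, 2]  # x2 = x + width
    out[:, 3] = boxes[:, 1] + boxes[:, 3]  # y2 = y + height
    return out


def labels_from_annotations(annotations):
    """Build rows of [class, x1, y1, x2, y2] from COCO-style annotation dicts.

    Assumes every annotation stores its bbox as [x, y, width, height].
    """
    classes = np.array(
        [ann["category_id"] for ann in annotations], dtype=float
    ).reshape(-1, 1)
    boxes = xywh_to_xyxy([ann["bbox"] for ann in annotations])
    return np.hstack([classes, boxes])


# Made-up annotation, only to show the shape of the result.
example_annotations = [{"category_id": 2, "bbox": [10, 20, 30, 40]}]
labels = labels_from_annotations(example_annotations)
print(labels)
```

In the loop above, `labels_from_annotations(d["annotations"])` would take the place of the hand-built `labels` list. detectron2 also ships `BoxMode.convert` for the same purpose; the NumPy version is used here only to keep the sketch self-contained.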