Nutshell

There is a Google Cloud PubSub topic which we publish 2M tiny/small messages to. We set up a Cloud Function to process messages coming through this topic. When inspecting the logs, we see that a lot of these messages are processed multiple times. All in all we have 150-200% the number of messages coming out that went in (see screenshot at the end).

The questions are: Why is this? And how do we properly configure things to get less duplicates?

Additional information

- Right after the 2M messages are published and the script doing so is finished, the number of messages in the subscription appears to be correct. I think we can thus rule out "publish-side" duplication, marked as

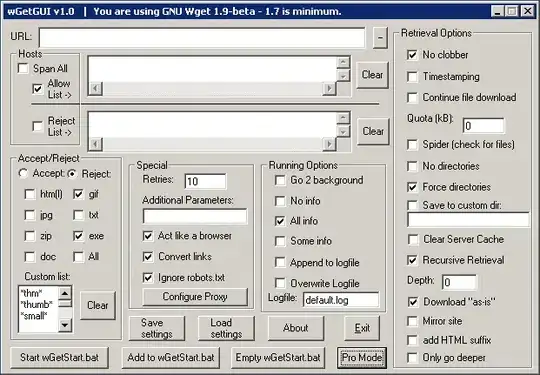

1and2in this article. - The config for the subscription is managed by Cloud Function and can not be edited by us. So it's the default/forced config for all Cloud Functions everywhere, I guess. See screenshot below.

- The most common reason cited for duplication on the "subscriber-side" is functions taking longer than the Ack Deadline to acknowledge messages (e.g. here). The Ack Deadline on the subscription is set to 600s, but the function run time tops out at a couple of seconds max.

- We also don't batch at all, which eliminates another reason often cited (e.g. in this question or this article).

- The function is limited to 90 instances.

Update 15.03.22

So I wanted to be absolutely certain this is "true" duplication, by which I mean it's PubSub delivering messages/events multiple times, instead of duplication clumsily introduced by us somewhere. So I modified our function code to form a Datastore key from our external message ID and context.messageId and write it to the Datastore with a counter. In case the key already exists the counter is incremented. Right afterwards I log the entity. Here are the stats for 458,940 executions:

|-------------------|-------------------|

| Counter | Logs |

|-------------------|-------------------|

| 1 | 208,733 |

| 2 | 101,040 |

| 3 | 62,965 |

| 4 | 37,156 |

| 5 | 20,583 |

| >5 | 28,463 |

| >15 | 20 |

| >20 | 0 |

|-------------------|-------------------|

My only (crummy) lead

The only theory I have right now is that the duplication is due to the underlying infrastructure responding with 429s because of the instance limit. See the following screenshot.

We don't really notice this anymore because we just filter the corresponding log messages out in the logging console by filtering for log_name, as the infrastructure throwing lots of warnings and errors seems to be expected behaviour. This is why I am also skeptical of this being the reason for the duplication. On the other hand it does look like the infrastructure could send NACKs in these cases.

Although it feels like the expected behaviour would be that the delivery of the original message fails with a 429 (but does not show up in our logs) and is is then re-delivered, possibly being duplicated. And this is not what we are observing, our logs do show duplicate executions.

So I am not sure this is a promising lead at all, but it's all I got right now.

Other Resources

I feel like what we are observing sounds similar to what is described in this question and the docs here, but then this is about "StreamingPull" subscriptions, whereas the GCF managed subscriptions seem to be (special?) "Push" subscriptions. I was excited at first, because it sounded so similar, but it seems it's not applicable.

Screenshots

Screenshot showing message duplication between topics:

Subscription config (managed by GCF):