I send metrics from CloudWatch to Datadog via Kinesis Firehose.

When I send multiple values of the same metric within the same second, Datadog always averages them, even when I apply a rollup with sum.

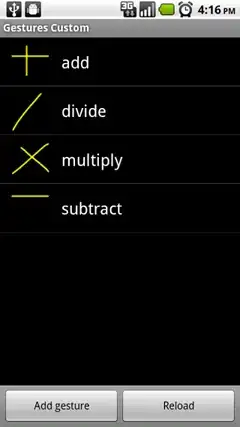

Example

I send three values for the same metric to CloudWatch in quick succession:

aws cloudwatch put-metric-data --namespace example --metric-name test3 --value 1

aws cloudwatch put-metric-data --namespace example --metric-name test3 --value 0

aws cloudwatch put-metric-data --namespace example --metric-name test3 --value 0

In Datadog the value appears as 0.33 (Datadog computed the average):

Even with a rollup(sum, 300) the value is still 0.33:
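
To make the numbers explicit, here is a minimal sketch of the arithmetic in plain Python. It only illustrates what I see versus what I expect; it is not Datadog code and makes no claim about how Datadog aggregates internally. The variable names are my own.

```python
# The three values sent to CloudWatch within the same second
# (taken from the put-metric-data commands above).
values = [1, 0, 0]

# What Datadog displays: the mean of the three values.
average = sum(values) / len(values)

# What I expect from rollup(sum, 300): the total of the three values.
total = sum(values)

print(f"average = {average:.2f}")  # average = 0.33
print(f"sum     = {total}")        # sum     = 1
```

So the graph shows 0.33 where I would expect 1.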

What's going on? How can I force Datadog to perform a sum instead of an average?