I want to measure the distance between pupils to within 2-3 mm. I'm considering using a credit card held on the forehead so that distances in pixels can be converted into mm. I have tried some libraries (such as OpenCV and dlib) but failed to achieve that precision. Does anybody have a suggestion about which library to use, or about how to measure a distance from an image that contains a reference object?
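
The conversion behind the card idea is a single ratio. If a reference of known length `ref_mm` spans `ref_px` pixels, one pixel corresponds to `ref_mm / ref_px` mm. This only holds when the reference and the pupils are at about the same distance from the camera and roughly parallel to the image plane. Here is a minimal sketch; the helper name is hypothetical and does not come from any library:

```python
def pixels_to_mm(distance_px: float, ref_px: float, ref_mm: float) -> float:
    """Convert a pixel distance to millimetres using a reference of known size.

    Assumes the reference object and the measured feature lie in roughly the
    same plane, parallel to the image sensor, so one mm-per-pixel scale applies.
    """
    if ref_px <= 0:
        raise ValueError("reference length in pixels must be positive")
    mm_per_px = ref_mm / ref_px
    return distance_px * mm_per_px


# Hypothetical numbers: the card's 53.98 mm height spans 200 px
# and the pupil centres are 233 px apart.
print(round(pixels_to_mm(233, 200, 53.98), 1))
```

With these made-up numbers the result is about 62.9 mm. Every pixel of error in `ref_px` or `distance_px` feeds straight into that figure.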

Edit: My code is below. After many attempts with different strategies and parameters it is not clean yet, sorry for that:

```python
# -*- coding: utf-8 -*-
import math
from imutils import face_utils
from pathlib import Path
from datetime import datetime
from components.helpers import filename_no_extension
import numpy as np
import argparse
import imutils
import dlib
import cv2
import glob
import os
import mediapipe


def get_center_of_points(landmarks: [], image_shape: ()) -> (int, int):
    # mean of normalized landmarks, converted to pixel coordinates
    center_x = 0
    center_y = 0
    for l in landmarks:
        center_x += l.x * image_shape[1]
        center_y += l.y * image_shape[0]
    center_x = int(center_x / len(landmarks))
    center_y = int(center_y / len(landmarks))
    return center_x, center_y


def get_distance_of_points(point1: (), point2: ()):
    return math.sqrt(math.fabs(point1.x - point2.x) ** 2 + math.fabs(point1.y - point2.y) ** 2)


def mm_per_pixel(points: [], ref_length: float) -> float:
    """Each consecutive pair of points (starting at index 2) marks opposite edges of the card.
    The mean of their pixel distances is the reference length in pixels."""
    assert len(points) % 2 == 0
    distance_in_pixels = 0
    total_lines = 0
    for index in range(2, len(points) - 1, 2):
        print(f"Processing index: {index}")
        distance_in_pixels += get_distance_of_points(points[index], points[index + 1])
        p1 = dlib.point(x=int(points[index].x), y=int(points[index].y))
        p2 = dlib.point(x=int(points[index + 1].x), y=int(points[index + 1].y))
        # draw each measured height as a blue line on the (global) output image
        cv2.line(image, (p1.x, p1.y), (p2.x, p2.y), (255, 0, 0), int(FONT_THICKNESS / 2))
        total_lines += 1
    mean_distance = distance_in_pixels / total_lines
    print(f"Dividing distance_in_pixels with {total_lines} to find mean value")
    print(f"Mean distance: {mean_distance}")
    print(f"1 pixel in mm {ref_length / mean_distance}")
    return ref_length / mean_distance


def mm_per_pixel_from_normalized(points: [], ref_length: float, size: ()) -> float:
    """Points 0/2 and 1/3 are opposite points on the iris boundary; the mean of the two
    diameters is the reference length in pixels. Converts normalized points into
    pixel coordinates using size = (width, height)."""
    assert len(points) % 2 == 0
    distance_in_pixels = 0
    total_lines = 0
    for index in [2, 3]:
        print(f"Processing index: {index}")
        p1 = dlib.point(x=int(points[index].x * size[0]), y=int(points[index].y * size[1]))
        p2 = dlib.point(x=int(points[index - 2].x * size[0]), y=int(points[index - 2].y * size[1]))
        cv2.circle(image, (p1.x, p1.y), CIRCLE_RAD, (0, 0, 255), -1)
        cv2.circle(image, (p2.x, p2.y), CIRCLE_RAD, (0, 0, 255), -1)
        distance_in_pixels += get_distance_of_points(p1, p2)
        total_lines += 1
    mean_distance = distance_in_pixels / total_lines
    print(f"Dividing distance_in_pixels with {total_lines} to find mean value")
    print(f"Mean distance(iris): {mean_distance}")
    print(f"1 pixel in mm(iris) {ref_length / mean_distance}")
    return ref_length / mean_distance


def mm_per_pixel_from_iris(points: [], size: ()) -> float:
    """Calculates pixel distance in mm from iris horizontal side points"""
    assert len(points) % 2 == 0
    distance_in_pixels = 0
    p1 = dlib.point(x=int(points[469].x * size[0]), y=int(points[469].y * size[1]))
    p2 = dlib.point(x=int(points[471].x * size[0]), y=int(points[471].y * size[1]))
    distance_in_pixels += get_distance_of_points(p1, p2)
    cv2.circle(image, (p1.x, p1.y), CIRCLE_RAD, (0, 0, 255), -1)
    cv2.circle(image, (p2.x, p2.y), CIRCLE_RAD, (0, 0, 255), -1)
    p1 = dlib.point(x=int(points[474].x * size[0]), y=int(points[474].y * size[1]))
    p2 = dlib.point(x=int(points[476].x * size[0]), y=int(points[476].y * size[1]))
    distance_in_pixels += get_distance_of_points(p1, p2)
    cv2.circle(image, (p1.x, p1.y), CIRCLE_RAD, (0, 0, 255), -1)
    cv2.circle(image, (p2.x, p2.y), CIRCLE_RAD, (0, 0, 255), -1)
    mean_distance = distance_in_pixels / 2
    print(f"Dividing distance_in_pixels with {2} to find mean value")
    print(f"Mean distance(iris): {mean_distance}")
    print(f"1 pixel in mm(iris) {IRIS_WIDTH / mean_distance}")
    return IRIS_WIDTH / mean_distance


ap = argparse.ArgumentParser()
ap.add_argument("-p", "--shape-predictor", required=True,
                help="path to facial landmark predictor")
ap.add_argument("-i", "--image", required=True,
                help="path to input image")
ap.add_argument("-w", "--width", type=float, required=False,
                help="reference length of the card in mm (default: 53.98, the card height)")
args = vars(ap.parse_args())

drawingModule = mediapipe.solutions.drawing_utils
faceModule = mediapipe.solutions.face_mesh
circleDrawingSpec = drawingModule.DrawingSpec(thickness=1, circle_radius=1, color=(0, 255, 0))
lineDrawingSpec = drawingModule.DrawingSpec(thickness=1, color=(0, 255, 0))

# iris boundary landmarks (only present with refine_landmarks=True)
right_eye_landmark_list = [469, 470, 471, 472]
left_eye_landmark_list = [474, 475, 476, 477]
right_cheek_landmark = [119]
left_cheek_landmark = [348]

IRIS_WIDTH = 11.7  # mm

with faceModule.FaceMesh(static_image_mode=True,
                         refine_landmarks=True,
                         max_num_faces=1) as face:
    image = cv2.imread(args["image"])
    if image is None:  # cv2.imread returns None instead of raising on a bad path
        print(f"Image file not found! File name: {args['image']}")
        exit()
    height, width = image.shape[:2]

    resize_width = 600
    scale_factor = resize_width / width
    scaled_width = int(resize_width)
    scaled_height = int(height * scale_factor)

    PRINT_START_POS_V = int(height / 20)
    PRINT_LINE_HEIGHT = int(height / 30)
    FONT_SIZE = height / 1500
    FONT_THICKNESS = int(height / 400)
    CIRCLE_RAD = int(height / 800)

    results = face.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    if results.multi_face_landmarks is not None:
        for faceLandmarks in results.multi_face_landmarks:
            right_eye_x, right_eye_y = get_center_of_points(np.array(faceLandmarks.landmark)[right_eye_landmark_list],
                                                            image.shape[:2])
            left_eye_x, left_eye_y = get_center_of_points(np.array(faceLandmarks.landmark)[left_eye_landmark_list],
                                                          image.shape[:2])
            right_cheek_x, right_cheek_y = get_center_of_points(np.array(faceLandmarks.landmark)[right_cheek_landmark],
                                                                image.shape[:2])
            left_cheek_x, left_cheek_y = get_center_of_points(np.array(faceLandmarks.landmark)[left_cheek_landmark],
                                                              image.shape[:2])

            # point eye pupils and cheeks
            cv2.circle(image, (right_eye_x, right_eye_y), 3, (255, 0, 0), -1)
            cv2.circle(image, (left_eye_x, left_eye_y), 3, (255, 0, 0), -1)
            cv2.circle(image, (right_cheek_x, right_cheek_y), 2, (0, 0, 255), 2)
            cv2.circle(image, (left_cheek_x, left_cheek_y), 2, (0, 0, 255), 2)

            # 468 and 473 are the iris centre landmarks
            p1 = dlib.point(x=int(faceLandmarks.landmark[473].x * width), y=int(faceLandmarks.landmark[473].y * height))
            p2 = dlib.point(x=int(faceLandmarks.landmark[468].x * width), y=int(faceLandmarks.landmark[468].y * height))
            print(f"Calculated eye center: {p1.x}, {p1.y}")
            print(f"Calculated eye center: {p2.x}, {p2.y}")
            print(f"Detected eye center: {right_eye_x}, {right_eye_y}")
            print(f"Detected eye center: {left_eye_x}, {left_eye_y}")
            cv2.circle(image, (p1.x, p1.y), CIRCLE_RAD, (0, 255, 0), -1)
            cv2.circle(image, (p2.x, p2.y), CIRCLE_RAD, (0, 255, 0), -1)

            if args["width"]:
                cent_width = args["width"]
            else:
                cent_width = 53.98  # default: credit card height (short side), in mm

            card_predictor = dlib.shape_predictor("predictor.dat")
            hand_detector = dlib.simple_object_detector("detector.svm")
            cards = None
            print("Processing file: {}".format(args["image"]))
            img = dlib.load_rgb_image(args["image"])
            dets = hand_detector(img)
            print("Number of hands detected: {}".format(len(dets)))
            if len(dets) > 0:
                rect_det = dets.pop()
                # run the predictor on the same RGB image the detector saw
                cards = card_predictor(img, rect_det)

            if cards is not None:
                print(f"Number of landmarks on card: {len(cards.parts())}")
                for index, p in enumerate(cards.parts()):
                    cv2.circle(image, (p.x, p.y), 1, (0, 0, 255), 2)
                    cv2.putText(image, f" {index + 1}", (cards.part(index).x, cards.part(index).y + 10),
                                cv2.FONT_HERSHEY_SIMPLEX, FONT_SIZE / 2, (0, 0, 255), int(FONT_THICKNESS / 2))
                pixel_in_mm = mm_per_pixel(cards.parts(), cent_width)
                left_eye = dlib.point(x=left_eye_x, y=left_eye_y)
                right_eye = dlib.point(x=right_eye_x, y=right_eye_y)
                pupils_distance_pixels = get_distance_of_points(left_eye, right_eye)
                pupil_distance = pixel_in_mm * pupils_distance_pixels
                cv2.putText(image, f"Pupils Distance(unscaled): {format(pupil_distance, '.1f')} mm",
                            (30, PRINT_START_POS_V),
                            cv2.FONT_HERSHEY_SIMPLEX, FONT_SIZE, (0, 0, 255), FONT_THICKNESS)
                cv2.putText(image, f"1 Pixel in mm: {format(pixel_in_mm, '.3f')}",
                            (30, PRINT_START_POS_V + 3 * PRINT_LINE_HEIGHT),
                            cv2.FONT_HERSHEY_SIMPLEX, FONT_SIZE, (0, 0, 255), FONT_THICKNESS)
            else:
                print("card not detected")

            # second scale estimate: average of both irises, assuming IRIS_WIDTH mm each
            pixel_in_mm_iris = mm_per_pixel_from_normalized(
                np.array(faceLandmarks.landmark)[right_eye_landmark_list],
                IRIS_WIDTH,
                size=(width, height))
            pixel_in_mm_iris += mm_per_pixel_from_normalized(
                np.array(faceLandmarks.landmark)[left_eye_landmark_list],
                IRIS_WIDTH,
                size=(width, height))
            pixel_in_mm_iris /= 2

            left_eye = dlib.point(x=left_eye_x, y=left_eye_y)
            right_eye = dlib.point(x=right_eye_x, y=right_eye_y)
            pupils_distance_pixels = get_distance_of_points(left_eye, right_eye)
            pupil_distance_iris = pixel_in_mm_iris * pupils_distance_pixels
            cv2.putText(image, f"Pupils Distance(from iris): {format(pupil_distance_iris, '.1f')} mm",
                        (30, PRINT_START_POS_V + PRINT_LINE_HEIGHT),
                        cv2.FONT_HERSHEY_SIMPLEX, FONT_SIZE, (0, 0, 255), FONT_THICKNESS)
            cv2.putText(image, f"Pupils Distance(pixels): {int(pupils_distance_pixels)}",
                        (30, PRINT_START_POS_V + 2 * PRINT_LINE_HEIGHT),
                        cv2.FONT_HERSHEY_SIMPLEX, FONT_SIZE, (0, 0, 255), FONT_THICKNESS)
            cv2.putText(image, f"1 Pixel in mm(from iris): {format(pixel_in_mm_iris, '.3f')}",
                        (30, PRINT_START_POS_V + 4 * PRINT_LINE_HEIGHT),
                        cv2.FONT_HERSHEY_SIMPLEX, FONT_SIZE, (0, 0, 255), FONT_THICKNESS)

    # show the output image with the face detections + facial landmarks
    image_scaled = cv2.resize(image, (scaled_width, scaled_height))
    cv2.imshow("Output", np.hstack([image_scaled, ]))
    result_file_path = os.path.join(Path(__file__).parent,
                                    Path(f"data/results/{filename_no_extension(args['image'])}--"
                                         f"{datetime.now().strftime('%d-%m-%Y')}_unscaled.jpg"))
    print(f"Saving processed file to {result_file_path}")
    cv2.imwrite(result_file_path, image)
    cv2.waitKey(0)
```

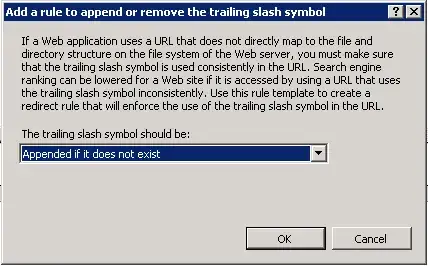

To run that code, I trained an object detector and a shape predictor with the dlib library. I used a training set of 35 photos, taken of two different people. To increase the number of images, I applied transformations such as darkening, changing contrast, changing color space, and scaling, which brought the number of images to ~3000.
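
The augmentations listed here fall into two groups. Photometric ones (darkening, contrast, color space) leave the landmark positions unchanged. Geometric ones (scaling) must apply the same transform to the annotated card box and landmarks, or the labels no longer match the image. Below is a dependency-free sketch of one of each. The function names are hypothetical, and in practice `cv2.resize` would replace the nearest-neighbour indexing:

```python
import numpy as np


def adjust_brightness_contrast(img: np.ndarray, alpha: float, beta: float) -> np.ndarray:
    """Photometric augmentation: out = alpha * img + beta, clipped to [0, 255].

    alpha < 1 lowers contrast and beta < 0 darkens; landmarks do not move.
    """
    out = img.astype(np.float32) * alpha + beta
    return np.clip(out, 0, 255).astype(np.uint8)


def scale_with_landmarks(img: np.ndarray, points: np.ndarray, factor: float):
    """Geometric augmentation: nearest-neighbour rescale of the image.

    `points` is an N x 2 array of (x, y) landmark coordinates. They are scaled by
    the same factor so the labels still sit on the card in the augmented image.
    """
    h, w = img.shape[:2]
    new_h = max(1, int(round(h * factor)))
    new_w = max(1, int(round(w * factor)))
    # source row/column for every destination pixel
    rows = np.minimum((np.arange(new_h) / factor).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / factor).astype(int), w - 1)
    scaled = img[rows[:, None], cols[None, :]]
    return scaled, points.astype(np.float64) * factor


image = np.full((4, 6, 3), 100, dtype=np.uint8)
darker = adjust_brightness_contrast(image, 1.0, -50)
bigger, landmarks = scale_with_landmarks(image, np.array([[2.0, 1.0]]), 2.0)
print(darker.max(), bigger.shape, landmarks.tolist())
```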

My strategy is to detect a credit card held on the forehead (with the key numbers covered, of course :) ). Since credit cards have a standard size (85.60 by 53.98 mm), I thought I could use the card to convert distances in pixels to mm. In addition, I use the MediaPipe library to make a second measurement that uses the iris width as reference, so I can compare both calculations as a kind of validation.
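
One way to make this validation concrete is to compute both scales independently and check how far apart they are before trusting either one. This is an illustrative sketch, not the code above. The function names are hypothetical. The card dimensions are the ones quoted in this post, and the iris value is the same assumed 11.7 mm as `IRIS_WIDTH`. The iris helper assumes, like `mm_per_pixel_from_normalized`, that boundary points 0/2 and 1/3 are opposite each other:

```python
import math

CARD_WIDTH_MM = 85.60   # long side of the card
CARD_HEIGHT_MM = 53.98  # short side of the card
IRIS_WIDTH_MM = 11.7    # assumed iris diameter, same value as IRIS_WIDTH above


def scale_from_card(width_px: float, height_px: float) -> float:
    """mm per pixel, averaging the estimates from the card's two sides."""
    return (CARD_WIDTH_MM / width_px + CARD_HEIGHT_MM / height_px) / 2


def scale_from_iris(boundary, image_w: int, image_h: int) -> float:
    """mm per pixel from four normalized iris boundary points (x, y in [0, 1]).

    Points 0/2 and 1/3 are assumed to be opposite each other; the two
    diameters are averaged.
    """
    px = [(x * image_w, y * image_h) for x, y in boundary]
    d1 = math.dist(px[0], px[2])
    d2 = math.dist(px[1], px[3])
    return IRIS_WIDTH_MM / ((d1 + d2) / 2)


def relative_disagreement(scale_a: float, scale_b: float) -> float:
    """Difference between two scale estimates as a fraction of their mean."""
    return abs(scale_a - scale_b) / ((scale_a + scale_b) / 2)


card = scale_from_card(856, 539.8)
iris = scale_from_iris([(0.6, 0.5), (0.5, 0.4), (0.4, 0.5), (0.5, 0.6)], 100, 100)
print(card, iris, relative_disagreement(card, iris))
```

Comparing the card's width-based and height-based scales against each other is also a cheap sanity check. If those two disagree, the card landmarks are probably misplaced or the card is tilted relative to the camera.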

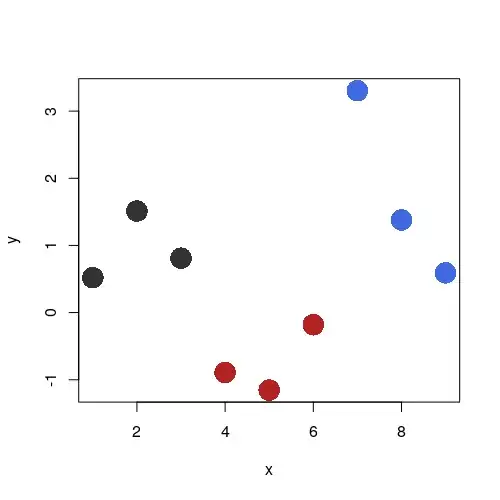

My problem is that my trained detector (which finds the credit card) and predictor (which marks landmarks on the card) work quite well on data similar to the training set. However, when I apply them to a completely different image, they fail (smells like overfitting...). Even when the card is detected and its landmarks are marked correctly, the final measurement is unacceptably wrong. The distance between my pupils is 63 mm, but as the images show, the measurement that uses the credit card as reference has an error of around 15-20%. The measurement that uses the iris width as reference seems fine here, but it is not consistently precise across images and can also be off by up to 15%.
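
To see why 15-20% is far outside the target, it helps to turn the 2-3 mm requirement into a pixel budget. The PD in mm is `pd_px * ref_mm / ref_px`, so to first order a small relative error in the reference length produces the same relative error in the PD. Here is a minimal sketch, assuming the pupil positions themselves are exact. The function names are hypothetical:

```python
def percent_error(measured_mm: float, true_mm: float) -> float:
    """Absolute error as a percentage of the true value."""
    return 100.0 * abs(measured_mm - true_mm) / true_mm


def max_reference_error_px(ref_px: float, tolerance_mm: float, true_mm: float) -> float:
    """Largest error in the reference length (pixels) that keeps the PD within tolerance.

    First-order approximation: relative PD error ~= relative reference error.
    """
    return ref_px * tolerance_mm / true_mm


print(percent_error(75.6, 63))              # a 12.6 mm overestimate of a 63 mm PD
print(max_reference_error_px(200, 2.5, 63))  # hypothetical 200 px card height
```

A 2.5 mm tolerance on 63 mm is about 4%. If the card height spanned 200 px (a made-up figure), the measured height could be off by only about 8 px.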

The blue lines on the card are height measurements. I take 3 different measurements of the card height in pixels and use their mean to reduce the impact of a single false measurement. Given my pupil distance (63 mm), I got good results with MediaPipe on this image, but other tests revealed poor results (see the images below), so I can't rely only on the calculation based on MediaPipe's iris detection.

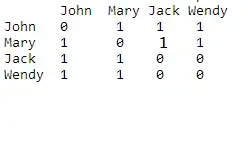
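
Averaging the three card-height measurements limits the effect of one bad measurement but does not remove it. If one of three measurements is off by 30 px, the mean still moves by 10 px. The sketch below averages the measurements and rejects the set when they disagree too much. The pair format and the 2% threshold are illustrative assumptions, not values from the code above:

```python
import math
import statistics


def mean_reference_length(pairs, max_rel_spread: float = 0.02) -> float:
    """Average several pixel measurements of the same card dimension.

    `pairs` is a list of ((x1, y1), (x2, y2)) landmark pairs on opposite card
    edges. Raises ValueError if the measurements differ by more than
    `max_rel_spread` of their mean, which suggests a misplaced landmark.
    """
    lengths = [math.dist(p, q) for p, q in pairs]
    mean = statistics.fmean(lengths)
    spread = (max(lengths) - min(lengths)) / mean
    if spread > max_rel_spread:
        raise ValueError(f"inconsistent reference measurements: {lengths}")
    return mean


heights = [((0, 0), (0, 200)), ((50, 0), (50, 201)), ((100, 0), (100, 199))]
print(mean_reference_length(heights))
```

A median instead of a mean would be another way to limit the influence of a single outlier.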

Below I'm sharing another image, in which the subject is a person who is not in the training set. Since this subject is completely unfamiliar to my object detector and shape predictor, they either failed to detect anything or, when the object detector did find the card, produced a distorted detection, so the landmarks were not placed properly.

The person in the last two images has a pupil distance of 52 mm, so the measurement based on iris width was off by about 5 mm. That is more than my target precision (2-3 mm). The card detection and the card landmark detection also failed.
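
The iris-based result scales linearly with the assumed `IRIS_WIDTH`. One way to read the 5 mm error is to ask what iris diameter would have made the measurement exact. Here is a minimal sketch with a hypothetical helper. The post does not say whether the 52 mm PD was over- or underestimated, so both cases are computed:

```python
def implied_reference_mm(assumed_ref_mm: float, measured_mm: float, true_mm: float) -> float:
    """Reference length that would have produced the true value.

    The estimate is proportional to the assumed reference length, so
    implied / assumed = true / measured.
    """
    return assumed_ref_mm * true_mm / measured_mm


IRIS_WIDTH = 11.7  # mm, same assumption as in the code above
for measured in (57.0, 47.0):  # 52 mm true PD, about 5 mm error either way
    print(measured, round(implied_reference_mm(IRIS_WIDTH, measured, 52.0), 2))
```

The implied diameters are roughly 10.7 mm and 12.9 mm. An error of about 1 mm in the assumed iris diameter would be enough to explain the 5 mm. So would an error of about 10% in the measured iris width in pixels.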

Considering all of this, I'm not sure how to proceed. Should I enlarge my training set and continue with the same strategy, or should I use a different library or a different method to measure the distance between pupils? Any advice is much appreciated. Thanks (and sorry for the long post :) )