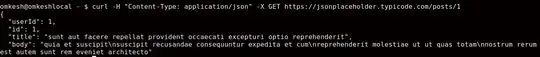

```
[root@bastion ~]# kubectl describe po gitlab-ui-gitaly-0 -n gitlab-system
Name:           gitlab-ui-gitaly-0
Namespace:      gitlab-system
Priority:       0
Node:
Labels:         app=gitaly
                app.kubernetes.io/component=gitaly
                app.kubernetes.io/instance=gitlab-ui-gitaly
                app.kubernetes.io/managed-by=gitlab-operator
                app.kubernetes.io/name=gitlab-ui
                app.kubernetes.io/part-of=gitlab
                chart=gitaly-5.7.1
                controller-revision-hash=gitlab-ui-gitaly-7f87fb98bd
                heritage=Helm
                release=gitlab-ui
                statefulset.kubernetes.io/pod-name=gitlab-ui-gitaly-0
Annotations:    checksum/config: acaaa7500c4f82921dc017dbfb173dd7ee4a44f9704b5bd0bceda31702f06d3d
                gitlab.com/prometheus_port: 9236
                gitlab.com/prometheus_scrape: true
                openshift.io/scc: anyuid
                prometheus.io/port: 9236
                prometheus.io/scrape: true
Status:         Pending
IP:
IPs:
Controlled By:  StatefulSet/gitlab-ui-gitaly
Init Containers:
  certificates:
    Image:      registry.gitlab.com/gitlab-org/build/cng/alpine-certificates:20191127-r2
    Port:
    Host Port:
    Requests:
      cpu:        50m
    Environment:
    Mounts:
      /etc/ssl/certs from etc-ssl-certs (rw)
  configure:
    Image:      registry.gitlab.com/gitlab-org/cloud-native/mirror/images/busybox:latest
    Port:
    Host Port:
    Command:
      sh
      /config/configure
    Requests:
      cpu:        50m
    Environment:
    Mounts:
      /config from gitaly-config (ro)
      /init-config from init-gitaly-secrets (ro)
      /init-secrets from gitaly-secrets (rw)
Containers:
  gitaly:
    Image:       registry.gitlab.com/gitlab-org/build/cng/gitaly:v14.7.1
    Ports:       8075/TCP, 9236/TCP
    Host Ports:  0/TCP, 0/TCP
    Requests:
      cpu:      100m
      memory:   200Mi
    Liveness:   exec [/scripts/healthcheck] delay=30s timeout=3s period=10s #success=1 #failure=3
    Readiness:  exec [/scripts/healthcheck] delay=10s timeout=3s period=10s #success=1 #failure=3
    Environment:
      CONFIG_TEMPLATE_DIRECTORY:  /etc/gitaly/templates
      CONFIG_DIRECTORY:           /etc/gitaly
      GITALY_CONFIG_FILE:         /etc/gitaly/config.toml
      SSL_CERT_DIR:               /etc/ssl/certs
    Mounts:
      /etc/gitaly/templates from gitaly-config (rw)
      /etc/gitlab-secrets from gitaly-secrets (ro)
      /etc/ssl/certs/ from etc-ssl-certs (ro)
      /home/git/repositories from repo-data (rw)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  repo-data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  repo-data-gitlab-ui-gitaly-0
    ReadOnly:   false
  gitaly-config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      gitlab-ui-gitaly
    Optional:  false
  gitaly-secrets:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:     Memory
    SizeLimit:
  init-gitaly-secrets:
    Type:                Projected (a volume that contains injected data from multiple sources)
    SecretName:          gitlab-ui-gitaly-secret
    SecretOptionalName:
    SecretName:          gitlab-ui-gitlab-shell-secret
    SecretOptionalName:
  etc-ssl-certs:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:     Memory
    SizeLimit:
QoS Class:       Burstable
Node-Selectors:
Tolerations:     node.kubernetes.io/memory-pressure:NoSchedule op=Exists
                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason            Age    From               Message
  ----     ------            ----   ----               -------
  Warning  FailedScheduling  82m    default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  82m    default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  66m    default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  65m    default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  52m    default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  4m44s  default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  6s     default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  2m1s   default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
  Warning  FailedScheduling  48s    default-scheduler  0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.
```

Asked by Hassan Shamshir

I am facing the issue shown above: because of the PVC storage class, the pods are stuck in `Pending` status, even though I followed this guide: https://docs.gitlab.com/charts/installation/operator.html
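The scheduler events say the pod has "unbound immediate PersistentVolumeClaims", i.e. the claim `repo-data-gitlab-ui-gitaly-0` from the `Volumes` section has no PersistentVolume bound to it yet. A first diagnostic step is to list which claims in the namespace are not `Bound` and which StorageClass each one requests. The sketch below assumes `kubectl` is configured for this cluster; `unbound_pvcs` is a hypothetical helper name, not part of kubectl or the GitLab Operator.

```shell
#!/bin/sh
# Hypothetical helper, not part of kubectl or the GitLab Operator.
# Reads lines in the three-column format produced by
#   kubectl get pvc -n gitlab-system --no-headers \
#     -o custom-columns=NAME:.metadata.name,STATUS:.status.phase,CLASS:.spec.storageClassName
# and reports every claim whose phase is not "Bound".
# kubectl prints "<none>" in the CLASS column when the claim names no StorageClass.
unbound_pvcs() {
  # Skip blank or short lines; compare the STATUS column (field 2) with "Bound".
  awk 'NF >= 3 && $2 != "Bound" {
    printf "%s is %s (storageClassName: %s)\n", $1, $2, $3
  }'
}
```

Usage: pipe the `kubectl get pvc` command from the comment into `unbound_pvcs`, then run `kubectl get storageclass`, which marks the cluster's default class with `(default)`. If the Gitaly claim shows `<none>` and no class is marked as default, nothing is available to provision a volume for it, which would be consistent with the `FailedScheduling` events; `kubectl describe pvc repo-data-gitlab-ui-gitaly-0 -n gitlab-system` shows the claim's own events.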