(This is a cross-post from the Cross Validated Stack Exchange; I'm putting it here as well.)

I am planning to implement nested cross-validation but have a question about how it works. I know there are lots of posts about nested CV, but none of them (as far as I can tell) addresses my misunderstanding of the process.

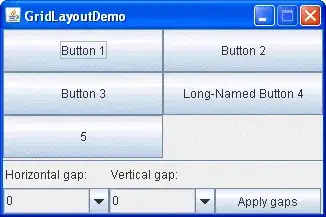

Context: I found the illustration above, taken from the following blog, to be the simplest explanation of what is going on: here.

Question: How does the outer loop work if each of the inner-loop CV processes yields a different optimal set of hyperparameters?

To explain what I mean, I will refer to the image above, which has 3 folds in the outer loop; I will call them Folds 1, 2, and 3.

For the first iteration of the outer loop, we use Fold 1 as the holdout test set and pass Folds 2 & 3 to the inner loop for K-fold CV hyperparameter tuning. Let us say this yields a certain set of optimal hyperparameters: hyperparameter set A. Then we train a model on all of Folds 2 & 3 using set A and test it on Fold 1, which gives accuracy A.

For the next iteration of the outer loop, we use Fold 2 as the holdout test set and pass Folds 1 & 3 to the inner-loop CV process. Let us say this yields a different set of optimal hyperparameters: hyperparameter set B. Then we train a model on all of Folds 1 & 3 using set B and test it on Fold 2, which gives accuracy B.

For completeness, we repeat the above for the third iteration of the outer loop and obtain yet another set of optimal hyperparameters: hyperparameter set C. Then we train a model on all of Folds 1 & 2 using set C and test it on Fold 3, which gives accuracy C.
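The three outer iterations described above can be sketched as a small, dependency-free Python loop. Everything concrete here is a hypothetical stand-in, not something from the post: the toy two-blob dataset, a k-nearest-neighbours classifier whose only hyperparameter is `k`, the candidate grid `CANDIDATE_K`, and the helper names `knn_predict`, `accuracy`, `kfold` and `tune_k`. Each outer iteration runs its own inner 3-fold CV on the outer-training folds only, so the chosen `k` (the analogue of sets A, B and C) may differ from fold to fold.

```python
import random
from collections import Counter

# Hypothetical toy data (not from the post): two overlapping 2-D Gaussian blobs.
random.seed(0)
data = [((random.gauss(0, 1.5), random.gauss(0, 1.5)), 0) for _ in range(60)]
data += [((random.gauss(2, 1.5), random.gauss(2, 1.5)), 1) for _ in range(60)]
random.shuffle(data)

CANDIDATE_K = [1, 3, 5, 9, 15]  # hypothetical hyperparameter grid


def knn_predict(train, x, k):
    """Majority vote among the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: (p[0][0] - x[0]) ** 2 + (p[0][1] - x[1]) ** 2)[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, test, k):
    """Fraction of test points classified correctly by k-NN 'trained' on train."""
    return sum(knn_predict(train, x, k) == y for x, y in test) / len(test)


def kfold(items, n_folds):
    """Yield (train, test) pairs, using each of n_folds interleaved folds once as test."""
    folds = [items[i::n_folds] for i in range(n_folds)]
    for i in range(n_folds):
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        yield train, folds[i]


def tune_k(train, n_inner=3):
    """Inner loop: choose k by mean K-fold CV accuracy, using only the outer-training data."""
    def mean_cv_accuracy(k):
        scores = [accuracy(tr, va, k) for tr, va in kfold(train, n_inner)]
        return sum(scores) / len(scores)
    return max(CANDIDATE_K, key=mean_cv_accuracy)


outer_results = []  # one (chosen k, held-out accuracy) pair per outer fold
for fold_no, (train, test) in enumerate(kfold(data, 3), start=1):
    best_k = tune_k(train)               # analogue of hyperparameter set A, B or C
    acc = accuracy(train, test, best_k)  # use all outer-training folds, score on the held-out fold
    outer_results.append((best_k, acc))
    print(f"Fold {fold_no} held out: best k = {best_k}, accuracy = {acc:.3f}")

mean_acc = sum(acc for _, acc in outer_results) / len(outer_results)
print(f"Mean of the three outer accuracies: {mean_acc:.3f}")
```

Because k-NN simply stores its training data, "train a model on all of Folds 2 & 3 using set A" amounts to passing the full outer-training split to `accuracy`; with a model that has a real fitting step, that is where you would refit it with the chosen hyperparameters.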

This is what I am confused about:

- We now have three different models, each with its own set of hyperparameters. How has the outer loop helped us evaluate performance in a general setting?

- Can I simply take the average of accuracy A, B, and C? If so, what does that represent?
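For reference, the averaging asked about above is what scikit-learn's own nested cross-validation example does: a `GridSearchCV` object (the inner loop) is passed as the estimator to `cross_val_score` (the outer loop), and the mean of the returned scores is reported. The sketch below assumes scikit-learn is installed; the iris dataset, the `SVC` model, the grid `param_grid` and the random seeds are hypothetical choices, not from the post. Under the usual interpretation of nested CV, that mean describes how the whole tune-then-refit procedure performs on unseen data, rather than the score of any one of the three fitted models.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.svm import SVC

# Hypothetical setup (not from the post): iris data, an SVC, and a small grid.
X, y = load_iris(return_X_y=True)
param_grid = {"C": [0.1, 1, 10], "gamma": ["scale", 0.1]}

inner_cv = KFold(n_splits=3, shuffle=True, random_state=1)  # inner loop: tunes hyperparameters
outer_cv = KFold(n_splits=3, shuffle=True, random_state=0)  # outer loop: Folds 1, 2, 3

# On each outer split, GridSearchCV runs its own inner CV on the outer-training folds,
# refits the SVC on all of them with the best parameters it found (refit=True by default),
# and is then scored on the held-out outer fold.
tuned_svc = GridSearchCV(SVC(), param_grid, cv=inner_cv)
outer_scores = cross_val_score(tuned_svc, X, y, cv=outer_cv)  # analogue of accuracy A, B, C

print(outer_scores)
print(f"mean = {outer_scores.mean():.3f}, std = {outer_scores.std():.3f}")
```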

I hope this question makes sense. I can try to elaborate if required.