Summary: How do I train a Naive Bayes classifier on a bag of vectorized sentences?

Example:

X_train[0] = [[0, 1, 0, 0], [1, 0, 0, 1], [0, 0, 0, 1]]

y_train[0] = 1

X_train[1] = [[0, 0, 0, 0], [0, 1, 0, 1], [1, 0, 0, 1], [0, 1, 0, 1]]

y_train[1] = 0


1) Context of the project: perform sentiment analysis on batches of tweets to predict the market

I am working on sentiment analysis for stock market classification. As I am new to these techniques, I tried to replicate the approach from this article: http://cs229.stanford.edu/proj2015/029_report.pdf

But I am facing a big issue with it. Let me explain the main steps of the article that I have implemented:

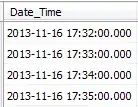

- I collected a large number of tweets (7 million) over 4 months

- I cleaned them (removing stop words, hashtags, mentions, punctuation, etc.)

- I grouped them into 1-hour intervals

- I created a target that tells whether the price of Bitcoin has gone down or up after one hour (0 = down; 1 = up)
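The grouping and target steps above could be sketched with pandas as follows. This is only an illustration on made-up data: the column names `timestamp` and `text`, the toy prices, and the exact definition of "after one hour" (price at the start of the next hour compared with the start of the current one) are all assumptions, not details from the article.

```python
import pandas as pd

# Hypothetical cleaned tweets with timestamps (column names are assumptions).
tweets = pd.DataFrame({
    "timestamp": pd.to_datetime([
        "2021-01-01 10:05", "2021-01-01 10:40",
        "2021-01-01 11:15", "2021-01-01 12:30",
    ]),
    "text": ["like bitcoin", "nice portfolio", "bitcoin falling", "buy dip"],
})

# Hypothetical Bitcoin price at the start of each hour.
prices = pd.Series(
    [100.0, 105.0, 103.0, 104.0],
    index=pd.to_datetime([
        "2021-01-01 10:00", "2021-01-01 11:00",
        "2021-01-01 12:00", "2021-01-01 13:00",
    ]),
)

# Group the tweets into 1-hour intervals: one row per interval, holding a list of tweets.
tweets["interval"] = tweets["timestamp"].dt.floor("h")
train_df = tweets.groupby("interval")["text"].apply(list).rename("Tweet").to_frame()

# Target: 1 if the price one hour later is higher than at the interval start, else 0.
went_up = (prices.shift(-1) > prices).astype(int)
train_df["target"] = went_up.reindex(train_df.index)

print(train_df)
```

Each row of `train_df` then holds a variable-length list of tweets and a single 0/1 target, which is the structure the rest of the question relies on.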

What I need to do next is train my Bernoulli Naive Bayes model on this data. To do this, the article says to vectorize the tweets, which I did with the CountVectorizer class from sklearn.
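For reference, a minimal sketch of that vectorization step on toy data. The tweets are made up, and `binary=True` and `max_features=10_000` are assumptions chosen to match the 0/1 values and the vocabulary size of 10 000 described in this question.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical cleaned tweets from one 1-hour interval.
tweets = ["like bitcoin", "nice portfolio", "bitcoin bitcoin rising"]

# binary=True records word presence (0/1) rather than counts;
# max_features caps the vocabulary size.
vectorizer = CountVectorizer(binary=True, max_features=10_000)
X = vectorizer.fit_transform(tweets)  # sparse matrix, one row per tweet

print(sorted(vectorizer.vocabulary_))  # the learned vocabulary
print(X.toarray())                     # shape: (nb_tweets, vocabulary_size)
```

Each interval therefore produces a 2D matrix with one row per tweet, not a single feature vector.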

2) The issue: the dimensions of the inputs don't match what Naive Bayes expects

But I run into an issue when I try to fit the Bernoulli Naive Bayes model following the article's method.

One observation is shaped this way:

- input shape (one observation): (nb_tweets_on_this_1hour_interval, vocabulary_size = 10 000)

    one_observation_input = [
        [0, 1, 0, ..., 0, 0],  # Tweet 1 vectorized
        ...,
        [1, 0, ..., 1, 0]  # Tweet N vectorized
    ]  # All of the values are 0 or 1

- output shape (one observation): (1,)

    one_observation_output = [0]  # Can only be 0 or 1

When I try to fit my sklearn Bernoulli Naive Bayes model with this type of value, I get this error:

>>> ValueError: Found array with dim 3. Estimator expected <= 2.

Indeed, the model expects each observation to be a single binary vector:

input: (nb_features,)

ex: [0, 0, 1, 0, ..., 1, 0, 1]

while I am giving it a list of binary vectors (one per tweet) for each observation!
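The mismatch can be reproduced on a tiny example. This sketch uses a 4-word vocabulary as a stand-in for the real 10 000 words; the values are illustrative only.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB

# One observation: 3 tweets from a single interval, each a binary vector.
one_observation_input = np.array([[0, 1, 0, 0], [1, 0, 0, 1], [0, 0, 0, 1]])
one_observation_output = 1

# Wrapping the matrix in a list adds a third axis: (1, nb_tweets, vocabulary_size).
wrapped = np.asarray([one_observation_input])
print(wrapped.shape)  # (1, 3, 4)

bnb = BernoulliNB()
try:
    bnb.partial_fit(wrapped, [one_observation_output], classes=[0, 1])
    error_message = None
except ValueError as exc:
    # scikit-learn estimators accept X of shape (n_samples, n_features) only.
    error_message = str(exc)
    print(error_message)
```

The estimator treats the first axis as samples and the second as features, so a third axis has no meaning for it.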

3) What I have tried

So far, I have tried several things to resolve this:

- Assigning the interval's label to every tweet, but the results are not good since individual tweets are really noisy

- Flattening the inputs so that the shape of one input is (nb_tweets_on_this_1hour_interval * vocabulary_size,). But the model cannot train, as the number of tweets per hour is not constant.
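The two attempts above can be sketched on the toy data from the summary. This is a simplified illustration, not the full pipeline: the first loop runs without error (each tweet becomes its own sample), while the flattened lengths show why the second attempt cannot give a fixed number of features.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB

# Two toy intervals with different numbers of tweets (values from the summary example).
intervals = [
    (np.array([[0, 1, 0, 0], [1, 0, 0, 1], [0, 0, 0, 1]]), 1),
    (np.array([[0, 0, 0, 0], [0, 1, 0, 1], [1, 0, 0, 1], [0, 1, 0, 1]]), 0),
]

# Attempt 1: every tweet inherits the label of its interval.
bnb = BernoulliNB()
for X_interval, label in intervals:
    y_interval = np.full(X_interval.shape[0], label)  # one label per tweet
    bnb.partial_fit(X_interval, y_interval, classes=[0, 1])

# Attempt 2: flattening gives vectors whose length depends on the tweet count.
flattened_lengths = [X_interval.ravel().shape[0] for X_interval, _ in intervals]
print(flattened_lengths)  # [12, 16]
```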

4) Conclusion

I don't know whether the error comes from my misunderstanding of the article or of Naive Bayes models.

How can I efficiently train a Naive Bayes classifier on a bag of tweets?

Here is my training code:

    from sklearn.naive_bayes import BernoulliNB

    # `vectorizer` is the CountVectorizer mentioned above, already fitted on the tweets
    bnb = BernoulliNB()

    # The 2 classes I want to classify the tweets with. This is needed for partial_fit
    uniqueY = [0, 1]

    # I use a for loop to partially fit my Bernoulli Naive Bayes classifier, to avoid out-of-memory issues
    for _index, row in train_df.iterrows():
        # row["Tweet"] contains all the (cleaned) tweets of a 1-hour interval:
        # ["I like Bitcoin", "Nice portfolio", ...., "I am the last tweet of the interval"]
        # X_train holds those tweets vectorized with a bag-of-words algorithm:
        # [[0, 1, 0, ..., 0, 0], ..., [1, 0, ..., 1, 0]]
        X_train = vectorizer.transform(row["Tweet"]).toarray()

        # Target is 0 if the market goes down after the tweets and 1 if it goes up
        y_train = row["target"]

        # Again, I use partial_fit to avoid out-of-memory issues
        bnb.partial_fit([X_train], [y_train], uniqueY)