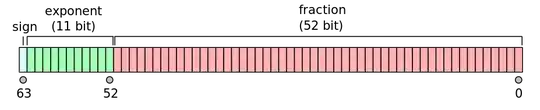

I am training a model on a fingerprint dataset for minutiae point detection. The inputs are slap fingerprint images rescaled to 128×128, and the ground truth (GT) is a 12-channel minutiae map: each channel represents one bin of minutiae orientation, and dots are marked at the (x, y) locations of the minutiae. I'm showing the input and GT channels 3 and 8 as examples.
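To make the ground-truth layout concrete, here is a minimal NumPy sketch of how such a 12-channel map could be built. It assumes (none of this is stated in the post) that each minutia is given as `(x, y, theta)` with coordinates already on the 128×128 grid and `theta` in radians, that the 12 bins split [0, 2π) evenly, and that each minutia is drawn as a single-pixel dot; `make_minutiae_map` is a hypothetical name.

```python
import math

import numpy as np


def make_minutiae_map(minutiae, size=128, n_bins=12):
    """Hypothetical helper: build an (n_bins, size, size) GT map.

    Assumes `minutiae` is an iterable of (x, y, theta) tuples, with x, y on
    the size x size grid and theta in radians.
    """
    gt = np.zeros((n_bins, size, size), dtype=np.float32)
    bin_width = 2 * math.pi / n_bins
    for x, y, theta in minutiae:
        # Wrap the angle into [0, 2*pi) and pick its orientation bin
        b = int((theta % (2 * math.pi)) // bin_width)
        b = min(b, n_bins - 1)  # guard against floating-point edge cases
        col, row = int(round(x)), int(round(y))
        if 0 <= row < size and 0 <= col < size:
            gt[b, row, col] = 1.0  # one single-pixel dot per minutia
    return gt


gt = make_minutiae_map([(10, 20, 1.0), (64.4, 100.6, 3.5)])
print(gt.shape, gt.sum())
```

A real pipeline may draw larger blobs (e.g. Gaussians) instead of single pixels; that only changes the line that writes `1.0`.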

The input images are normalized to the range [-1, 1] to match the model's tanh output.
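A minimal sketch of that normalization, assuming 8-bit grayscale source images with values 0..255 (the post does not say how the scaling is done); the function names are hypothetical:

```python
import numpy as np


def to_tanh_range(img_uint8):
    """Hypothetical helper: map 8-bit pixel values 0..255 linearly to [-1, 1]."""
    return img_uint8.astype(np.float32) / 127.5 - 1.0


def from_tanh_range(x):
    """Hypothetical inverse: map values in [-1, 1] back to [0, 1] for display."""
    return (x + 1.0) / 2.0


img = np.array([[0, 128, 255]], dtype=np.uint8)
print(to_tanh_range(img))
print(from_tanh_range(to_tanh_range(img)))
```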

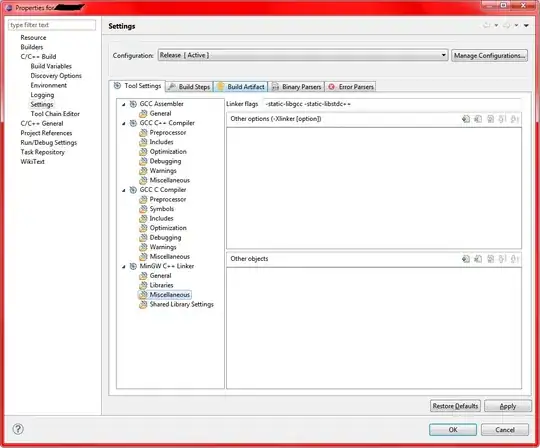

The problem is that the intermediate prediction-layer outputs I'm getting, shown below, are incomprehensible.

Here's my simple autoencoder model:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class AEC(nn.Module):
    def __init__(self, in_ch=3, out_ch=12):
        super().__init__()
        print('in_ch=', in_ch)
        print('out_ch=', out_ch)

        # Encoder: each 2x2, stride-2 conv halves the spatial size (128 -> 8)
        self.conv1 = nn.Conv2d(in_ch, 16, 2, stride=2)
        self.conv2 = nn.Conv2d(16, 32, 2, stride=2)
        self.conv3 = nn.Conv2d(32, 64, 2, stride=2)
        self.conv4 = nn.Conv2d(64, 128, 2, stride=2)

        # Decoder: each 2x2, stride-2 transposed conv doubles it (8 -> 128)
        self.t_conv1 = nn.ConvTranspose2d(128, 64, 2, stride=2)
        self.t_conv2 = nn.ConvTranspose2d(64, 32, 2, stride=2)
        self.t_conv3 = nn.ConvTranspose2d(32, 16, 2, stride=2)
        self.t_conv4 = nn.ConvTranspose2d(16, out_ch, 2, stride=2)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        x = F.relu(self.conv3(x))
        x = F.relu(self.conv4(x))
        x = F.relu(self.t_conv1(x))
        x = F.relu(self.t_conv2(x))
        x = F.relu(self.t_conv3(x))
        # tanh keeps all out_ch output channels in [-1, 1]
        x = torch.tanh(self.t_conv4(x))
        return x
```
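A quick way to sanity-check the architecture is to trace the spatial size through each layer. The sketch below uses the output-size formulas from the `torch.nn.Conv2d` and `torch.nn.ConvTranspose2d` documentation with padding 0, dilation 1 and output_padding 0 (the defaults the model uses); the helper names are hypothetical and it does not need PyTorch to run.

```python
def conv2d_out(h, k=2, s=2, p=0, d=1):
    # Output size per the torch.nn.Conv2d docs: floor((h + 2p - d(k-1) - 1) / s + 1)
    return (h + 2 * p - d * (k - 1) - 1) // s + 1


def conv_transpose2d_out(h, k=2, s=2, p=0, d=1, output_padding=0):
    # Output size per the torch.nn.ConvTranspose2d docs
    return (h - 1) * s - 2 * p + d * (k - 1) + output_padding + 1


def trace_aec_shapes(h=128, in_ch=3, out_ch=12):
    """Hypothetical helper: (channels, H, W) after every layer of AEC."""
    shapes = [(in_ch, h, h)]
    for c_out in (16, 32, 64, 128):       # conv1..conv4
        h = conv2d_out(h)
        shapes.append((c_out, h, h))
    for c_out in (64, 32, 16, out_ch):    # t_conv1..t_conv4
        h = conv_transpose2d_out(h)
        shapes.append((c_out, h, h))
    return shapes


for shape in trace_aec_shapes():
    print(shape)
```

With PyTorch installed, the equivalent check on the real model is `AEC()(torch.zeros(1, 3, 128, 128)).shape`, which should give `torch.Size([1, 12, 128, 128])`. The trace also makes the bottleneck explicit: 128 channels at 8×8.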

Code for training and viewing the intermediate TensorBoard outputs:

```python
import torch
from torchvision.utils import make_grid
from tqdm import tqdm

# min_net, optimizer_min, l2_loss_fusion, train_loader, writer and the
# counters/settings used below are defined elsewhere (not shown here).
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

for i, data in tqdm(enumerate(train_loader), total=len(train_loader)):
    ite_num = ite_num + 1
    ite_num4val = ite_num4val + 1

    inputs, minmap = data['image'], data['minmap']

    # Cast to float32 and move to the training device (Variable is no longer needed)
    inputs_v = inputs.float().to(device)
    minmap = minmap.float().to(device)

    optimizer_min.zero_grad()
    d0_min = min_net(inputs_v)

    # calculate loss
    min_each_loss = l2_loss_fusion(d0_min, minmap)
    min_each_loss.backward()
    optimizer_min.step()

    running_loss_min += min_each_loss.item()

    if ite_num % save_tensorboard == 0:
        writer.add_scalars('Loss_Statistics', {'Loss_total_min': min_each_loss.item()},
                           global_step=ite_num)

        grid_image = make_grid(inputs_v[0].detach().cpu(), 1, normalize=True)
        writer.add_image('input', grid_image, ite_num)

        # Prediction, channels 3 and 8: sigmoid, then min-max scale to [0, 1]
        res = d0_min[0].detach().sigmoid().cpu().numpy()[3, :, :]
        res = (res - res.min()) / (res.max() - res.min() + 1e-8)
        writer.add_image('Pred_layer_min_channel3', torch.tensor(res), ite_num, dataformats='HW')

        res = d0_min[0].detach().sigmoid().cpu().numpy()[8, :, :]
        res = (res - res.min()) / (res.max() - res.min() + 1e-8)
        writer.add_image('Pred_layer_min_channel8', torch.tensor(res), ite_num, dataformats='HW')

        # Ground truth, channels 3 and 8: same display path
        res = minmap[0].detach().sigmoid().cpu().numpy()[3, :, :]
        res = (res - res.min()) / (res.max() - res.min() + 1e-8)
        writer.add_image('Ori_layer_min_channel3', torch.tensor(res), ite_num, dataformats='HW')

        res = minmap[0].detach().sigmoid().cpu().numpy()[8, :, :]
        res = (res - res.min()) / (res.max() - res.min() + 1e-8)
        writer.add_image('Ori_layer_min_channel8', torch.tensor(res), ite_num, dataformats='HW')

    del d0_min, min_each_loss

    if ite_num % save_frq == 0:
        torch.save(min_net.state_dict(),
                   model_dir + model_name + "_min_bce_itr_%d_train_tar_%3f.pth"
                   % (ite_num, running_loss_min / ite_num4val))
        running_loss_min = 0.0
        min_net.train()  # resume train
        ite_num4val = 0
```

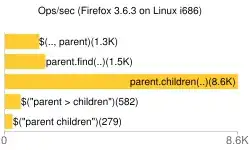

My model's loss curve after 8k iterations in epoch 1

Could you help me figure out where I am going wrong? Is there something wrong with how I am visualizing the outputs in TensorBoard?
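The display path in the TensorBoard block applies `.sigmoid()` to `d0_min`, which has already been through `tanh`, and then min-max scales each channel per image. A minimal NumPy sketch of that path next to a fixed-scale alternative, run on a synthetic, nearly constant channel (the helper names and the synthetic input are hypothetical; this says nothing about what the real network actually outputs):

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def display_like_loop(channel):
    """Hypothetical helper mirroring the loop: sigmoid on an already-tanh'd
    channel, then per-image min-max scaling to [0, 1]."""
    s = sigmoid(channel)
    return (s - s.min()) / (s.max() - s.min() + 1e-8)


def display_fixed_range(channel):
    """Hypothetical alternative: map a tanh output in [-1, 1] linearly to
    [0, 1] with the same fixed scale for every image and iteration."""
    return np.clip((channel + 1.0) / 2.0, 0.0, 1.0)


# After the extra sigmoid, a tanh output can only span about
# sigmoid(-1) ~ 0.269 to sigmoid(1) ~ 0.731; the sigmoid is monotonic,
# so it only squeezes the range before min-max stretches it again.
print(round(float(sigmoid(-1.0)), 3), round(float(sigmoid(1.0)), 3))

# Synthetic, nearly constant "prediction": values in [-0.99, -0.989)
rng = np.random.default_rng(0)
pred = -0.99 + 1e-3 * rng.random((128, 128))

stretched = display_like_loop(pred)
fixed = display_fixed_range(pred)
print("min-max display range:", stretched.min(), stretched.max())
print("fixed-scale display range:", fixed.min(), fixed.max())
```

On a channel like this, the loop's display stretches variation of about 1e-3 to fill the whole [0, 1] range, so it looks like structured noise, while the fixed-scale view shows it as uniformly dark. In the loop, a fixed-scale view of a prediction channel could be something like `(d0_min[0, 3].detach().cpu().numpy() + 1) / 2`, without the `.sigmoid()` and min-max lines.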