I am training deep models for MRI segmentation, specifically U-Net++ and UNet3+. When I plot the training and validation losses over time, every model shows the same pattern: a sudden drop in loss, followed by a permanent plateau. Any ideas what could be causing this plateau, or how I could get past it?

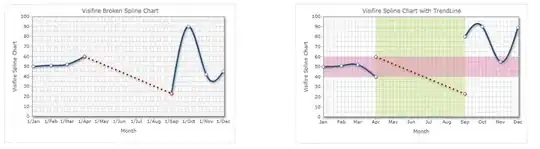

The plots above show the training and validation loss curves, along with the corresponding segmentation performance (Dice score) on the validation set. The drop in loss occurs at around epoch 80 and is clearly visible in the graphs.
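To pin down the epoch of the drop more precisely than by eye, something like the following could be run on the logged per-epoch losses. This is only a minimal sketch: `find_sudden_drop` and `plateau_spread` are hypothetical helpers, and the loss values are synthetic placeholders, not my real results.

```python
def find_sudden_drop(losses):
    """Return (epoch, drop) for the largest single-epoch decrease in loss.

    `epoch` is the 0-based index of the first epoch after the drop.
    """
    if len(losses) < 2:
        raise ValueError("need at least two epochs of loss values")
    # drops[i] is the decrease going from epoch i to epoch i + 1.
    drops = [losses[i - 1] - losses[i] for i in range(1, len(losses))]
    best = max(range(len(drops)), key=drops.__getitem__)
    return best + 1, drops[best]


def plateau_spread(losses, start):
    """Max minus min of the loss from `start` onward (0 means perfectly flat)."""
    tail = losses[start:]
    return max(tail) - min(tail)


# Synthetic placeholder curve: slow decay, a step down at epoch 80, then flat.
losses = [1.0 - 0.002 * e for e in range(80)] + [0.5] * 20
epoch, drop = find_sudden_drop(losses)
spread = plateau_spread(losses, epoch)
print(epoch, round(drop, 3), spread)
```

Knowing the exact epoch makes it easier to check whether the drop lines up with anything scheduled in the training configuration.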

Here is what I've tried so far:

- Perhaps the optimizer is stuck in a local minimum that is hard to escape. I tried resuming training at epoch 250 with the learning rate increased by a factor of 10, but the plateau stays exactly the same no matter how many more epochs I train. I also tried resuming with the learning rate reduced by factors of 10 and 100, and that made no difference either.

- Perhaps the model has too many parameters, i.e. the plateau is caused by over-fitting. I tried training models with fewer parameters. This changed the loss value (the Y-axis value) at which the plateau occurs, but the general shape of a sudden drop followed by a plateau stayed the same. I also tried increasing the number of parameters (because it was easy to do), and I observed the same problem.
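For concreteness, the learning-rate change on resume amounts to something like the following. It is a sketch, not my actual training code: it assumes a PyTorch-style optimizer that exposes `param_groups` (as `torch.optim` optimizers do), `scale_learning_rate` is a hypothetical helper, and a stand-in object replaces the real optimizer so that the snippet runs on its own. The base learning rate of `1e-3` is a placeholder.

```python
from types import SimpleNamespace


def scale_learning_rate(optimizer, factor):
    """Multiply the learning rate of every parameter group by `factor`.

    Works on any object with a PyTorch-style `param_groups` list of dicts.
    Returns the new learning rates, one per group.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    for group in optimizer.param_groups:
        group["lr"] *= factor
    return [group["lr"] for group in optimizer.param_groups]


# Stand-in for the optimizer restored from the epoch-250 checkpoint.
# The learning rate of 1e-3 is a placeholder, not my actual setting.
optimizer = SimpleNamespace(param_groups=[{"lr": 1e-3}])

increased = scale_learning_rate(optimizer, 10)    # resume with 10x higher LR
optimizer.param_groups[0]["lr"] = 1e-3            # reset to the placeholder base LR
reduced = scale_learning_rate(optimizer, 0.01)    # resume with 100x lower LR
```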

Any ideas what could be causing this plateau, or how I could get past it?