I'm experimenting with compression algorithms and ran into an issue: my decoding algorithm gives the expected result when the encoded input is short, but once the input reaches a certain level of complexity it starts returning garbage.

Through some debugging, I found that the issue is caused by tracking the current node of my tree traversal in a "normal" (non-pointer) variable:

```cpp
auto currentNode = root;
```

After changing this to track the current node with a pointer, the issue was resolved:

```cpp
const TreeNode* currentNode = &root;
```

Alternatively, replacing:

```cpp
currentNode = currentNode.children[bit];
```

with:

```cpp
auto cache = currentNode.children[bit];
currentNode = cache;
```

also resolved the issue.
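For comparison, the `cache` workaround can be written out as a complete function. This is a sketch that assumes the same `TreeNode` layout as the code further down; `DecodeWithCache` is a hypothetical name, and `size` is taken as `std::size_t` instead of `int` only to avoid a signed/unsigned comparison. The point it shows is ordering: the child is copied into a separate local object first, and `currentNode` is overwritten only afterwards.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Same node layout as in the question's code.
struct TreeNode {
    char symbol;                     // Only relevant to leaf nodes
    std::vector<TreeNode> children;  // 0 children for leaves, exactly 2 otherwise

    TreeNode(unsigned char symbol) : symbol(symbol) { }
    TreeNode(unsigned char symbol, TreeNode left, TreeNode right)
        : symbol(symbol), children{ left, right } { }
};

// Hypothetical variant of DecodeWithVars that applies the `cache` workaround.
std::vector<unsigned char> DecodeWithCache(const std::vector<unsigned char>& input,
                                           const TreeNode& root, std::size_t size) {
    std::vector<unsigned char> output;
    TreeNode currentNode = root;
    for (unsigned char c : input) {
        for (int i = 0; i <= 7; i++) {
            int bit = (c >> (7 - i)) & 1;                // most significant bit first
            TreeNode cache = currentNode.children[bit];  // independent copy of the child
            currentNode = cache;                         // overwrite only after the copy exists
            if (currentNode.children.empty()) {          // reached a leaf
                output.push_back(currentNode.symbol);
                currentNode = root;
                if (output.size() == size) {
                    return output;
                }
            }
        }
    }
    return output;
}
```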

What I wasn't able to do (even with some help from someone else) was identify the cause of the undefined behaviour. Everything indicates that it has something to do with this assignment:

```cpp
currentNode = currentNode.children[bit];
```

but that's all we could find.
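One detail that may be relevant (an observation, not a confirmed diagnosis): in that assignment the right-hand side is a reference to an element stored inside `currentNode`'s own `children` vector. The implicitly generated copy assignment operator assigns member by member, so `currentNode.children` gets overwritten while the object being copied from lives inside that same vector. The sketch below only checks this aliasing by comparing addresses; it does not perform the suspicious assignment. `RefersIntoChildren` is a hypothetical helper.

```cpp
#include <vector>

// Same node layout as in the question's code.
struct TreeNode {
    char symbol;                     // Only relevant to leaf nodes
    std::vector<TreeNode> children;  // 0 children for leaves, exactly 2 otherwise

    TreeNode(unsigned char symbol) : symbol(symbol) { }
    TreeNode(unsigned char symbol, TreeNode left, TreeNode right)
        : symbol(symbol), children{ left, right } { }
};

// Returns true if `candidate` is one of the elements owned by `parent.children`,
// i.e. if `parent = candidate` would copy from storage that the assignment replaces.
bool RefersIntoChildren(const TreeNode& parent, const TreeNode& candidate) {
    for (const TreeNode& child : parent.children) {
        if (&child == &candidate) {  // equality comparison of addresses is well-defined
            return true;
        }
    }
    return false;
}
```

With this, `RefersIntoChildren(node, node.children[bit])` is `true`, while a copy such as `auto cache = node.children[bit];` is a separate object for which it returns `false`.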

What's the reason behind the undefined behaviour?

Code:

```cpp
#include <vector>
#include <string>
#include <iostream>

struct TreeNode {
    char symbol; // Only relevant to leaf nodes
    std::vector<TreeNode> children; // Leaf nodes have 0 children, all other nodes have exactly 2

    TreeNode(unsigned char symbol) : symbol(symbol), children({}) { }
    TreeNode(unsigned char symbol, TreeNode left, TreeNode right) : symbol(symbol), children({ left, right }) { }
};

/// <summary>
/// Decodes provided `input` data up to `size` using the tree rooted at `root` to decode
/// </summary>
/// <param name="input">Encoded data</param>
/// <param name="root">Root node of decoding tree</param>
/// <param name="size">Size of unencoded data</param>
/// <returns>Unencoded data</returns>
std::vector<unsigned char> DecodeWithVars(const std::vector<unsigned char>& input, const TreeNode& root, int size) {
    std::vector<unsigned char> output = {};
    auto currentNode = root;
    for (auto& c : input) {
        for (int i = 0; i <= 7; i++) {
            int bit = (c >> (7 - i)) & 1; // Iterating over each bit of each character in `input`
            currentNode = currentNode.children[bit];
            if (currentNode.children.size() == 0) {
                output.push_back(currentNode.symbol);
                currentNode = root;
                if (output.size() == size) {
                    return output;
                }
            }
        }
    }
    return output;
}

/// <summary>
/// Decodes provided `input` data up to `size` using the tree rooted at `root` to decode
/// Different from DecodeWithVars in that it uses a pointer to keep track of current tree node
/// </summary>
/// <param name="input">Encoded data</param>
/// <param name="root">Root node of decoding tree</param>
/// <param name="size">Size of unencoded data</param>
/// <returns>Unencoded data</returns>
std::vector<unsigned char> DecodeWithPointers(const std::vector<unsigned char>& input, const TreeNode& root, int size) {
    std::vector<unsigned char> output = {};
    const TreeNode* currentNode = &root;
    for (auto& c : input) {
        for (int i = 0; i <= 7; i++) {
            int bit = (c >> (7 - i)) & 1; // Iterating over each bit of each character in `input`
            currentNode = &(*currentNode).children[bit];
            if ((*currentNode).children.size() == 0) {
                output.push_back((*currentNode).symbol);
                currentNode = &root;
                if (output.size() == size) {
                    return output;
                }
            }
        }
    }
    return output;
}

int main()
{
    std::string unencodedText = "AAAAAAAAAAAAAAABBBBBBBC,.,.,.,.,.,.CCCCCDDDDDDEEEEE";
    std::vector<unsigned char> data = { 0,0,0,1,36,146,78,235,174,186,235,155,109,201,36,159,255,192 };

    TreeNode tree = TreeNode('*',
        TreeNode('*',
            TreeNode('A'),
            TreeNode('*',
                TreeNode('B'),
                TreeNode('C')
            )
        ),
        TreeNode('*',
            TreeNode('*',
                TreeNode('D'),
                TreeNode(',')
            ),
            TreeNode('*',
                TreeNode('.'),
                TreeNode('E')
            )
        )
    );

    auto decodedFromPointers = DecodeWithPointers(data, tree, unencodedText.size());
    std::string strFromPointers(decodedFromPointers.begin(), decodedFromPointers.end());

    auto decodedFromVars = DecodeWithVars(data, tree, unencodedText.size());
    std::string strFromVars(decodedFromVars.begin(), decodedFromVars.end());

    std::cout << strFromPointers << "\n";
    std::cout << strFromVars << "\n";

    return 0;
}
```

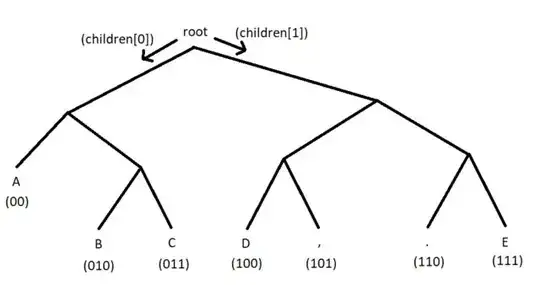

For reference, tree represents the following tree:

Using MSVC (Microsoft (R) C/C++ Optimizing Compiler Version 19.29.30138 for x64), I get the following output with either C++17 or C++20 (the first line is from `DecodeWithPointers`, the second from `DecodeWithVars`):

```
AAAAAAAAAAAAAAABBBBBBBC,.,.,.,.,.,.CCCCCDDDDDDEEEEE
AAAAAAAAAAAAAAA*ADDDDDDE*.,.,.,.,.,.*,,,,.*ADDDDEEE
```

GCC (C++20, run on Coliru) gave:

```
AAAAAAAAAAAAAAABBBBBBBC,.,.,.,.,.,.CCCCCDDDDDDEEEEE
AAAAAAAAAAAAAAA�������C,.,.,.,.,.,.CCCCCDDDDDDEEEEE
```

Clang (C++17, also on Coliru) gave the same result:

```
AAAAAAAAAAAAAAABBBBBBBC,.,.,.,.,.,.CCCCCDDDDDDEEEEE
AAAAAAAAAAAAAAA�������C,.,.,.,.,.,.CCCCCDDDDDDEEEEE
```
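To compare the garbled outputs against what `tree` should produce, the bit code of each leaf can be listed by walking the tree. This is a minimal sketch, assuming the same `TreeNode` layout and the decoders' convention that bit 0 selects `children[0]` and bit 1 selects `children[1]`; `CollectCodes` is a hypothetical helper. For `tree` it yields A = 00, B = 010, C = 011, D = 100, ',' = 101, '.' = 110, E = 111.

```cpp
#include <map>
#include <string>
#include <vector>

// Same node layout as in the question's code.
struct TreeNode {
    char symbol;                     // Only relevant to leaf nodes
    std::vector<TreeNode> children;  // 0 children for leaves, exactly 2 otherwise

    TreeNode(unsigned char symbol) : symbol(symbol) { }
    TreeNode(unsigned char symbol, TreeNode left, TreeNode right)
        : symbol(symbol), children{ left, right } { }
};

// Records the bit path from the root to every leaf ("0" = children[0], "1" = children[1]).
void CollectCodes(const TreeNode& node, const std::string& prefix,
                  std::map<char, std::string>& codes) {
    if (node.children.empty()) {  // leaf: the path so far is its code
        codes[node.symbol] = prefix;
        return;
    }
    CollectCodes(node.children[0], prefix + "0", codes);
    CollectCodes(node.children[1], prefix + "1", codes);
}
```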