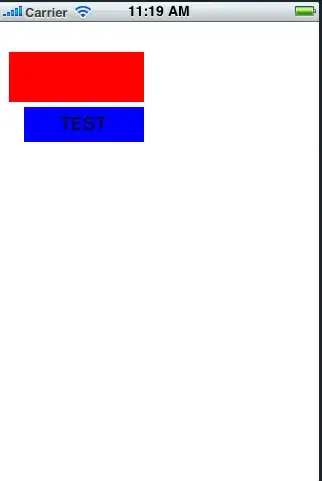

I have a depth map encoded in 24 bits (the image labeled "Original") and decode it with the code below:

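```python
import cv2 as cv

# Read the 24-bit depth image; OpenCV returns uint8 channels in BGR order
carla_img = cv.imread('carla_deep.png', flags=cv.IMREAD_COLOR)
carla_img = carla_img[:, :, :3]                  # keep 3 channels (IMREAD_COLOR already does)
carla_img = carla_img[:, :, ::-1].astype(float)  # BGR -> RGB, as float to avoid uint8 overflow

# CARLA: normalized = (R + G*256 + B*256*256) / (256^3 - 1), depth = 1000 * normalized
gray_depth = ((carla_img[:,:,0] + carla_img[:,:,1] * 256.0 + carla_img[:,:,2] * 256.0 * 256.0)/((256.0 * 256.0 * 256.0) - 1))
gray_depth = gray_depth * 1000  # depth in meters
```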

This converts it into the image labeled "Converted", following the depth-camera decoding formula in the CARLA sensor reference: https://carla.readthedocs.io/en/latest/ref_sensors/
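For reference, the decoding step is the formula from the CARLA sensor documentation, where R, G and B are the 8-bit channel values and 1000 m is the scale used by CARLA:

$$
\text{normalized} = \frac{R + 256\,G + 256^2\,B}{256^3 - 1}, \qquad D_{\text{m}} = 1000 \cdot \text{normalized}
$$

Reading the weights: one step in R is $1000/(256^3-1) \approx 0.06$ mm, one step in G is 256 times that ($\approx 1.53$ cm), and one step in B is $\approx 3.91$ m, so the pixel $(R, G, B) = (0, 0, 1)$ decodes to about 3.906 m.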

How can I reverse this process (without any large external libraries, i.e. using at most OpenCV)? In Python I create a depth map with OpenCV, and I want to save it in CARLA's 24-bit format.

This is how I create the depth map:

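```python
import math
import cv2 as cv
import numpy as np

imgL = cv.imread('leftImg.png', cv.IMREAD_GRAYSCALE)
imgR = cv.imread('rightImg.png', cv.IMREAD_GRAYSCALE)
stereo = cv.StereoBM_create(numDisparities=128, blockSize=17)
# StereoBM returns int16 disparities with 4 fractional bits, so divide by 16
disparity = stereo.compute(imgL, imgR).astype(np.float32) / 16.0
CameraFOV = 120                   # horizontal FOV in degrees
width = imgL.shape[1]             # image width in pixels
Focus_length = width / (2 * math.tan(CameraFOV * math.pi / 360))
camerasBaseline = 0.3             # meters
depthMap = np.zeros_like(disparity)  # invalid pixels (disparity <= 0) stay 0
valid = disparity > 0
depthMap[valid] = (camerasBaseline * Focus_length) / disparity[valid]
```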
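The last lines apply the pinhole-camera relations below, where $W$ is the image width in pixels, $B$ the baseline in meters and $d$ the disparity in pixels (OpenCV's StereoBM reports disparity multiplied by 16, so $d$ is its raw output divided by 16):

$$
f = \frac{W}{2\tan(\mathrm{FOV}/2)}, \qquad Z = \frac{B\,f}{d}
$$

As a worked example under an assumed width of $W = 800$ px (the question does not state it): with $\mathrm{FOV} = 120^\circ$, $f = 800 / (2\tan 60^\circ) \approx 230.9$ px, and with $B = 0.3$ m a disparity of $d = 10$ px gives $Z \approx 0.3 \cdot 230.9 / 10 \approx 6.93$ m.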

How can I save the obtained depth map in the same form as in the picture marked "Original"?
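One possible way to reverse the decoding is sketched below, assuming the depth map is a float array in meters and that CARLA's 1000 m scale applies. The depth is normalized, rounded to an integer $n \in [0, 256^3 - 1]$, and split into bytes: $R = n \bmod 256$, $G = \lfloor n/256 \rfloor \bmod 256$, $B = \lfloor n/256^2 \rfloor$. The names `encode_carla_depth`, `decode_carla_depth` and `FAR_PLANE_M` are hypothetical, and mapping NaN to 0 m and +inf to 1000 m is an assumption. Only NumPy (which OpenCV's Python bindings already require) is used; the result is in OpenCV's BGR channel order.

```python
import numpy as np

FAR_PLANE_M = 1000.0       # scale from CARLA's formula: depth = 1000 * normalized
MAX_24BIT = 256**3 - 1     # 16777215


def encode_carla_depth(depth_m, far_m=FAR_PLANE_M):
    """Pack a float depth map in meters into a CARLA-style 24-bit image (BGR, uint8)."""
    depth = np.asarray(depth_m, dtype=np.float64)
    # Assumption: NaN -> 0 m, +inf (e.g. zero disparity) -> far plane;
    # the clip below maps negatives to 0 and anything beyond far_m to far_m
    depth = np.nan_to_num(depth, nan=0.0, posinf=far_m, neginf=0.0)
    normalized = np.clip(depth / far_m, 0.0, 1.0)
    n = np.rint(normalized * MAX_24BIT).astype(np.uint32)
    r = (n & 0xFF).astype(np.uint8)           # least significant byte
    g = ((n >> 8) & 0xFF).astype(np.uint8)
    b = ((n >> 16) & 0xFF).astype(np.uint8)   # most significant byte
    # Stack in BGR order, which is what cv.imwrite expects
    return np.dstack((b, g, r))


def decode_carla_depth(bgr, far_m=FAR_PLANE_M):
    """Inverse of encode_carla_depth, using the same formula as the decoding code."""
    rgb = bgr[:, :, ::-1].astype(np.float64)  # BGR -> RGB
    normalized = (rgb[:, :, 0] + rgb[:, :, 1] * 256.0
                  + rgb[:, :, 2] * 256.0 * 256.0) / MAX_24BIT
    return normalized * far_m
```

With the depth map from the stereo code, saving would then be `cv.imwrite('depth_carla.png', encode_carla_depth(depthMap))`. A lossless format such as PNG is needed, since JPEG compression would corrupt the packed bytes. Depths beyond 1000 m are clipped, and the round trip is exact only up to the quantization step of about 0.06 mm.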