At our company, we orchestrate Databricks notebook runs with Azure Data Factory (ADF). Through experimentation, we learned how to connect our notebooks (which live in a Git repository) to ADF pipelines. However, there is an issue.

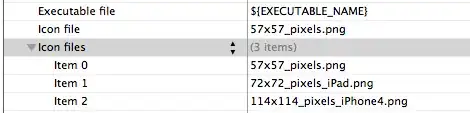

As you can see in the screenshot above, the path to the notebook depends on the employee's username, which is not a stable solution for production.

What solution(s) are there for this?

- Update: The main issue is keeping the employee username out of production to avoid future failures. It should not appear in the notebook path configured in ADF, nor in a secondary storage location that a Lookup activity can read but that still sits on the production side.