I am loading my training images into a PyTorch `DataLoader`, and I need to calculate the per-channel mean and standard deviation of the input images. The calculation is taken directly from https://kozodoi.me/python/deep%20learning/pytorch/tutorial/2021/03/08/image-mean-std.html.

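```python
import torch
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# train_dir and img_size are defined elsewhere. img_size is an (h, w) tuple, so Resize
# gives every image exactly h * w pixels (an int would only match the shorter edge).
T = transforms.Compose([
    transforms.Resize(img_size),
    transforms.ToTensor(),
])

dataset = datasets.ImageFolder(train_dir, T)
image_loader = DataLoader(dataset=dataset, batch_size=1, shuffle=True, drop_last=True)

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Per-channel running sums of pixel values and of squared pixel values
psum = torch.tensor([0.0, 0.0, 0.0]).to(device)
psum_sq = torch.tensor([0.0, 0.0, 0.0]).to(device)

for image, label in image_loader:
    image = image.to(device)
    # Sum over batch, height and width; keep the channel dimension
    psum += image.sum(dim=[0, 2, 3])
    psum_sq += (image ** 2).sum(dim=[0, 2, 3])

# Total number of pixels per channel
count = len(image_loader.dataset) * img_size[0] * img_size[1]

total_mean = psum / count
total_var = (psum_sq / count) - (total_mean ** 2)
total_std = torch.sqrt(total_var)
```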
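A quick sanity check of the formula, written in NumPy on random data (a hypothetical stand-in for the image tensors, so it runs without the dataset or a GPU). It assumes, as above, that every image has the same height and width; the running sums should then give the same per-channel mean and standard deviation as computing them directly over all pixels.

```python
import numpy as np

# Hypothetical stand-in for the dataset: 10 RGB "images" of size 8x6.
rng = np.random.default_rng(42)
images = rng.random((10, 3, 8, 6))

# Streaming accumulation, mirroring psum / psum_sq in the loop above.
psum = np.zeros(3)
psum_sq = np.zeros(3)
for image in images:
    image = image[None]  # add a batch dimension -> (1, C, H, W), like batch_size=1
    psum += image.sum(axis=(0, 2, 3))
    psum_sq += (image ** 2).sum(axis=(0, 2, 3))

# Pixels per channel: number of images * height * width
count = images.shape[0] * images.shape[2] * images.shape[3]
total_mean = psum / count
total_var = psum_sq / count - total_mean ** 2
total_std = np.sqrt(total_var)
```

This formula yields the population (`ddof=0`) standard deviation. Because it subtracts two nearly equal quantities, float32 accumulators summed over millions of pixels can lose precision; accumulating in float64 is one way to reduce that.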

Profiling revealed that the `for` loop is the bottleneck. How can I parallelize these operations? I looked into Dask's `delayed` and came up with something like this:

```python
from dask import delayed

# calculate_* are helper functions wrapping the steps above (definitions omitted)
for image, label in image_loader:
    image = image.to(device)
    psum += delayed(calculate_psum)(image)
    psum_sq += delayed(calculate_psum_sq)(image)

count = delayed(calculate_count)(image_loader, img_size)
total_mean = delayed(calculate_mean)(psum, count)
total_var = delayed(calculate_var)(psum_sq, count, total_mean)
total_std = delayed(calculate_std)(total_var)
```

How should I parallelize each operation, and where should I call `compute()`? I noticed that the `total_*` values depend on one another. Is that the part that cannot be parallelized?
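Since the loop only accumulates sums, the work can be split up. The sketch below writes $S_c$, $Q_c$ and $N$ for `psum`, `psum_sq` and `count` of channel $c$ (notation introduced here for illustration), and assumes a hypothetical split of the pixels into $K$ disjoint chunks $B_1, \dots, B_K$. Each chunk's partial sums depend only on that chunk, so they can be computed in parallel; only the final combination and the mean/std step depend on everything before them, and that tail is a handful of per-channel operations.

```latex
S_c^{(k)} = \sum_{x \in B_k} x_c, \qquad
Q_c^{(k)} = \sum_{x \in B_k} x_c^2, \qquad
N^{(k)} = |B_k|

S_c = \sum_{k=1}^{K} S_c^{(k)}, \qquad
Q_c = \sum_{k=1}^{K} Q_c^{(k)}, \qquad
N = \sum_{k=1}^{K} N^{(k)}

\mu_c = \frac{S_c}{N}, \qquad
\sigma_c^2 = \frac{Q_c}{N} - \mu_c^2, \qquad
\sigma_c = \sqrt{\sigma_c^2}
```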

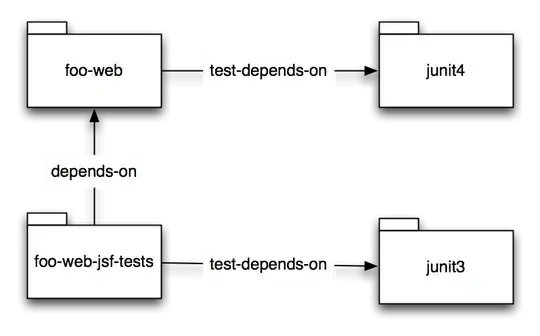

UPDATE: Here is a computation graph to see which part is easier to parallelize.
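A minimal sketch of a map-reduce structure with `dask.delayed`, using NumPy arrays as a hypothetical stand-in for already-loaded image chunks (`partial_stats`, `combine` and `finalize` are illustrative names, not part of any library). Each chunk becomes one independent task, the chunk results are combined in a single task, and `compute()` is called once on the final node rather than inside the loop.

```python
import numpy as np
from dask import delayed


def partial_stats(batch):
    """Per-channel sum, sum of squares and pixel count for one (N, C, H, W) chunk."""
    total = batch.sum(axis=(0, 2, 3))
    total_sq = (batch ** 2).sum(axis=(0, 2, 3))
    n_pixels = batch.shape[0] * batch.shape[2] * batch.shape[3]
    return total, total_sq, n_pixels


def combine(parts):
    """Add up the (sum, sum_sq, count) tuples from every chunk."""
    psum = sum(p[0] for p in parts)
    psum_sq = sum(p[1] for p in parts)
    count = sum(p[2] for p in parts)
    return psum, psum_sq, count


def finalize(totals):
    """Turn the global totals into per-channel mean and std."""
    psum, psum_sq, count = totals
    mean = psum / count
    var = psum_sq / count - mean ** 2
    return mean, np.sqrt(var)


# Hypothetical stand-in data: 8 chunks, each holding 4 RGB images of 32x32.
rng = np.random.default_rng(0)
chunks = [rng.random((4, 3, 32, 32)) for _ in range(8)]

# Build the graph lazily; nothing is computed yet.
parts = [delayed(partial_stats)(chunk) for chunk in chunks]  # independent tasks
totals = delayed(combine)(parts)   # waits for every chunk
stats = delayed(finalize)(totals)  # small sequential tail

# A single compute() on the final node runs the whole graph.
total_mean, total_std = stats.compute()
```

One caveat about the real setup: in the loop above, the `DataLoader` still decodes images one at a time before any delayed task is created, so if decoding is the expensive part, each task would likely need to load its own subset of files for Dask to help. Staying within PyTorch, raising `batch_size` and setting `num_workers` on the `DataLoader` is another way to spread the loading across worker processes.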