I'm a student learning CUDA, and I'm trying to write a CUDA kernel for counting sort:

    __global__ void kernelCountingSort(int *array, int dim_array, int *counts) {
        // define index
        int i = blockIdx.x * blockDim.x + threadIdx.x;
        int count = 0;

        // check that the thread is not out of the vector boundary
        if (i >= dim_array) return;

        // count the elements that must come before array[i] in sorted order
        for (int j = 0; j < dim_array; ++j) {
            if (array[j] < array[i])
                count++;
            else if (array[j] == array[i] && j < i)
                count++;
        }

        // count is the final position of array[i] in the sorted output
        counts[count] = array[i];
    }

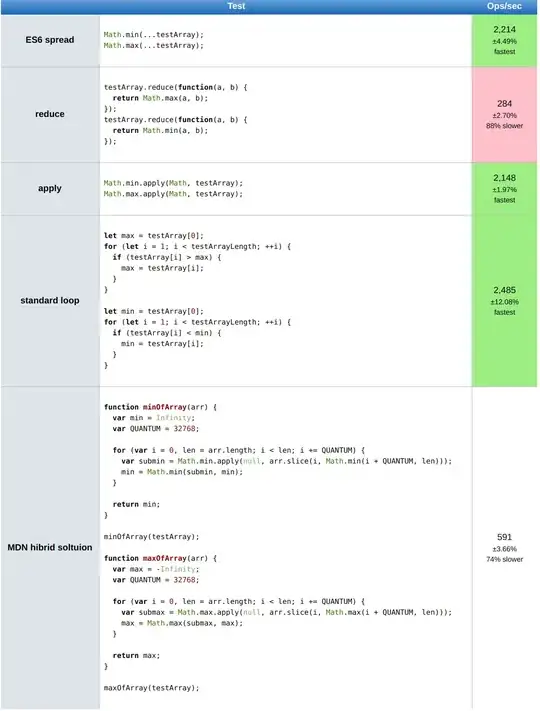

I tried to analyze my algorithm performances with increasing block size, that's the time graph with corrisponding block size:

With a block size of 64 I get 100% occupancy; however, I achieve the best performance (the minimum execution time) with a block size of 32. Is it possible to get better performance with lower occupancy?
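A minimal sketch of how such a measurement can be reproduced, not necessarily the setup used for the graph above. It repeats the kernel so that it compiles on its own, times one launch per block size with CUDA events, and reports the theoretical occupancy from `cudaOccupancyMaxActiveBlocksPerMultiprocessor`. The input size `n`, the random input values, and the list of block sizes are assumptions chosen only for illustration, and error checking is omitted for brevity.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Same rank-based kernel as above, repeated so this sketch is self-contained.
__global__ void kernelCountingSort(int *array, int dim_array, int *counts) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i >= dim_array) return;
    int count = 0;
    for (int j = 0; j < dim_array; ++j) {
        if (array[j] < array[i] || (array[j] == array[i] && j < i))
            count++;
    }
    counts[count] = array[i];
}

int main() {
    const int n = 1 << 14;  // assumed input size, for illustration only
    int *h_in = (int *)malloc(n * sizeof(int));
    for (int k = 0; k < n; ++k) h_in[k] = rand() % 1000;  // assumed test data

    int *d_in = nullptr, *d_out = nullptr;
    cudaMalloc(&d_in, n * sizeof(int));
    cudaMalloc(&d_out, n * sizeof(int));
    cudaMemcpy(d_in, h_in, n * sizeof(int), cudaMemcpyHostToDevice);

    cudaDeviceProp prop;
    cudaGetDeviceProperties(&prop, 0);

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);

    const int blockSizes[] = {32, 64, 128, 256, 512, 1024};  // assumed sweep
    for (int blockSize : blockSizes) {
        int gridSize = (n + blockSize - 1) / blockSize;  // round up

        // Theoretical occupancy: resident threads per SM / maximum threads per SM.
        int blocksPerSM = 0;
        cudaOccupancyMaxActiveBlocksPerMultiprocessor(
            &blocksPerSM, kernelCountingSort, blockSize, 0);
        float occupancy = (float)(blocksPerSM * blockSize) /
                          (float)prop.maxThreadsPerMultiProcessor;

        // Warm-up launch so one-time startup costs are not included in the timing.
        kernelCountingSort<<<gridSize, blockSize>>>(d_in, n, d_out);
        cudaDeviceSynchronize();

        // Timed launch, bracketed by events recorded on the default stream.
        cudaEventRecord(start);
        kernelCountingSort<<<gridSize, blockSize>>>(d_in, n, d_out);
        cudaEventRecord(stop);
        cudaEventSynchronize(stop);

        float ms = 0.0f;
        cudaEventElapsedTime(&ms, start, stop);
        printf("block size %4d: %8.3f ms, theoretical occupancy %5.1f%%\n",
               blockSize, ms, occupancy * 100.0f);
    }

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(d_in);
    cudaFree(d_out);
    free(h_in);
    return 0;
}
```

The occupancy printed here is the theoretical value derived from resource limits; the occupancy actually achieved at run time, as reported by a profiler, can differ from it.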