I am interfacing a QVGA sensor that streams YUV2-format data over USB to a host application on Windows. How can I use an opencv-python example application to stream or capture the raw YUV2 data? Is there a test example that does this?
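
This sketch assumes that "YUV2" means the packed YUY2 (YUYV 4:2:2) layout, a common uncompressed USB camera format. In that layout each pair of pixels shares one U and one V sample, so a pixel takes two bytes on average. A 340x240 frame is therefore 340 * 240 * 2 = 163200 bytes, which matches the 680-byte rows used later. The function names are hypothetical. The code computes the expected frame size and splits a raw YUY2 buffer into its Y, U and V samples using plain byte slicing.

```python
def yuy2_frame_size(width: int, height: int) -> int:
    """Bytes in one packed YUY2 frame: 2 bytes per pixel on average."""
    if width % 2:
        raise ValueError("YUY2 width must be even (pixels come in pairs)")
    return width * height * 2


def split_yuy2(raw: bytes, width: int, height: int):
    """Split a packed YUY2 buffer (Y0 U0 Y1 V0 ...) into Y, U and V byte strings.

    Y has width*height samples; U and V each have width*height // 2 samples
    (horizontal 4:2:2 subsampling).
    """
    expected = yuy2_frame_size(width, height)
    if len(raw) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(raw)}")
    y = raw[0::2]  # every even byte is a luma sample
    u = raw[1::4]  # byte 1 of each 4-byte macropixel
    v = raw[3::4]  # byte 3 of each 4-byte macropixel
    return y, u, v


if __name__ == "__main__":
    print(yuy2_frame_size(340, 240))  # 163200
    # One macropixel: Y0=10, U=20, Y1=30, V=40
    print(split_yuy2(bytes([10, 20, 30, 40]), 2, 1))
```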

Host application (opencv-python):

    import cv2
    import numpy as np

    # Open camera index 0 with the Media Foundation (MSMF) backend
    cap = cv2.VideoCapture(0, cv2.CAP_MSMF)

    # Set width and height
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, 340)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 240)

    # Set fps
    cap.set(cv2.CAP_PROP_FPS, 30)

    while True:
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:
            break

        # Display the resulting frame
        cv2.imshow('frame', frame)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # When everything is done, release the capture
    cap.release()
    cv2.destroyAllWindows()
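
With the default settings (CAP_PROP_CONVERT_RGB enabled), the backend converts the camera data to 3-channel BGR before cap.read() returns. The frame above is therefore already decoded. The sketch below makes that conversion concrete by turning one YUY2 macropixel into two BGR pixels. It uses the common BT.601 limited-range integer formulas. That colour matrix is an assumption: OpenCV or Media Foundation may use slightly different coefficients or rounding. The function names are hypothetical.

```python
def _clip(x: int) -> int:
    """Clamp an integer to the 0..255 byte range."""
    return 0 if x < 0 else 255 if x > 255 else x


def yuv_to_bgr(y: int, u: int, v: int) -> tuple:
    """Convert one BT.601 limited-range YUV sample to a (B, G, R) tuple."""
    c, d, e = y - 16, u - 128, v - 128
    r = _clip((298 * c + 409 * e + 128) >> 8)
    g = _clip((298 * c - 100 * d - 208 * e + 128) >> 8)
    b = _clip((298 * c + 516 * d + 128) >> 8)
    return (b, g, r)


def yuy2_macropixel_to_bgr(y0: int, u: int, y1: int, v: int):
    """Both pixels of a YUY2 macropixel share the same U and V samples."""
    return yuv_to_bgr(y0, u, v), yuv_to_bgr(y1, u, v)


if __name__ == "__main__":
    # Y=16 is black and Y=235 is white in limited range (U=V=128 means no colour)
    print(yuy2_macropixel_to_bgr(16, 128, 235, 128))  # ((0, 0, 0), (255, 255, 255))
```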

Code sample for grabbing video frames without decoding:

    import cv2
    import numpy as np

    # Open camera index 0
    # -------> Try replacing cv2.CAP_MSMF with cv2.CAP_FFMPEG:
    cap = cv2.VideoCapture(0, cv2.CAP_FFMPEG)

    # Set width and height
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, 340)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 240)

    # Set fps
    cap.set(cv2.CAP_PROP_FPS, 30)

    # Fetch undecoded RAW video streams
    cap.set(cv2.CAP_PROP_FORMAT, -1)  # Format of the Mat objects. Set value -1 to fetch undecoded RAW video streams (as Mat 8UC1)

    for i in range(10):
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:
            break
        print('frame.shape = {} frame.dtype = {}'.format(frame.shape, frame.dtype))

    cap.release()
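
With CAP_PROP_FORMAT set to -1, the frame comes back as an 8UC1 array of undecoded bytes. Its shape depends on the backend and is often a single row such as (1, N). A quick sanity check is to compare the total byte count (frame.size in NumPy) with the requested resolution. For 340x240, 163200 bytes means 2 bytes per pixel, which fits both YUY2 and 16-bit data. The helper below is a hypothetical sketch of that check. The format guesses it returns are heuristics, not something OpenCV reports.

```python
def bytes_per_pixel(total_bytes: int, width: int, height: int) -> float:
    """Estimate bytes per pixel of a raw frame from its total byte count."""
    pixels = width * height
    if pixels <= 0:
        raise ValueError("width and height must be positive")
    return total_bytes / pixels


def describe_raw_frame(total_bytes: int, width: int, height: int) -> str:
    """Give a rough, assumption-based guess of what the raw bytes contain."""
    bpp = bytes_per_pixel(total_bytes, width, height)
    if bpp == 2:
        return "2 bytes/pixel: packed YUY2 or 16-bit (Y16) samples"
    if bpp == 3:
        return "3 bytes/pixel: likely already-decoded BGR"
    if bpp == 1.5:
        return "1.5 bytes/pixel: planar 4:2:0 such as NV12"
    return f"{bpp:g} bytes/pixel: unexpected, check the requested resolution"


if __name__ == "__main__":
    # frame.size of a 340x240 raw frame
    print(describe_raw_frame(163200, 340, 240))
```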

If cv2.CAP_FFMPEG does not work, try the following code sample with the MSMF backend:

    import cv2
    import numpy as np

    # Open camera index 0
    cap = cv2.VideoCapture(0, cv2.CAP_MSMF)

    # Set width and height
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, 340)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 240)

    # Set fps
    cap.set(cv2.CAP_PROP_FPS, 30)

    # -----> Try setting FOURCC and disabling RGB conversion:
    #########################################################
    cap.set(cv2.CAP_PROP_FOURCC, cv2.VideoWriter.fourcc('Y', '1', '6', ' '))
    cap.set(cv2.CAP_PROP_CONVERT_RGB, 0)
    #########################################################

    # Fetch undecoded RAW video streams
    cap.set(cv2.CAP_PROP_FORMAT, -1)  # Format of the Mat objects. Set value -1 to fetch undecoded RAW video streams (as Mat 8UC1)

    for i in range(10):
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:
            break
        print('frame.shape = {} frame.dtype = {}'.format(frame.shape, frame.dtype))

    cap.release()
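
cv2.VideoWriter.fourcc packs four characters into one 32-bit integer, with the first character in the lowest byte. cap.get(cv2.CAP_PROP_FOURCC) returns such a code as a float. Decoding that value after the cap.set calls shows whether the driver actually accepted 'Y16 ' or stayed on another format such as 'YUY2'. The pure-Python functions below are a sketch that mirrors that packing. Their names are hypothetical and they are not part of OpenCV.

```python
def fourcc_code(c1: str, c2: str, c3: str, c4: str) -> int:
    """Pack four characters into a FOURCC integer (first char in the lowest byte)."""
    return ord(c1) | (ord(c2) << 8) | (ord(c3) << 16) | (ord(c4) << 24)


def fourcc_to_str(code: float) -> str:
    """Decode a FOURCC code, e.g. the float returned by cap.get(CAP_PROP_FOURCC)."""
    value = int(code)
    return "".join(chr((value >> (8 * i)) & 0xFF) for i in range(4))


if __name__ == "__main__":
    print(hex(fourcc_code('Y', '1', '6', ' ')))  # 0x20363159
    print(fourcc_to_str(float(fourcc_code(*"YUY2"))))  # YUY2
```

In the capture code, the check would be `print(fourcc_to_str(cap.get(cv2.CAP_PROP_FOURCC)))`, placed after the `cap.set` calls.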

Reshape the uint8 frame to 240 rows by 680 columns (340 pixels x 2 bytes per pixel) and save it as img.png:

    import cv2
    import numpy as np

    # Open camera index 0
    cap = cv2.VideoCapture(0, cv2.CAP_MSMF)

    # Set width, height and fps
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, 340)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 240)
    cap.set(cv2.CAP_PROP_FPS, 30)

    # Disable the conversion to BGR by setting FOURCC to Y16 and `CAP_PROP_CONVERT_RGB` to 0.
    cap.set(cv2.CAP_PROP_FOURCC, cv2.VideoWriter.fourcc('Y', '1', '6', ' '))
    cap.set(cv2.CAP_PROP_CONVERT_RGB, 0)

    # Fetch undecoded RAW video streams
    cap.set(cv2.CAP_PROP_FORMAT, -1)  # Format of the Mat objects. Set value -1 to fetch undecoded RAW video streams (as Mat 8UC1)

    for i in range(10):
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:
            break

        # Each row holds 340 pixels x 2 bytes = 680 bytes
        cols = 340*2
        rows = 240
        img = frame.reshape(rows, cols)
        cv2.imwrite('img.png', img)  # Overwritten on every iteration, so the last frame is kept

    cap.release()
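
img.png stores the raw bytes as an 8-bit image 680 columns wide, so each pixel appears as two adjacent grey values. If the sensor actually sends 16-bit samples, as the Y16 FOURCC request assumes, each pair of bytes is one value. In little-endian order the low byte comes first. The sketch below assumes little-endian 16-bit pixels and decodes 680-byte rows into 340 values with the standard struct module. The helper names are hypothetical. With NumPy, `frame.view('<u2').reshape(240, 340)` on a contiguous uint8 frame should give a roughly equivalent array.

```python
import struct


def decode_row_le16(row: bytes) -> list:
    """Decode a row of raw bytes into unsigned 16-bit little-endian pixel values."""
    if len(row) % 2:
        raise ValueError("a 16-bit row needs an even number of bytes")
    count = len(row) // 2
    return list(struct.unpack(f"<{count}H", row))


def decode_frame_le16(raw: bytes, width: int = 340, height: int = 240) -> list:
    """Split a raw frame into `height` rows of `width` 16-bit pixels."""
    row_bytes = width * 2  # 680 bytes per row for width 340
    if len(raw) != row_bytes * height:
        raise ValueError(f"expected {row_bytes * height} bytes, got {len(raw)}")
    return [decode_row_le16(raw[r * row_bytes:(r + 1) * row_bytes]) for r in range(height)]


if __name__ == "__main__":
    # Bytes 0x34 0x12 form the little-endian value 0x1234 = 4660
    print(decode_row_le16(bytes([0x34, 0x12, 0xFF, 0x00])))  # [4660, 255]
```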

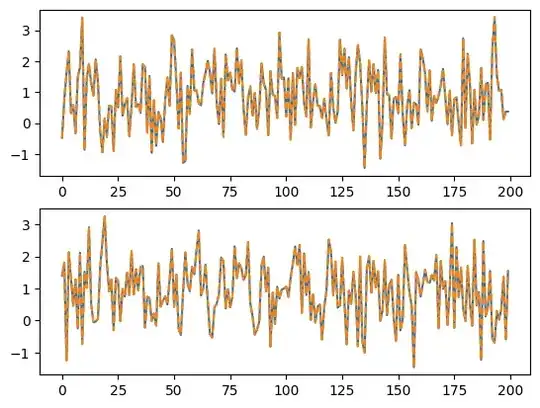

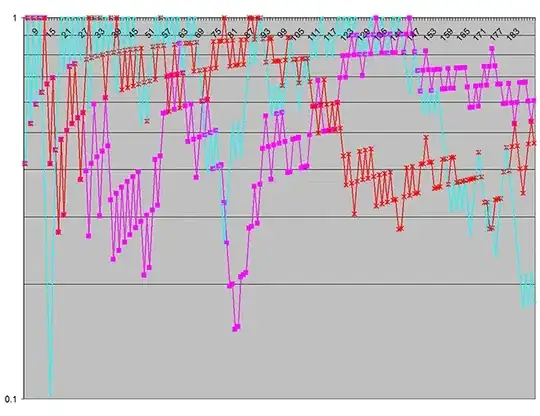

Further test images (captions only, images not included): hot object present (img1.png); processed image (hot object); little-endian test; test image captured with CAP_DSHOW; test image saved with CAP_DSHOW; 680x240 captures hand.png and hand1.png; fing preview; fing.png.
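
16-bit sensor values often occupy only a narrow part of the 0..65535 range, so saving them directly tends to produce an almost uniformly dark image. Stretching the actual min..max range onto 0..255 is one common way to make features such as a hot object visible. This is a sketch of a linear min-max stretch on a list of decoded values. The function name is hypothetical, and the sketch does not claim to reproduce how the processed image above was made. In OpenCV, `cv2.normalize` with `cv2.NORM_MINMAX` does a similar job on arrays.

```python
def stretch_to_8bit(values: list) -> list:
    """Linearly map the min..max range of `values` onto 0..255 (rounded to ints)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)  # flat input: nothing to stretch
    scale = 255 / (hi - lo)
    return [round((v - lo) * scale) for v in values]


if __name__ == "__main__":
    # Made-up readings: a cooler background and a warmer object
    print(stretch_to_8bit([29000, 29500, 30020]))  # [0, 125, 255]
```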