The purpose of this answer is two-fold:

- Demonstrate the (a?) proper postgres-style upsert action. I present a

pg_upsert function, and in that function I've included (prefixed with #'#) what the query looks like when finished. The query is formed dynamically, so does not need a priori knowledge of the fields other than the user-provided idfields= argument.

- Demonstrate how to react to

DT-edits using this function. This is one way and there are definitely other ways to formulate how to deal with the reactive DT. If you have a different style for keeping track of changes in the DT, then feel free to take pg_upsert and run with it!

Notes:

it does not update the database with each cell edit, the changes are "batched" until the user clicks the Upsert! button; it is feasible to change to "upsert on each cell", but that would be a relatively trivial query, no need for upserts

since you're using postgres, the target table must have one or more unique indices (see No unique or exclusion constraint matching the ON CONFLICT); I'll create the sample data and the index on said table; if you don't understand what this means and your data doesn't have a clear "id" field(s), then do what I did: add an id column (both locally and in the db) that sequences along your real rows (this won't work if your data is preexisting and has no id fields)

the id field(s) must not be editable, so the editable= part of DT disables changing that column; I included a query (found in https://stackoverflow.com/a/2213199/3358272) that will tell you these fields programmatically; if this returns nothing, then go back to the previous bullet and fix it

the pg_upsert function takes a few steps to ensure things are clean (i.e., checks for duplicate ids), but does not check for incorrect new-values (DT does some of this for you, by class I believe), I'll assume you are verifying what you need before sending for an upsert;

the return value from pg_upsert is logical, indicating that the upsert action updated as many rows as we expected; this might be overly aggressive, though I cannot think of an example when it would correctly return other than nrow(value); caveat emptor

I include an optional "dbout" table in the shiny layout solely to show the current state of the database data, updated every time pg_upsert is called (indirectly); if no changes have been made, it will still query to show the current state, and is therefore the best way to show the starting condition for your testing; again, it is optional. When you remove it (and you should) and nothing else uses the do_update() reactive, then change

do_update <- eventReactive(input$upbtn, ...)

output$dbout <- renderTable({ do_update(); ... })

to

observeEvent(input$upbtn, ...)

# output$dbout <- renderTable({ do_update(); ... })

(Otherwise, a reactive(.) block that is never used downstream will never fire, so your updates would not happen.)

This app queries the database for all values (into curdata), this is likely already being done in your case. This app also finds (programmatically) the required indices. If you know ahead of time what these are, feel free to drop the query that feeds idfields and just assign it directly (case-sensitive).

When the app exits, the user-edited data is not stored in the local R console/environment, all changes are stored in the database. It's my assumption that this will be formalized into a shiny-server, RStudio Connect, or similar production server, in which case "console" has little meaning. If you really need the user-changed data to be available on the local R console while you are developing your app, then in addition to using mydata reactive values, after mydata$data is reassigned you can overwrite curdata <<- mydata$data (note the double < in <<-). I discourage this practice in production but it might be useful while in development.

Here is a setup for sample data. It doesn't matter if you have 6 (as here) or 60 columns, the premise remains. (After this, origdata is not used, it was a throw-away to prep for this answer.)

# pgcon <- DBI::dbConnect(...)

set.seed(42)

origdata <- iris[sample(nrow(iris), 6),]

origdata$id <- seq_len(nrow(origdata))

# setup for this answer

DBI::dbExecute(pgcon, "drop table if exists mydata")

DBI::dbWriteTable(pgcon, "mydata", origdata)

# postgres upserts require 'unique' index on 'id'

DBI::dbExecute(pgcon, "create unique index mydata_id_idx on mydata (id)")

Here is the UPSERT function itself, broken out to facilitate testing, console evaluation, and similar.

#' @param value 'data.frame', values to be updated, does not need to

#' include all columns in the database

#' @param name 'character', the table name to receive the updated

#' values

#' @param idfields 'character', one or more id fields that are present

#' in both the 'value' and the database table, these cannot change

#' @param con database connection object, from [DBI::dbConnect()]

#' @param verbose 'logical', be verbose about operation, default true

#' @return logical, whether 'nrow(value)' rows were affected; if an

#' error occurred, it is messaged to the console and a `FALSE` is

#' returned

pg_upsert <- function(value, name, idfields, con = NULL, verbose = TRUE) {

if (verbose) message(Sys.time(), " upsert ", name, " with ", nrow(value), " rows")

if (any(duplicated(value[idfields]))) {

message("'value' contains duplicates in the idfields, upsert will not work")

return(FALSE)

}

tmptable <- paste(c("uptemp_", name, "_", sample(1e6, size = 1)), collapse = "")

on.exit({

DBI::dbExecute(con, paste("drop table if exists", tmptable))

}, add = TRUE)

DBI::dbWriteTable(con, tmptable, value)

cn <- colnames(value)

quotednms <- DBI::dbQuoteIdentifier(con, cn)

notid <- DBI::dbQuoteIdentifier(con, setdiff(cn, idfields))

qry <- sprintf(

"INSERT INTO %s ( %s )

SELECT %s FROM %s

ON CONFLICT ( %s ) DO

UPDATE SET %s",

name, paste(quotednms, collapse = " , "),

paste(quotednms, collapse = " , "), tmptable,

paste(DBI::dbQuoteIdentifier(con, idfields), collapse = " , "),

paste(paste(notid, paste0("EXCLUDED.", notid), sep = "="), collapse = " , "))

#'# INSERT INTO mydata ( "Sepal.Length" , "Petal.Length" )

#'# SELECT "Sepal.Length" , "Petal.Length" , "id" FROM mydata

#'# ON CONFLICT ( "id" ) DO

#'# UPDATE SET "Sepal.Length"=EXCLUDED."Sepal.Length" , "Petal.Length"=EXCLUDED."Petal.Length"

# dbExecute returns the number of rows affected, this ensures we

# return a logical "yes, all rows were updated" or "no, something

# went wrong"

res <- tryCatch(DBI::dbExecute(con, qry), error = function(e) e)

if (inherits(res, "error")) {

msg <- paste("error upserting data:", conditionMessage(res))

message(Sys.time(), " ", msg)

ret <- FALSE

attr(ret, "error") <- conditionMessage(res)

} else {

ret <- (res == nrow(value))

if (!ret) {

msg <- paste("expecting", nrow(value), "rows updated, returned", res, "rows updated")

message(Sys.time(), " ", msg)

attr(ret, "error") <- msg

}

}

ret

}

Here's the shiny app. When you source this, you can immediately press Upsert! to get the current state of the database table (again, only an option, not required for production), no updated values are needed to requery.

library(shiny)

library(DT)

pgcon <- DBI::dbConnect(...) # fix this incomplete expression

curdata <- DBI::dbGetQuery(pgcon, "select * from mydata order by id")

# if you don't know the idfield(s) offhand, then use this:

idfields <- DBI::dbGetQuery(pgcon, "

select

t.relname as table_name,

i.relname as index_name,

a.attname as column_name

from

pg_class t,

pg_class i,

pg_index ix,

pg_attribute a

where

t.oid = ix.indrelid

and i.oid = ix.indexrelid

and a.attrelid = t.oid

and a.attnum = ANY(ix.indkey)

and t.relkind = 'r'

and t.relname = 'mydata'

order by

t.relname,

i.relname;")

idfieldnums <- which(colnames(curdata) %in% idfields$column_name)

shinyApp(

ui = fluidPage(

DTOutput("tbl"),

actionButton("upbtn", "UPSERT!"),

tableOutput("dbout")

),

server = function(input, output) {

mydata <- reactiveValues(data = curdata, changes = NULL)

output$tbl = renderDT(

mydata$data, options = list(lengthChange = FALSE),

editable = list(target = "cell", disable = list(columns = idfields)))

observeEvent(input$tbl_cell_edit, {

mydata$data <- editData(mydata$data, input$tbl_cell_edit)

mydata$changes <- rbind(

if (!is.null(mydata$changes)) mydata$changes,

input$tbl_cell_edit

)

# keep the most recent change to the same cell

dupes <- rev(duplicated(mydata$changes[rev(seq(nrow(mydata$changes))),c("row","col")]))

mydata$changes <- mydata$changes[!dupes,]

message(Sys.time(), " pending changes: ", nrow(mydata$changes))

})

do_update <- eventReactive(input$upbtn, {

if (isTRUE(nrow(mydata$changes) > 0)) {

# always include the 'id' field(s)

# idcol <- which(colnames(mydata$data) == "id")

updateddata <- mydata$data[ mydata$changes$row, c(mydata$changes$col, idfieldnums) ]

res <- pg_upsert(updateddata, "mydata", idfields = "id", con = pgcon)

# clear the stored changes only if the upsert was successful

if (res) mydata$changes <- mydata$changes[0,]

}

input$upbtn

})

output$dbout <- renderTable({

do_update() # react when changes are attempted, the button is pressed

message(Sys.time(), " query 'mydata'")

DBI::dbGetQuery(pgcon, "select * from mydata order by id")

})

}

)

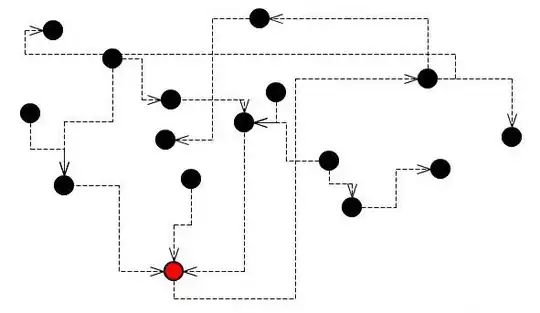

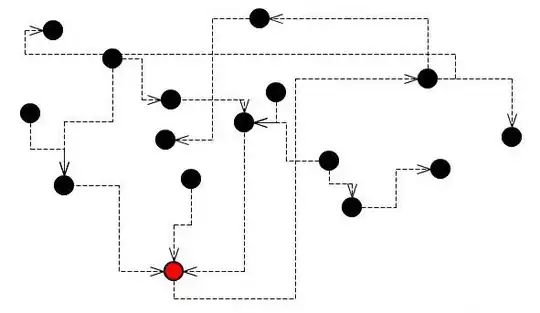

In action:

- (Left) When we start, we see the original

DT and no database output.

- (Middle) Press the

Upsert! button just to query the db and show the optional table.

- (Right) Make updates, then press

Upsert!, and the database is updated (and the lower table re-queried).