I have a brain MRI. It is grayscale with 20 slices, and I put it into a numpy array with shape (20,256,256). I use scipy.ndimage.affine_transform to rotate and resample the array, as shown below.
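For illustration only (this is not the code that produced the images in this post), here is a minimal sketch of a rotation that takes the voxel size into account. It assumes the rotation is defined in millimetres, that it tilts the slice planes (mixing axis 0 with axis 1), and that the output is resampled onto an isotropic grid at the finest input spacing. The helper name `rotate_physical` is hypothetical; `ndimage.affine_transform` maps each output index `o` to the input index `matrix @ o + offset`.

```python
import numpy as np
from scipy import ndimage


def rotate_physical(vol, spacing, angle_deg, out_spacing=None, order=1):
    """Hypothetical helper: rotate `vol` by `angle_deg` in millimetre space.

    The rotation mixes axis 0 (slices) with axis 1 (rows), i.e. it tilts the
    slice planes, which is where a large slice gap becomes visible.
    """
    spacing = np.asarray(spacing, dtype=float)
    if out_spacing is None:
        # Assumption: isotropic output grid at the finest input spacing
        out_spacing = np.full(3, spacing.min())
    out_spacing = np.asarray(out_spacing, dtype=float)

    # Output grid covers the same physical extent (mm) as the input
    extent = np.array(vol.shape) * spacing
    out_shape = tuple(int(round(e / sp)) for e, sp in zip(extent, out_spacing))

    t = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(t), np.sin(t)
    # Rotation in physical (mm) coordinates, acting on axes 0 and 1
    R = np.array([[cos_t, -sin_t, 0.0],
                  [sin_t, cos_t, 0.0],
                  [0.0, 0.0, 1.0]])

    # Output index -> mm, undo the rotation (R.T == R^-1), mm -> input index
    matrix = np.diag(1.0 / spacing) @ R.T @ np.diag(out_spacing)
    c_in = (np.array(vol.shape) - 1) / 2.0
    c_out = (np.array(out_shape) - 1) / 2.0
    offset = c_in - matrix @ c_out  # rotate about the volume centre

    return ndimage.affine_transform(vol, matrix, offset=offset,
                                    output_shape=out_shape, order=order)
```

For the volume described here, `rotate_physical(vol, (7.0, 0.85, 0.85), 10.0)` would return an array of shape (165, 256, 256), since 20 × 7 mm / 0.85 mm rounds to 165 output slices.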

The dark stripes in the image are the artifact I want to reduce. They are caused by the relatively large gap between slices: in this example the in-plane pixel spacing is 0.85 mm, but the distance between slices is 7 mm.
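To put those numbers in perspective, one slice step is 7 / 0.85 ≈ 8.2 in-plane pixels. A rough sketch, assuming the stripes line up with the points where a tilted output row passes from one slice into the next (skull curvature makes the real pattern less regular): moving d mm along a row tilted by θ relative to the slice planes changes the through-plane position by d·sin θ, so a new slice is reached every 7 / sin θ mm. The helper `slice_crossing_period_px` is hypothetical.

```python
import math


def slice_crossing_period_px(slice_gap_mm, pixel_mm, tilt_deg):
    """Hypothetical helper: approximate number of output pixels between
    successive slice crossings along a row tilted by `tilt_deg`."""
    # A new slice is reached every slice_gap_mm / sin(tilt) mm along the row;
    # dividing by pixel_mm converts that distance to output pixels.
    return slice_gap_mm / (pixel_mm * math.sin(math.radians(tilt_deg)))


print(round(7.0 / 0.85, 2))                                 # 8.24
print(round(slice_crossing_period_px(7.0, 0.85, 10.0), 1))  # 47.4
```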

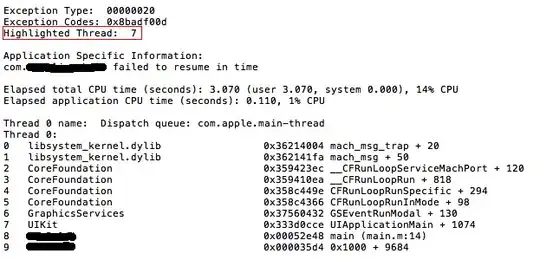

I tried changing the interpolation order of the affine transform, but even order=5 produces the same artifacts. Below is order=0 (nearest neighbor)...

and you can see how the curvature of the skull compounds the problem. Are there any tricks to fix this? Should I add dummy data between the slices to equalize the spacing? Should I use polar coordinates to eliminate the curvature? Any other ideas?
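A minimal sketch of the "equalize the spacing" idea, assuming the volume is first resampled onto an isotropic grid with `scipy.ndimage.zoom` and then rotated. The helper name `make_isotropic` is hypothetical. Note that the inserted slices are interpolated from their neighbours and contain no new information, so on its own this may not remove the stripes; it mainly changes where and how the interpolation happens.

```python
import numpy as np
from scipy import ndimage


def make_isotropic(vol, spacing, order=3):
    """Hypothetical helper: resample `vol` so every axis uses the finest spacing.

    Returns the resampled volume and its new (isotropic) spacing.
    """
    spacing = np.asarray(spacing, dtype=float)
    target = spacing.min()
    # Per-axis zoom factors, e.g. (7/0.85, 1, 1) for this MRI
    factors = spacing / target
    # zoom inserts interpolated samples along the slice axis; it cannot
    # recover anatomy that fell between the original slices
    return ndimage.zoom(vol, factors, order=order), np.full(3, target)
```

With the (20, 256, 256) array and spacing (7.0, 0.85, 0.85), this would give a (165, 256, 256) volume with 0.85 mm spacing on every axis.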