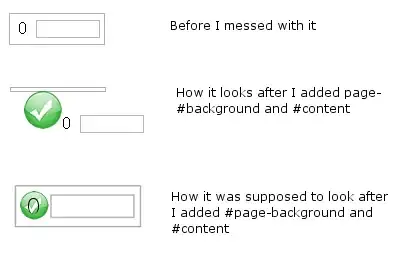

I'm working on a project in Julia that starts from an input beta which is assumed to be incorrect. The code iteratively updates this beta toward the correct value and checks the error at each step. As beta gets larger, I expect this error to reach 100%. The code ultimately minimizes a parameter chi, which is why I chose the `optimize` function from Optim.jl. The output I'm getting is below.

When I perform this calculation by hand (using the 1st and 2nd derivatives to update beta), I get this:

I see that this still has some numerical instability, but it holds up longer than the Optim version does. I would have expected the opposite. My `optimize` call is set up as:

    result = optimize(β -> TEfunc(E, nc, onecut, β, pcutoff, μcutoff, N),
                      β/2, 2.2*β, Brent();
                      abs_tol=tempcutoff, rel_tol=sqrt(tempcutoff))

    βstar = Optim.minimizer(result)
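To make the call above easier to debug, here is a minimal sketch of the same pattern with the result inspected before the minimizer is used. The objective `chi`, the starting value `β0`, and the tolerance `tol` are hypothetical stand-ins for `TEfunc`, `β`, and `tempcutoff`, not the real quantities. Because Brent's method only searches inside the bracket it is given, the sketch also checks whether the returned minimizer sits at an edge of `[β0/2, 2.2*β0]`, which would suggest the true minimum lies outside the bracket.

```julia
using Optim

# Hypothetical stand-in for TEfunc: a smooth 1-D objective with its minimum at β = 3.
chi(β) = (β - 3.0)^2 + 1.0

β0  = 2.0      # assumed starting guess, playing the role of β
tol = 1e-10    # assumed value, playing the role of tempcutoff

lower, upper = β0 / 2, 2.2 * β0
result = optimize(chi, lower, upper, Brent(); abs_tol = tol, rel_tol = sqrt(tol))

βstar = Optim.minimizer(result)

# Inspect the result before trusting βstar.
println("converged:  ", Optim.converged(result))
println("iterations: ", Optim.iterations(result))
println("minimizer:  ", βstar)
println("minimum:    ", Optim.minimum(result))

# Brent only searches inside [lower, upper]; a minimizer at an edge of the
# bracket suggests the true minimum is outside it.
at_edge = min(βstar - lower, upper - βstar) < 1e-6 * (upper - lower)
println("at bracket edge: ", at_edge)
```

If `converged` is false or `at_edge` is true for the real objective, the bracket or the tolerances are worth a look before suspecting the objective itself.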

Is there an argument that I'm missing in the optimize call? I just want to figure out why I have numerical instability so quickly.
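For comparison, the by-hand update mentioned earlier is roughly a Newton step on the objective. The sketch below is not the actual code: it uses a hypothetical stand-in objective `chi` and assumes the derivatives are taken by central finite differences with a step size `h`, which may differ from how they are computed in the real calculation.

```julia
# Hypothetical stand-in objective with its minimum at β = 3.
chi(β) = (β - 3.0)^2 + 0.1 * (β - 3.0)^4

# Central finite-difference approximations to the 1st and 2nd derivatives
# (an assumption; analytic derivatives would replace these).
d1(f, x, h) = (f(x + h) - f(x - h)) / (2 * h)
d2(f, x, h) = (f(x + h) - 2 * f(x) + f(x - h)) / h^2

# Newton iteration for a minimum: β ← β - f'(β) / f''(β).
function newton_min(f, β; h = 1e-4, tol = 1e-8, maxiter = 50)
    for _ in 1:maxiter
        step = d1(f, β, h) / d2(f, β, h)
        β -= step
        abs(step) < tol && break   # stop once the update is negligible
    end
    return β
end

βnewton = newton_min(chi, 2.0)
println("Newton estimate: ", βnewton)
```

Unlike the bracketed Brent search, this update is not confined to an interval, so the two approaches can behave differently when the minimum moves outside `[β/2, 2.2*β]`.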