Following up on your comment on the other question, here are some things you could try. Some combination of the ideas below should help.

Image Embedding and Vector Clustering

Manual

Use a pretrained network such as ResNet trained on ImageNet (may not work well) or a simple network pretrained on MNIST/EMNIST.

Extract the flattened outputs of a few layers toward the end of the network and concatenate them into one feature vector per image. Apply dimensionality reduction, then use nearest neighbor or approximate nearest neighbor algorithms to find the closest matches. If you cluster the vectors, set the number of clusters to 3, as you have 3 types of images.
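A minimal sketch of the reduce-then-cluster step, assuming scikit-learn is available. The `embeddings` array here is synthetic stand-in data, not real network features; in practice each row would be the feature vector extracted for one image. PCA, the 10 components and KMeans are illustrative choices, not the only options.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

# Stand-in for real embeddings: in practice each row would be the flattened
# output of late layers of the pretrained network for one image.
rng = np.random.default_rng(0)
centers = rng.normal(scale=10.0, size=(3, 512))  # 3 hypothetical image types
embeddings = np.vstack([c + rng.normal(size=(20, 512)) for c in centers])

# Reduce dimensionality before clustering (10 components is an arbitrary choice).
reduced = PCA(n_components=10, random_state=0).fit_transform(embeddings)

# Three clusters, one per image type.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
labels = kmeans.fit_predict(reduced)
```

With real images, inspect a few members of each cluster by eye to check that the clusters actually correspond to your 3 types.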

For nearest neighbor search, start with exact KNN. There are also many libraries on GitHub that may help, such as faiss and annoy.

More can be found at:

https://github.com/topics/nearest-neighbor-search

https://github.com/topics/approximate-nearest-neighbor-search
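As a starting point for the nearest neighbor step, here is a small sketch using scikit-learn's exact `NearestNeighbors`. The embeddings are random placeholders, and the cosine metric and `n_neighbors=5` are assumptions to tune. For large collections, an approximate library such as faiss or annoy would take its place.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

rng = np.random.default_rng(1)
embeddings = rng.normal(size=(100, 64))  # placeholder for real image embeddings

# Build an exact nearest-neighbor index over all embeddings.
index = NearestNeighbors(n_neighbors=5, metric="cosine").fit(embeddings)

# Query with the first image; its closest match is itself at distance ~0.
query = embeddings[:1]
distances, indices = index.kneighbors(query)
```

`indices[0]` lists the rows of `embeddings` closest to the query, nearest first.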

If the results of the above are not good enough, try fine-tuning only the last few layers of the MNIST/EMNIST-trained network.

Using Existing Libraries

For grouping/finding similar images, look into:

https://github.com/jina-ai/jina

You should be able to find more similarity and clustering projects under the neural-search and image-search topics on GitHub.

https://github.com/topics/neural-search

https://github.com/topics/image-search

OCR

- Try easyocr, as it worked better for me than Tesseract the last time I used OCR.

- Run it on the whole document first to see if it already meets your requirements.

- Avoid very tight cropping. If possible, leave some (or even large) padding around the text, as long as no other text is nearby. Alternatively, add padding in all directions around a tightly cropped text region and see if it improves the OCR result.

- For Tesseract, see if the tools mentioned in its documentation on improving output quality help.
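The padding suggestion above can be sketched with NumPy alone. `pad_crop` is a hypothetical helper; the 20-pixel border and the white fill value (255) are assumptions that suit dark text on a light background and would need adjusting for other documents.

```python
import numpy as np

def pad_crop(crop: np.ndarray, pad: int = 20, fill: int = 255) -> np.ndarray:
    """Surround a tightly cropped grayscale or colour image with a uniform border."""
    # Pad only the height and width axes; leave a channel axis (if any) alone.
    widths = [(pad, pad), (pad, pad)] + [(0, 0)] * (crop.ndim - 2)
    return np.pad(crop, widths, mode="constant", constant_values=fill)

crop = np.zeros((30, 100), dtype=np.uint8)  # hypothetical tight crop of a text region
padded = pad_crop(crop, pad=20)             # shape becomes (70, 140)
```

The padded array can then be passed to the OCR engine in place of the tight crop, so you can compare the two results.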

Classification

Noise Removal

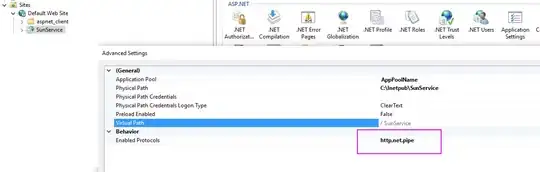

Here, too, you can go with a neural network. Train a denoising autoencoder on Category 1 images together with corrupted copies of them, created by adding noise that mimics Category 2 and Category 3 images. This way the network can learn to tell the 3 image categories apart without you having to create a dataset manually, and in post-processing you can use another neural network or an image processing method to remove the noise based on the category.

Image from https://keras.io/examples/vision/autoencoder/
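A rough sketch of how the corrupted training inputs for such a denoising autoencoder could be generated. The `corrupt` helper and its noise model (Gaussian noise plus random dark speckles) are assumptions for illustration only; the real corruption should imitate whatever distinguishes your Category 2 and Category 3 images.

```python
import numpy as np

def corrupt(clean: np.ndarray, rng: np.random.Generator,
            sigma: float = 0.2, speckle: float = 0.05) -> np.ndarray:
    """Return a noisy copy of images whose pixel values are scaled to [0, 1]."""
    # Additive Gaussian noise (creates a new array, so `clean` is untouched).
    noisy = clean + rng.normal(scale=sigma, size=clean.shape)
    # Random dark speckles on a fraction `speckle` of the pixels.
    mask = rng.random(clean.shape) < speckle
    noisy[mask] = 0.0
    return np.clip(noisy, 0.0, 1.0)

rng = np.random.default_rng(0)
clean = np.ones((8, 28, 28), dtype=np.float32)  # placeholder for Category 1 images
noisy = corrupt(clean, rng)
# (noisy, clean) pairs serve as inputs and targets when training the autoencoder.
```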

Try existing libraries or pretrained networks on GitHub to remove noise from the whole document or from the cropped region. Check whether rembg works on text documents.