I'm new to SwiftUI - not new to iOS development.

I have a lot of custom design/drawing to do and that seems particularly difficult in SwiftUI.

Please bear with me and prepare for a long one.

What's great about SwiftUI is that it's based on composition and you automagically get auto sizing and fitting to different devices.

But what troubles me is that as soon as you have to size things relative to each other, you quickly have to turn to GeometryReader. And GeometryReader seems to contradict the sizing concepts in SwiftUI.

Unlike Auto Layout constraints, where you can easily add relative constraints that depend on other views or superviews, SwiftUI doesn't seem to make this easy.

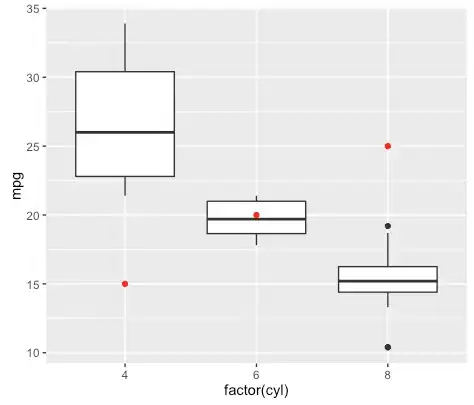

Let's say I want to add a view as an overlay on an image, making sure the overlay always has padding relative to the superview - like margins in percentages.

The topY, bottomY, leadingX and trailingX are relative to the way the super view resizes, so that the content view will always fit in the "frame".

(This is a simplified version - consider the "frame" to be more than just boxes.)

In Auto Layout I would just add the overlay and then constrain the overlay width to the superview width, with a multiplier. That way the padding of the overlay follows along as the superview resizes.
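For reference, the Auto Layout setup described above might look roughly like this in UIKit. This is only a sketch: `pinOverlay`, `overlay`, `container` and the 0.75 / 0.5 multipliers are illustrative names and values, not taken from a real project.

```swift
import UIKit

/// Hypothetical helper: centers `overlay` in `container` and sizes it as a
/// fraction of the container, so the margins scale as the container resizes.
func pinOverlay(_ overlay: UIView, in container: UIView,
                widthFactor: CGFloat = 0.75, heightFactor: CGFloat = 0.5) {
    overlay.translatesAutoresizingMaskIntoConstraints = false
    container.addSubview(overlay)
    NSLayoutConstraint.activate([
        overlay.centerXAnchor.constraint(equalTo: container.centerXAnchor),
        overlay.centerYAnchor.constraint(equalTo: container.centerYAnchor),
        // Relative sizing: the overlay follows the container via multipliers.
        overlay.widthAnchor.constraint(equalTo: container.widthAnchor, multiplier: widthFactor),
        overlay.heightAnchor.constraint(equalTo: container.heightAnchor, multiplier: heightFactor),
    ])
}
```

No absolute sizes appear anywhere; the layout engine resolves the actual frames whenever the container changes size.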

Doing this in SwiftUI means wrapping the base view in a GeometryReader (which by itself makes the view expand to fill all the space it is offered and changes the coordinate system). The issue at hand is that adding an overlay in SwiftUI works the same way as adding a view on top in UIKit. There are two different ways to make the overlay relative, and both involve absolute pixel coordinates - which is my main complaint here. As soon as you enter the world of GeometryReader, you enter the world of fixed, pixel-based positioning and lose all the good parts of the automatic fitting in SwiftUI.

So, to make the overlay padding relative to the superview I can either:

A) Add .padding with EdgeInsets(), where the edge insets are calculated as a factor of the superview size.

B) Use .offset() and .frame() to offset the position and size of the overlay by a calculated factor relative to the superview size.

This is pretty simple when just using rectangles:

    import SwiftUI

    struct RelativeView: View {
        var body: some View {
            GeometryReader { geo in
                Rectangle() // Superview
                    .foregroundColor(Color.blue)
                    .overlay(
                        Rectangle() // Relative overlay (content view)
                            .foregroundColor(Color.yellow)
                            // 1/4 of the height at top and bottom, 1/8 of the width on each side
                            .padding(EdgeInsets(top: geo.size.height/4, leading: (geo.size.width*0.25)/2, bottom: geo.size.height/4, trailing: (geo.size.width*0.25)/2))
                            .overlay(Text("Yellow overlay must be 1/2 height and 3/4 width of the blue"))
                    )
            }
        }
    }
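The arithmetic behind those insets can be written down independently of SwiftUI. The following is a minimal sketch; `Insets` and `centeredInsets` are hypothetical names standing in for `EdgeInsets` and the inline calculation above.

```swift
/// Hypothetical value type standing in for SwiftUI's EdgeInsets.
struct Insets: Equatable {
    var top, leading, bottom, trailing: Double
}

/// Insets that leave a centered region covering `widthFraction` x `heightFraction`
/// of a container with the given size.
func centeredInsets(width: Double, height: Double,
                    widthFraction: Double, heightFraction: Double) -> Insets {
    let horizontal = width * (1 - widthFraction) / 2   // split the leftover width evenly
    let vertical = height * (1 - heightFraction) / 2   // split the leftover height evenly
    return Insets(top: vertical, leading: horizontal, bottom: vertical, trailing: horizontal)
}

// A 400 x 200 container with a 3/4-width, 1/2-height overlay:
let insets = centeredInsets(width: 400, height: 200, widthFraction: 0.75, heightFraction: 0.5)
print(insets.leading, insets.top) // 50.0 50.0
```

The fractions are the only real inputs; the container size still has to come from somewhere, which is where GeometryReader enters.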

The real problem starts when the superview is an Image that scales to .fit:

    struct RelativeView: View {
        var body: some View {
            GeometryReader { geo in
                // Width of the original image in pixels
                let realImageWidth = CGFloat(4032)
                let viewWidth = geo.size.width
                let relativeWidthFactor = viewWidth/realImageWidth

                // Margins of the "frame", measured in pixels of the original image
                let leadingX = CGFloat(1110)
                let trailingX = CGFloat(750)
                let topY = CGFloat(770)
                let bottomY = CGFloat(936)

                Image("TestBackground")
                    .resizable()
                    .aspectRatio(contentMode: .fit) // Superview
                    .overlay(
                        Rectangle() // Relative overlay (content view)
                            .foregroundColor(Color.yellow)
                            .padding(EdgeInsets(top: topY * relativeWidthFactor, leading: leadingX * relativeWidthFactor, bottom: bottomY * relativeWidthFactor, trailing: trailingX * relativeWidthFactor))
                            .overlay(Text("Yellow overlay must be 1/2 height and 3/4 width of the blue"))
                    )
            }
        }
    }
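The scaling in that example boils down to one factor applied to all four pixel margins. Here is a sketch of it as plain Swift, using the numbers from the code above; `PixelMargins` and `scaledMargins` are hypothetical names.

```swift
/// Margins of the "frame", measured in pixels of the original image
/// (default values are the ones used in the example above).
struct PixelMargins {
    var top = 770.0, leading = 1110.0, bottom = 936.0, trailing = 750.0
}

/// Scales pixel margins to the size at which the image is displayed.
/// `displayedWidth` is assumed to be the width of the image as drawn.
func scaledMargins(_ m: PixelMargins, imagePixelWidth: Double, displayedWidth: Double)
    -> (top: Double, leading: Double, bottom: Double, trailing: Double) {
    let factor = displayedWidth / imagePixelWidth
    return (m.top * factor, m.leading * factor, m.bottom * factor, m.trailing * factor)
}

// Image drawn at half its pixel width:
let m = scaledMargins(PixelMargins(), imagePixelWidth: 4032, displayedWidth: 2016)
print(m.leading, m.top) // 555.0 385.0
```

This only gives correct margins when `displayedWidth` really is the width of the fitted image; the example above passes the width of the GeometryReader instead.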

Please note that the center text is not properly centered because the Text overlay is attached after the padding, so it is centered in the padded bounds rather than in the yellow Rectangle. If I wrap the yellow Rectangle in a separate View, the text is centered in the yellow box instead of the superview. (Don't get me started on that...)

The problems start to surface when I then use this View in another view. Then the GeometryReader starts to mess with the dynamic SwiftUI auto-world.

    import SwiftUI

    struct GrainCart3dView: View {
        var body: some View {
            GeometryReader { geo in
                VStack {
                    Rectangle().foregroundColor(.green)
                    RelativeContainerView()
                }
            }
        }
    }

    struct RelativeContainerView: View {
        var body: some View {
            GeometryReader { geo in
                // Width of the original image in pixels
                let realImageWidth = CGFloat(4032)
                let viewWidth = geo.size.width
                let relativeWidthFactor = viewWidth/realImageWidth

                // Margins of the "frame", measured in pixels of the original image
                let leadingX = CGFloat(1110)
                let trailingX = CGFloat(750)
                let topY = CGFloat(770)
                let bottomY = CGFloat(936)

                Image("TestBackground")
                    .resizable()
                    .aspectRatio(contentMode: .fit) // Superview
                    .overlay(
                        RelativeContentView() // Relative overlay (content view)
                            .padding(EdgeInsets(top: topY * relativeWidthFactor, leading: leadingX * relativeWidthFactor, bottom: bottomY * relativeWidthFactor, trailing: trailingX * relativeWidthFactor))
                    )
            }
        }
    }

    struct RelativeContentView: View {
        var body: some View {
            Rectangle()
                .foregroundColor(.yellow)
                .overlay(Text("Yellow overlay must be 1/2 height and 3/4 width of the blue"))
        }
    }

On iPad in landscape, the even distribution results in the frame view not filling the entire width, and since the frame padding is calculated from the width, it's now wrong.
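To make the mismatch concrete, here is a small aspect-fit calculation in plain Swift. It is a sketch under assumptions: the image height of 3024 pixels and the 1008 x 378 slot are made-up values (only the 4032 width appears above), and `aspectFitSize` is a hypothetical helper.

```swift
/// Size of content when aspect-fitted into a container.
func aspectFitSize(contentWidth: Double, contentHeight: Double,
                   containerWidth: Double, containerHeight: Double)
    -> (width: Double, height: Double) {
    // The tighter of the two axes decides the scale.
    let scale = min(containerWidth / contentWidth, containerHeight / contentHeight)
    return (contentWidth * scale, contentHeight * scale)
}

// Assumed image: 4032 x 3024 px. Assumed landscape slot: 1008 x 378 pt.
let fitted = aspectFitSize(contentWidth: 4032, contentHeight: 3024,
                           containerWidth: 1008, containerHeight: 378)
let factorFromContainer = 1008.0 / 4032.0    // what geo.size.width yields
let factorFromImage = fitted.width / 4032.0  // what the drawn image actually uses
print(fitted.width, fitted.height)           // 504.0 378.0
print(factorFromContainer, factorFromImage)  // 0.25 0.125
```

With these numbers the image is limited by height, so it is drawn at half the container width and the padding computed from the container width comes out twice as large as it should be.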

Where is the content view now on the iPad?

The VStack distributes the two views evenly, but let's say I want to fit the frame view to the width, maintain its aspect ratio, and then fit the green view to the remaining space.

In Auto Layout I could just set the priority of the frame view, but that's not how it works with SwiftUI. My only way with SwiftUI is to wrap it in yet another superview with yet another GeometryReader, mathematically setting a calculated relationship between the green view and the frame view. And even worse, I have to set sizes on both - there is no way to set the size of one and "fill the gap" automatically with the second.
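One direction that may be worth testing, sketched here under assumptions rather than offered as a verified solution: SwiftUI does have a `layoutPriority(_:)` modifier, and a GeometryReader placed inside the overlay is proposed the size of the fitted image rather than the whole slot. `scaled`, `FramedImageSketch` and `UnevenStackSketch` are hypothetical names; the pixel values are the ones from the examples above.

```swift
import SwiftUI

/// Scales every edge of `insets` by `factor`.
func scaled(_ insets: EdgeInsets, by factor: CGFloat) -> EdgeInsets {
    EdgeInsets(top: insets.top * factor, leading: insets.leading * factor,
               bottom: insets.bottom * factor, trailing: insets.trailing * factor)
}

struct FramedImageSketch: View {
    // Pixel values taken from the examples above.
    let imagePixelWidth: CGFloat = 4032
    let pixelInsets = EdgeInsets(top: 770, leading: 1110, bottom: 936, trailing: 750)

    var body: some View {
        Image("TestBackground")
            .resizable()
            .aspectRatio(contentMode: .fit)
            .overlay(
                // Inside the overlay, geo.size should describe the image
                // as displayed, not the space offered to the whole view.
                GeometryReader { geo in
                    Rectangle()
                        .foregroundColor(.yellow)
                        .padding(scaled(pixelInsets, by: geo.size.width / imagePixelWidth))
                }
            )
    }
}

struct UnevenStackSketch: View {
    var body: some View {
        VStack(spacing: 0) {
            Color.green            // flexible: takes whatever space is left
            FramedImageSketch()
                .layoutPriority(1) // offered space before the green view
        }
    }
}
```

The image view itself no longer sits inside a GeometryReader, so it keeps its aspect-fitted size in the stack, and only the overlay does any arithmetic.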

My issue here is that sizing and positioning with GeometryReader seems very tied to pixels and very far from the relative and dynamic concept of SwiftUI.

So, to clarify: what I'm looking for is a frame whose padding scales relative to the superview size, while the views are distributed unevenly in the vertical direction.

How do I approach this in a better and more generic way?