I have the following text and want to isolate the part of the sentence related to each keyword, in this case `keywords = ['pizza', 'chips']`:

```python
text = "The pizza is great but the chips aren't the best"
```

Expected output:

```python
{'pizza': 'The pizza is great'}
{'chips': "the chips aren't the best"}
```
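For comparison, here is a minimal parser-free sketch that reproduces the expected output for this sentence. It assumes that clauses are delimited by commas, semicolons, or the words "but"/"and"; the function name `keyword_clauses` and the boundary regex are hypothetical, and this is a heuristic rather than a real clause detector.

```python
import re

# Hypothetical clause boundaries: commas, semicolons, and the words
# "but"/"and". A heuristic only; it does not parse the sentence.
CLAUSE_BOUNDARY = re.compile(r"\s*(?:[,;]|\bbut\b|\band\b)\s*", re.IGNORECASE)


def keyword_clauses(text, keywords):
    """Map each keyword to the first clause that mentions it."""
    clauses = [c for c in CLAUSE_BOUNDARY.split(text) if c]
    result = {}
    for kw in keywords:
        # The trailing \w* lets "pizza" also match "pizzas"
        kw_re = re.compile(rf"\b{re.escape(kw)}\w*", re.IGNORECASE)
        for clause in clauses:
            if kw_re.search(clause):
                result[kw] = clause
                break
    return result


text = "The pizza is great but the chips aren't the best"
print(keyword_clauses(text, ["pizza", "chips"]))
# {'pizza': 'The pizza is great', 'chips': "the chips aren't the best"}
```

It returns one dict instead of one per keyword. Splitting on "and" is exactly what breaks on coordinated nouns such as "pizzas and chips", which is where a dependency parse should do better.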

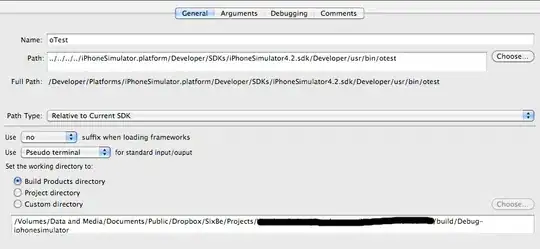

I have tried using spaCy's `DependencyMatcher`, but admittedly I'm not quite sure how it works. I tried the following pattern for "chips", which yields no matches:

```python
import spacy
from spacy.matcher import DependencyMatcher

nlp = spacy.load("en_core_web_sm")

pattern = [
    # Anchor node: the token whose text is exactly "chips"
    {
        "RIGHT_ID": "chips_id",
        "RIGHT_ATTRS": {"ORTH": "chips"}
    },
    # Second node, related to the anchor through REL_OP
    {
        "LEFT_ID": "chips_id",
        "REL_OP": "<<",
        "RIGHT_ID": "other_words",
        "RIGHT_ATTRS": {"POS": '*'}
    }
]

matcher = DependencyMatcher(nlp.vocab)
matcher.add("chips", [pattern])

doc = nlp("The pizza is great but the chips aren't the best")

# Each match is (match_id, [one token index per pattern node])
for id_, (_, other_words) in matcher(doc):
    print(doc[other_words])
```
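For reference, spaCy's documentation describes `A << B` as A being a dependent in a chain up to B (following dep → head paths) and `A >> B` as the reverse, so with `chips_id` on the left, `<<` walks *up* from "chips" to its ancestors rather than down into the rest of its clause. The sketch below illustrates this in plain Python on a hand-written, hypothetical parse (not actual `en_core_web_sm` output): `ancestors` and `descendants` mirror what `<<` and `>>` would let the second node match, and `clause_for` is one possible way to get a keyword's clause, taking the subtree of the keyword's head while skipping `conj`/`cc` branches. All names here (`Tok`, `PARSE`, `clause_for`, ...) are illustrative.

```python
from typing import List, NamedTuple


class Tok(NamedTuple):
    text: str
    ws: str    # trailing whitespace, like spaCy's Token.whitespace_
    head: int  # index of the syntactic head; the root points to itself
    dep: str


# Hand-written, hypothetical parse of the example sentence
# ("aren't" split into "are" + "n't"). A real model may differ.
PARSE = [
    Tok("The", " ", 1, "det"),
    Tok("pizza", " ", 2, "nsubj"),
    Tok("is", " ", 2, "ROOT"),
    Tok("great", " ", 2, "acomp"),
    Tok("but", " ", 2, "cc"),
    Tok("the", " ", 6, "det"),
    Tok("chips", " ", 7, "nsubj"),
    Tok("are", "", 2, "conj"),
    Tok("n't", " ", 7, "neg"),
    Tok("the", " ", 10, "det"),
    Tok("best", "", 7, "attr"),
]


def children(parse: List[Tok], i: int) -> List[int]:
    return [j for j, t in enumerate(parse) if t.head == i and j != i]


def ancestors(parse: List[Tok], i: int) -> List[int]:
    """Tokens above i: the candidates X for `i << X`."""
    out = []
    while parse[i].head != i:
        i = parse[i].head
        out.append(i)
    return out


def descendants(parse: List[Tok], i: int, skip=()) -> List[int]:
    """Tokens below i (the candidates X for `i >> X`), not descending
    into direct children of i whose dep label is in `skip`."""
    out = []
    stack = [c for c in children(parse, i) if parse[c].dep not in skip]
    while stack:
        j = stack.pop()
        out.append(j)
        stack.extend(children(parse, j))
    return sorted(out)


def clause_for(parse: List[Tok], i: int) -> str:
    """Assumes keyword i hangs off its clause's verb: return that verb's
    subtree, minus coordinated clauses ("conj") and conjunctions ("cc")."""
    verb = parse[i].head
    idx = sorted([verb] + descendants(parse, verb, skip=("conj", "cc")))
    # Join like a span's text: keep inner whitespace, drop the final one
    return "".join(parse[j].text + parse[j].ws for j in idx[:-1]) + parse[idx[-1]].text


chips = 6
print([PARSE[j].text for j in ancestors(PARSE, chips)])    # ['are', 'is']
print([PARSE[j].text for j in descendants(PARSE, chips)])  # ['the']
print({PARSE[i].text: clause_for(PARSE, i) for i in (1, 6)})
# {'pizza': 'The pizza is great', 'chips': "the chips aren't the best"}
```

In spaCy itself the same walk would use `token.head`, `token.children`, `token.dep_` and `token.ancestors`. The assumption that the keyword's head is the clause verb holds for subjects like these but not in general. Separately, as far as I know `{"POS": "*"}` is not a wildcard in spaCy's token patterns; an empty dict `{}` is the usual way to match any token.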

Edit:

Additional example sentences:

```python
example_sentences = [
    "The pizza's are just OK, the chips is stiff and the service mediocre",
    "Then the mains came and the pizza - these we're really average - chips had loads of oil and was poor",
    "Nice pizza freshly made to order food is priced well, but chips are not so keenly priced.",
    "The pizzas and chips taste really good and the Tango Ice Blast was refreshing"
]
```