I have a script in AWS Glue ETL Job, where it reads a S3 bucket with a lot of parquet files, do a sort by key1, key2 and a timestamp field. After that the script delete the duplicates and save a single parquet file in other S3 Bucket.

Look the data I have before the Job runs:

| key1 | key2 | uploadTimestamp |

|---|---|---|

| 0005541779 | 10 | 2021-12-29 14:54:08.753 |

| 0005541779 | 10 | 2021-12-29 15:06:05.968 |

The code that do the sort and eliminate duplicates:

#############################################################

tempDF = S3bucket_node1.toDF() #from Dynamic Frame to Data Frame

sortedDF = tempDF.orderBy(f.col("uploadTimestamp").desc(),"key1","key2").dropDuplicates(["key1","key2"]) #sort and remove duplicates

dynamicFrame = DynamicFrame.fromDF(sortedDF, glueContext, 'salesOrder') #back to Dynamic Frame

#############################################################

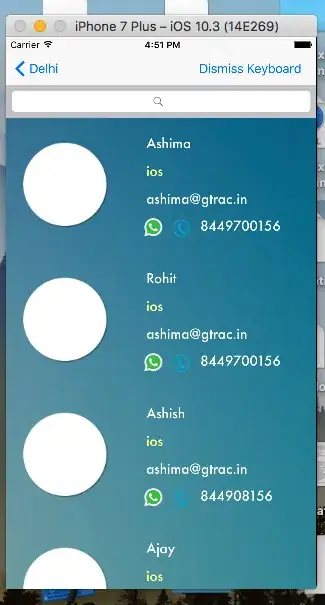

Get a look on this image after an order by:

My problem: In the output file, some data got the last timestamp, some data got the first.. I can't understand why it doesnt work for all data.

Thanks.