I am doing a multiple regression test with statsmodels.

I am very confident that there is a relationship in the data, both from what I already know about it from other sources and from plotting it. However, when I run a multiple regression with statsmodels, the p-value is shown as 0.000. My interpretation of a low p-value is that there is no relationship. A value of exactly 0.000, however, looks more like something has failed computationally, because I would expect statistical noise alone to produce a p-value of at least 0.1.

What could be the reason for a multiple regression test that computes without errors but gives a p-value of 0.000 when there is clearly a relationship in the data?

EDIT:

I am not sure whether this is a statistical problem or a code problem. It would therefore be really helpful if people with experience with statsmodels could tell me whether I used it correctly. If there is consensus that this is a data-related problem, I will close this question here and reopen it on Cross Validated, as suggested in a comment.

In the image below I have plotted the independent variable against the dependent one. I think this shows that there is some kind of relationship:

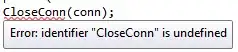

But when I do a multiple regression test:

    import statsmodels.api as sm

    df = df.dropna()

    Y = df['share_yes']
    X = df[[
        'party_percent',
    ]]
    X = sm.add_constant(X)

    ks = sm.OLS(Y, X)
    ks_res = ks.fit()
    ks_res.summary()
    print(ks_res.summary())

... the p-value is shown as 0.000:

                                OLS Regression Results
    ==============================================================================
    Dep. Variable:              share_yes   R-squared:                       0.504
    Model:                            OLS   Adj. R-squared:                  0.504
    Method:                 Least Squares   F-statistic:                     2288.
    Date:                Mon, 27 Dec 2021   Prob (F-statistic):               0.00
    Time:                        13:41:57   Log-Likelihood:                 2152.1
    No. Observations:                2256   AIC:                            -4300.
    Df Residuals:                    2254   BIC:                            -4289.
    Df Model:                           1
    Covariance Type:            nonrobust
    =================================================================================
                        coef    std err          t      P>|t|      [0.025      0.975]
    ---------------------------------------------------------------------------------
    const             0.4296      0.004    103.536      0.000       0.421       0.438
    party_percent     1.2539      0.026     47.831      0.000       1.202       1.305
    ==============================================================================
    Omnibus:                       10.487   Durbin-Watson:                   0.931
    Prob(Omnibus):                  0.005   Jarque-Bera (JB):               10.492
    Skew:                          -0.166   Prob(JB):                      0.00527
    Kurtosis:                       3.044   Cond. No.                         13.6
    ==============================================================================

    Notes:
    [1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

This is what my pandas dataframe looks like:

          unique_district  party_percent  share_yes
    0                1100       0.089320   0.588583
    1                1101       0.099448   0.505556
    2                1102       0.146040   0.545226
    3                1103       0.094512   0.496875
    4                1104       0.136538   0.513672
    ...               ...            ...        ...
    2252            12622       0.040000   0.274827
    2253            12623       0.038660   0.322917
    2254            12624       0.016453   0.439539
    2255            12625       0.060952   0.386774
    2256            12626       0.032882   0.306452

Please note that I am actually using more than one variable, hence "multiple regression", but for the sake of brevity I have only used one here.