While writing this question, I managed to find an explanation. But since it seems to be a tricky point, I will post it and answer it anyway. Feel free to add to it.

I am seeing what looks to me like inconsistent behaviour from PySpark, but as I am quite new to it, I may be missing something. All my steps run in an Azure Databricks notebook, and the data comes from a parquet file hosted in Azure Data Lake Gen2.

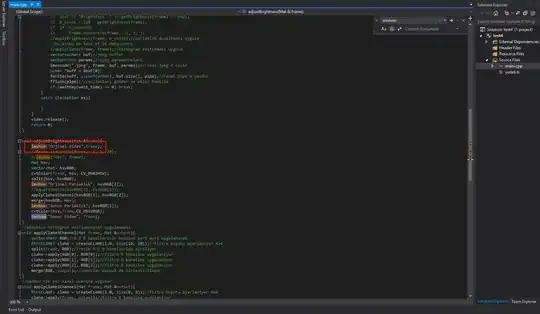

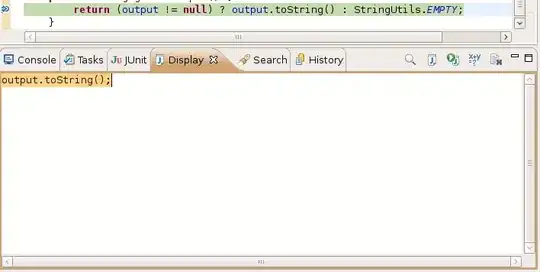

I simply want to filter a Spark DataFrame, created by reading a parquet file, down to the records with NULL values, using the following steps:

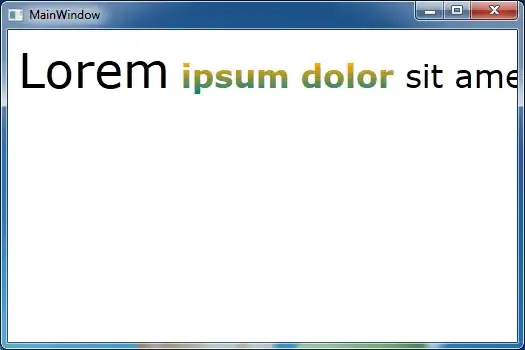

Filtering on the `phone` column works fine:

We can see that at least some `contact_tech_id` values are also missing. But when I filter on this column, an empty DataFrame is returned.

Is there any explanation for why this could happen, or what I should look for?