I have compiled OpenCV 4.5.4, the newest available version, against the newest CUDA 11.5 with fast math enabled. It runs on a Windows 10 machine with a GeForce RTX 2070 Super graphics card (compute capability 7.5). I'm using Python 3.8.5.

Runtime results:

- The CPU outperforms the GPU (matching a 70x70 needle image in a 300x300 source image).
- The biggest GPU bottleneck is uploading the images to the GPU before template matching.
- The CPU takes around 0.005 seconds, while the GPU takes around 0.42 seconds.
- Both methods find a 100% match.

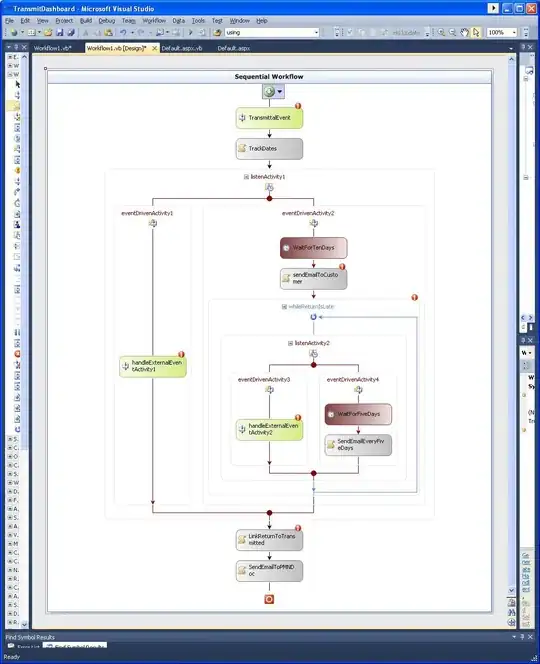
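To put the problem size in perspective, this back-of-the-envelope calculation (plain Python, using only the sizes stated above) counts how many template placements `TM_CCOEFF_NORMED` has to score and how many multiply-adds a naive direct correlation would need. OpenCV may use a different internal algorithm (for example a DFT-based correlation), so treat the count only as an order-of-magnitude indication of how small this workload is.

```python
# Rough size of the template-matching workload described above
# (70x70 needle in a 300x300 grayscale source).
SRC_W, SRC_H = 300, 300
TPL_W, TPL_H = 70, 70

# matchTemplate produces one score per valid placement of the template:
# a (W - w + 1) x (H - h + 1) result map.
res_w = SRC_W - TPL_W + 1
res_h = SRC_H - TPL_H + 1
positions = res_w * res_h

# A naive (direct) correlation touches every template pixel at every placement.
naive_macs = positions * TPL_W * TPL_H

print(f"result map: {res_w}x{res_h} = {positions} positions")
print(f"naive multiply-adds: {naive_macs:,}")
```

This prints a 231x231 result map (53361 positions) and 261,468,900 naive multiply-adds.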

Images used:

Python code using CPU:

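```python
import cv2
import time

# Note: this timing also includes reading both images from disk
start_time = time.time()

# Load the source image and the template ("needle") as 8-bit grayscale
src = cv2.imread("cat.png", cv2.IMREAD_GRAYSCALE)
needle = cv2.imread("needle.png", cv2.IMREAD_GRAYSCALE)

# Score every placement of the needle inside the source image
result = cv2.matchTemplate(src, needle, cv2.TM_CCOEFF_NORMED)

# For TM_CCOEFF_NORMED the best match is the maximum; max_loc is (x, y)
min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(result)

print("CPU --- %s seconds ---" % (time.time() - start_time))
```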
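For reference, the score that `cv2.matchTemplate` computes with `TM_CCOEFF_NORMED` is the correlation of the mean-subtracted template with each mean-subtracted image patch, divided by the product of their norms. The deliberately naive NumPy sketch below makes that explicit and shows that `max_loc` is reported in (x, y) order, i.e. (column, row). The helper names `ccoeff_normed` and `best_match` are hypothetical, not OpenCV APIs, and the handling of flat (zero-variance) patches is an assumption of this sketch.

```python
from typing import Tuple

import numpy as np


def ccoeff_normed(image: np.ndarray, templ: np.ndarray) -> np.ndarray:
    """Naive reference for the TM_CCOEFF_NORMED score on grayscale images.

    Returns an (H - h + 1) x (W - w + 1) float64 score map, the same shape
    as the result of cv2.matchTemplate.
    """
    image = image.astype(np.float64)
    t = templ.astype(np.float64)
    t = t - t.mean()                 # zero-mean template T'
    t_norm = np.sqrt((t * t).sum())
    h, w = t.shape
    H, W = image.shape
    out = np.zeros((H - h + 1, W - w + 1))
    for y in range(H - h + 1):
        for x in range(W - w + 1):
            p = image[y:y + h, x:x + w]
            p = p - p.mean()         # zero-mean image patch I'
            den = np.sqrt((p * p).sum()) * t_norm
            if den > 0:              # flat patches stay 0 in this sketch
                out[y, x] = (p * t).sum() / den
    return out


def best_match(result: np.ndarray) -> Tuple[float, Tuple[int, int]]:
    """Return (max_val, (x, y)), mirroring the order of cv2.minMaxLoc's max_loc."""
    row, col = np.unravel_index(np.argmax(result), result.shape)
    return float(result[row, col]), (int(col), int(row))
```

It is far slower than OpenCV and only meant for checking results, e.g. comparing `ccoeff_normed(src, needle)` with the CPU `result` array via `np.allclose` and a loose tolerance, since OpenCV returns a 32-bit float result.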

Python code using GPU:

```python
import cv2
import time

start_time = time.time()

# Load both images as 8-bit grayscale (CV_8UC1)
src = cv2.imread("cat.png", cv2.IMREAD_GRAYSCALE)
needle = cv2.imread("needle.png", cv2.IMREAD_GRAYSCALE)

# GPU matrix headers for the source and the template
gsrc = cv2.cuda_GpuMat()
gtmpl = cv2.cuda_GpuMat()

# Copy the host images into device memory
upload_time = time.time()
gsrc.upload(src)
gtmpl.upload(needle)
print("GPU Upload time --- %s seconds ---" % (time.time() - upload_time))

# Note: this timed section includes creating the matcher, not only matching
match_time = time.time()
matcher = cv2.cuda.createTemplateMatching(cv2.CV_8UC1, cv2.TM_CCOEFF_NORMED)
gresult = matcher.match(gsrc, gtmpl)
print("GPU Match time --- %s seconds ---" % (time.time() - match_time))

# Copy the score map back to the host and locate the best match on the CPU
result_time = time.time()
resultg = gresult.download()
min_valg, max_valg, min_locg, max_locg = cv2.minMaxLoc(resultg)
print("GPU Result time --- %s seconds ---" % (time.time() - result_time))

print("GPU --- %s seconds ---" % (time.time() - start_time))
```

Even if I ignore the time it takes to upload the images to the GPU, the matching step alone takes more than 10x as long as the entire process on the CPU. My CUDA installation works correctly: I have run other tests in which the GPU outperformed the CPU by a wide margin, but the template-matching results have been really disappointing so far.
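The timings above come from single `time.time()` measurements, and the first CUDA call in a process may include one-time costs such as CUDA context creation. A minimal, stdlib-only sketch for timing one step in isolation, with untimed warm-up calls and the median of several runs, could look like this (the helper name `benchmark` and its `warmup`/`repeats` parameters are hypothetical):

```python
import statistics
import time
from typing import Callable


def benchmark(fn: Callable[[], object], warmup: int = 3, repeats: int = 20) -> float:
    """Return the median wall-clock time of fn() in seconds.

    The first `warmup` calls are run but not timed, so one-time costs
    (lazy initialization, first-call allocations, caches) are excluded.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        fn()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)
```

With the variables from the code above, `benchmark(lambda: cv2.matchTemplate(src, needle, cv2.TM_CCOEFF_NORMED))` would time only the CPU matching step, and `benchmark(lambda: matcher.match(gsrc, gtmpl).download())` the GPU matching step plus the copy back to the host. Including `download()` makes the host wait for the GPU result, so asynchronous work is not under-counted.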

Why is the GPU performing so badly?