I'm trying to create a random float generator (range 0.0 to 1.0) that takes a single target value and a strength value that increases or decreases the chance of hitting that target. For example, if my target is 0.7 and the strength value is high, I would expect the function to return mostly values around 0.7.

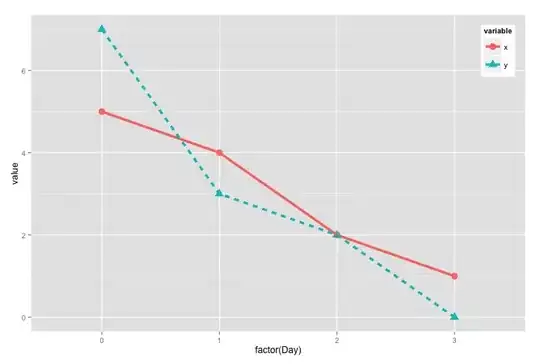

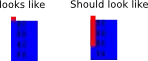

Put another way, I want a function that, when run a lot of times, would produce a distribution graph something like this:

Something like a bell curve, yes, but with a strict range limit (rather than the unbounded -inf to +inf range of a normal distribution). Clamping a normal distribution is not ideal; I want the distribution to end naturally at the range limits.
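One candidate that seems to match this description is the Beta distribution, which is bell-like for suitable parameters but lives strictly on [0, 1]. The sketch below is only an illustration under assumptions: the function name `sample_near` and the `concentration` parameter are hypothetical, and the parameterisation (alpha = 1 + k·target, beta = 1 + k·(1 − target)) is just one way to put the mode at the target. With `concentration = 0` it reduces to a uniform distribution.

```python
import random


def sample_near(target, concentration, rng=random):
    """Draw one float in [0, 1] from a Beta distribution peaked at `target`.

    `concentration` (hypothetical name) is any number >= 0:
    0 gives a uniform distribution, larger values narrow the peak.
    """
    if not 0.0 <= target <= 1.0:
        raise ValueError("target must be in [0, 1]")
    if concentration < 0.0:
        raise ValueError("concentration must be >= 0")
    # For Beta(alpha, beta) with alpha, beta > 1 the mode is
    # (alpha - 1) / (alpha + beta - 2), which works out to `target` here.
    alpha = 1.0 + concentration * target
    beta = 1.0 + concentration * (1.0 - target)
    return rng.betavariate(alpha, beta)


if __name__ == "__main__":
    samples = [sample_near(0.7, 50.0) for _ in range(10)]
    print(samples)
```

Python's `random.betavariate` is in the standard library; in C# an equivalent sampler would have to come from a numerics library or be built from two gamma draws.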

The approach I've been attempting is to come up with a formula to transform a value from uniform distribution to the mythical distribution I'm envisioning. Something like an inverse sine:

with the ability to widen out that middle point, via the strength value:

and also the ability to move that midpoint up and down, via the target value:

Target changed to 0.7 (courtesy of MS Paint because I couldn't figure this part out mathematically)
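To make the transform idea concrete, here is a rough sketch of one curve with that general shape: a piecewise power function that maps a uniform value `u` onto [0, 1], flattens out around the target, and still hits 0 and 1 at the ends. The name `warp` and the `power` parameter are assumptions for illustration. `power = 1` leaves `u` unchanged (uniform), and larger values widen the flat region around the target. This is not an inverse sine, and the resulting density has a sharp spike at the target rather than a smooth bell.

```python
import random


def warp(u, target, power):
    """Map a uniform u in [0, 1] to [0, 1], flattening the curve near `target`.

    `power` (hypothetical name) must be >= 1; 1 is the identity mapping.
    """
    if u < target:
        # Left piece: runs from 0 at u = 0 up to `target` at u = target.
        return target - target * ((target - u) / target) ** power
    if target >= 1.0:
        # Degenerate right piece; avoids dividing by zero below.
        return 1.0
    # Right piece: runs from `target` at u = target up to 1 at u = 1.
    return target + (1.0 - target) * ((u - target) / (1.0 - target)) ** power


if __name__ == "__main__":
    print([round(warp(random.random(), 0.7, 4.0), 3) for _ in range(10)])
```

Because the split point is `u = target`, a fraction `target` of the outputs falls below the target, so the result is lopsided whenever the target is far from 0.5.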

The range of this theoretical "strength value" is up for debate. I could imagine a bounded value, say between 0 and 1, where 0 gives a uniform distribution and 1 gives a 100% chance of hitting the target. Alternatively, I could imagine an unbounded value that approaches a 100% chance the higher it gets, without ever reaching it. Something along either line would work.
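The two readings of the strength value can be converted into each other, so it may not matter much which one is exposed. As a small sketch (the function names and the specific mapping s / (1 − s) are assumptions, and other mappings would work too), a bounded strength in [0, 1) can be stretched onto an unbounded sharpness value in [0, ∞) and back:

```python
def sharpness_from_strength(strength):
    """Map a bounded strength in [0, 1) to an unbounded sharpness in [0, inf).

    0 stays 0 (uniform); values approaching 1 grow without bound.
    """
    if not 0.0 <= strength < 1.0:
        raise ValueError("strength must be in [0, 1)")
    return strength / (1.0 - strength)


def strength_from_sharpness(sharpness):
    """Inverse mapping: any sharpness >= 0 lands in [0, 1), never reaching 1."""
    if sharpness < 0.0:
        raise ValueError("sharpness must be >= 0")
    return sharpness / (1.0 + sharpness)


if __name__ == "__main__":
    for s in (0.0, 0.5, 0.9, 0.99):
        print(s, sharpness_from_strength(s))
```

A strength of exactly 1 is rejected here because it would correspond to an infinitely sharp peak; a caller wanting that case could simply return the target directly.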

I'm working in C# but this can be language-agnostic. Any help pointing me in the right direction is appreciated. Also happy to clarify further.