I am practicing with Conv1D in TensorFlow 2.7, and I am testing a decoder I built by checking whether it can overfit a single example. When trained on only one example, the model doesn't learn and fails to overfit it. I would like to understand this strange behavior. Here is the link to the notebook on Colab: Notebook.

import tensorflow as tf

from tensorflow.keras.layers import Input, Conv1D, Dense, BatchNormalization

from tensorflow.keras.layers import ReLU, MaxPool1D, GlobalMaxPool1D

from tensorflow.keras import Model

import numpy as np

def Decoder():

inputs = Input(shape=(68, 3), name='Input_Tensor')

# First hidden layer

conv1 = Conv1D(filters=64, kernel_size=1, name='Conv1D_1')(inputs)

bn1 = BatchNormalization(name='BN_1')(conv1)

relu1 = ReLU(name='ReLU_1')(bn1)

# Second hidden layer

conv2 = Conv1D(filters=64, kernel_size=1, name='Conv1D_2')(relu1)

bn2 = BatchNormalization(name='BN_2')(conv2)

relu2 = ReLU(name='ReLU_2')(bn2)

# Third hidden layer

conv3 = Conv1D(filters=64, kernel_size=1, name='Conv1D_3')(relu2)

bn3 = BatchNormalization(name='BN_3')(conv3)

relu3 = ReLU(name='ReLU_3')(bn3)

# Fourth hidden layer

conv4 = Conv1D(filters=128, kernel_size=1, name='Conv1D_4')(relu3)

bn4 = BatchNormalization(name='BN_4')(conv4)

relu4 = ReLU(name='ReLU_4')(bn4)

# Fifth hidden layer

conv5 = Conv1D(filters=1024, kernel_size=1, name='Conv1D_5')(relu4)

bn5 = BatchNormalization(name='BN_5')(conv5)

relu5 = ReLU(name='ReLU_5')(bn5)

global_features = GlobalMaxPool1D(name='GlobalMaxPool1D')(relu5)

global_features = tf.keras.layers.Reshape((1, -1))(global_features)

conv6 = Conv1D(filters=12, kernel_size=1, name='Conv1D_6')(global_features)

bn6 = BatchNormalization(name='BN_6')(conv6)

outputs = ReLU(name='ReLU_6')(bn6)

model = Model(inputs=[inputs], outputs=[outputs], name='Decoder')

return model

model = Decoder()

model.summary()

optimizer = tf.keras.optimizers.Adam(learning_rate=0.1)

losses = tf.keras.losses.MeanSquaredError()

model.compile(optimizer=optimizer, loss=losses)

n = 1

X = np.random.rand(n, 68, 3)

y = np.random.rand(n, 1, 12)

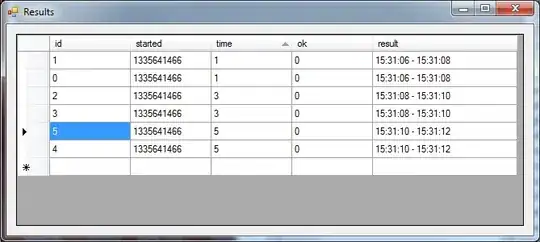

model.fit(x=X,y=y, verbose=1, epochs=30)
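One way to narrow this down is to inspect the gradients directly rather than the loss curve. The sketch below is a diagnostic under stated assumptions, not a fix: it rebuilds the same architecture compactly (the helper names `build_decoder` and `gradient_report` are hypothetical, introduced only for illustration), runs a single training-mode forward/backward pass with `tf.GradientTape`, and reports the prediction and the L2 norm of each trainable variable's gradient. If every norm is zero, no optimizer step can change the weights, whatever the learning rate.

```python
import numpy as np
import tensorflow as tf


def build_decoder() -> tf.keras.Model:
    """Hypothetical compact rebuild of the Decoder above (same layers, no names)."""
    inputs = tf.keras.Input(shape=(68, 3))
    x = inputs
    # Five Conv1D -> BatchNormalization -> ReLU blocks with the question's filter counts.
    for filters in (64, 64, 64, 128, 1024):
        x = tf.keras.layers.Conv1D(filters, kernel_size=1)(x)
        x = tf.keras.layers.BatchNormalization()(x)
        x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.GlobalMaxPool1D()(x)  # (batch, 1024)
    x = tf.keras.layers.Reshape((1, -1))(x)   # (batch, 1, 1024)
    x = tf.keras.layers.Conv1D(12, kernel_size=1)(x)
    x = tf.keras.layers.BatchNormalization()(x)
    outputs = tf.keras.layers.ReLU()(x)       # (batch, 1, 12)
    return tf.keras.Model(inputs, outputs)


def gradient_report(model: tf.keras.Model, X: np.ndarray, y: np.ndarray):
    """Hypothetical helper: one training-mode forward/backward pass.

    Returns the loss, the prediction, and a list of (variable name, gradient L2 norm).
    """
    loss_fn = tf.keras.losses.MeanSquaredError()
    with tf.GradientTape() as tape:
        # training=True makes BatchNormalization use the current batch's statistics,
        # as it does inside model.fit.
        pred = model(X, training=True)
        loss = loss_fn(y, pred)
    grads = tape.gradient(loss, model.trainable_variables)
    norms = [(v.name, float(tf.norm(g))) for v, g in zip(model.trainable_variables, grads)]
    return float(loss), pred.numpy(), norms


# Same data shapes as in the question: a single example (n = 1).
X = np.random.rand(1, 68, 3).astype("float32")
y = np.random.rand(1, 1, 12).astype("float32")

loss, pred, norms = gradient_report(build_decoder(), X, y)
print("loss:", loss)
print("prediction:", pred.ravel())
print("largest gradient norm:", max(norm for _, norm in norms))
```

A possible cause to check for, offered as an assumption to verify rather than a confirmed diagnosis: after `Reshape((1, -1))`, `BN_6` computes its training-mode mean and variance over the batch and length axes, which for `n = 1` contain a single value per channel. The normalized value is then 0, so the layer outputs `beta` (initialized to 0), and TensorFlow's ReLU has zero gradient at exactly 0, leaving nothing upstream with a learning signal.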