I am in the very early stages of exploring Argo with the Spark operator, running the Spark samples on a minikube setup on my EC2 instance.

Below are the resource details. I am not sure why I cannot see the Spark application logs.

WORKFLOW.YAML

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  name: spark-argo-groupby
spec:
  entrypoint: sparkling-operator
  templates:
  - name: spark-groupby
    resource:
      action: create
      manifest: |
        apiVersion: "sparkoperator.k8s.io/v1beta2"
        kind: SparkApplication
        metadata:
          generateName: spark-argo-groupby
        spec:
          type: Scala
          mode: cluster
          image: gcr.io/spark-operator/spark:v3.0.3
          imagePullPolicy: Always
          mainClass: org.apache.spark.examples.GroupByTest
          mainApplicationFile: local:///opt/spark/spark-examples_2.12-3.1.1-hadoop-2.7.jar
          sparkVersion: "3.0.3"
          driver:
            cores: 1
            coreLimit: "1200m"
            memory: "512m"
            labels:
              version: 3.0.0
          executor:
            cores: 1
            instances: 1
            memory: "512m"
            labels:
              version: 3.0.0
  - name: sparkling-operator
    dag:
      tasks:
      - name: SparkGroupBY
        template: spark-groupby
```

ROLES

```yaml
# ClusterRole that allows managing SparkApplication resources on the cluster
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: spark-cluster-cr
  labels:
    rbac.authorization.kubeflow.org/aggregate-to-kubeflow-edit: "true"
rules:
- apiGroups:
  - sparkoperator.k8s.io
  resources:
  - sparkapplications
  verbs:
  - '*'
---
# Bind the ClusterRole to the default service account in the argo namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: argo-spark-crb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: spark-cluster-cr
subjects:
- kind: ServiceAccount
  name: default
  namespace: argo
```
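To reason about what `spark-cluster-cr` actually grants, here is a minimal Python sketch of how a role's rules are matched against a request. It is a simplified model and an assumption, not the Kubernetes implementation: it ignores `resourceNames`, subresources, non-resource URLs and ClusterRole aggregation, and it only looks at this one role, whereas the `default` service account in `argo` may also get permissions from other bindings. The helpers `rule_allows` and `role_allows` are hypothetical.

```python
# Simplified model of Kubernetes RBAC rule matching (assumption: ignores
# resourceNames, subresources, nonResourceURLs and ClusterRole aggregation).


def rule_allows(rule, api_group, resource, verb):
    """Return True if a single PolicyRule-like dict grants the request."""

    def matches(allowed, value):
        # "*" acts as a wildcard in apiGroups, resources and verbs.
        return "*" in allowed or value in allowed

    return (
        matches(rule.get("apiGroups", []), api_group)
        and matches(rule.get("resources", []), resource)
        and matches(rule.get("verbs", []), verb)
    )


def role_allows(rules, api_group, resource, verb):
    # RBAC is additive: any single matching rule grants access.
    return any(rule_allows(r, api_group, resource, verb) for r in rules)


# The rules of the spark-cluster-cr ClusterRole from the question.
SPARK_CLUSTER_CR_RULES = [
    {
        "apiGroups": ["sparkoperator.k8s.io"],
        "resources": ["sparkapplications"],
        "verbs": ["*"],
    }
]

if __name__ == "__main__":
    # Creating a SparkApplication is granted by this role.
    print(role_allows(SPARK_CLUSTER_CR_RULES, "sparkoperator.k8s.io", "sparkapplications", "create"))  # True
    # Creating core-group ("") pods is not granted by this role alone.
    print(role_allows(SPARK_CLUSTER_CR_RULES, "", "pods", "create"))  # False
```

With only this role bound, `create` on `sparkapplications` is allowed, while `create` on core-group `pods` is not.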

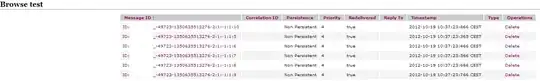

ARGO UI

To dig deeper, I tried all the steps listed at https://dev.to/crenshaw_dev/how-to-debug-an-argo-workflow-31ng, but I still could not get the application logs.

Basically, when I run these examples I expect the Spark application logs to be printed; in this case, the output of the `org.apache.spark.examples.GroupByTest` Scala example.

Interestingly, when I list the pods I expect to see driver and executor pods, but I only ever see one pod, and it has the logs shown in the attached image. Please help me understand why the logs are not generated and how I can get them.
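Spark on Kubernetes labels the pods it creates with `spark-role=driver` or `spark-role=executor`, so one way to check whether any driver or executor pods exist is to select on that label, for example `kubectl get pods -n argo -l spark-role`, or `CoreV1Api().list_namespaced_pod("argo", label_selector="spark-role")` with the official Kubernetes Python client. The sketch below shows only the grouping logic, on plain dicts that are a hypothetical stand-in for real pod objects, so it runs without a cluster; `group_by_spark_role` is a hypothetical helper.

```python
from collections import defaultdict

# Label that Spark on Kubernetes sets on the driver and executor pods.
SPARK_ROLE_LABEL = "spark-role"


def group_by_spark_role(pods):
    """Map each spark-role value ("driver", "executor") to pod names.

    Pods without the label, such as the Argo workflow step pod,
    are collected under "other".
    """
    groups = defaultdict(list)
    for pod in pods:
        labels = pod.get("labels") or {}
        groups[labels.get(SPARK_ROLE_LABEL, "other")].append(pod["name"])
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical listing matching what the question reports:
    # only the Argo step pod, no driver or executor pods.
    observed = [{"name": "spark-pi-dag-739246604", "labels": {}}]
    print(group_by_spark_role(observed))  # {'other': ['spark-pi-dag-739246604']}
```

If nothing ever lands under `driver`, the operator never started the application, which points at the SparkApplication's status rather than at the Argo pod's logs.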

RAW LOGS

```
$ kubectl logs spark-pi-dag-739246604 -n argo
time="2021-12-10T13:28:09.560Z" level=info msg="Starting Workflow Executor" version="{v3.0.3 2021-05-11T21:14:20Z 02071057c082cf295ab8da68f1b2027ff8762b5a v3.0.3 clean go1.15.7 gc linux/amd64}"
time="2021-12-10T13:28:09.581Z" level=info msg="Creating a docker executor"
time="2021-12-10T13:28:09.581Z" level=info msg="Executor (version: v3.0.3, build_date: 2021-05-11T21:14:20Z) initialized (pod: argo/spark-pi-dag-739246604) with template:\n{\"name\":\"sparkpi\",\"inputs\":{},\"outputs\":{},\"metadata\":{},\"resource\":{\"action\":\"create\",\"manifest\":\"apiVersion: \\\"sparkoperator.k8s.io/v1beta2\\\"\\nkind: SparkApplication\\nmetadata:\\n generateName: spark-pi-dag\\nspec:\\n type: Scala\\n mode: cluster\\n image: gjeevanm/spark:v3.1.1\\n imagePullPolicy: Always\\n mainClass: org.apache.spark.examples.SparkPi\\n mainApplicationFile: local:///opt/spark/spark-examples_2.12-3.1.1-hadoop-2.7.jar\\n sparkVersion: 3.1.1\\n driver:\\n cores: 1\\n coreLimit: \\\"1200m\\\"\\n memory: \\\"512m\\\"\\n labels:\\n version: 3.0.0\\n executor:\\n cores: 1\\n instances: 1\\n memory: \\\"512m\\\"\\n labels:\\n version: 3.0.0\\n\"},\"archiveLocation\":{\"archiveLogs\":true,\"s3\":{\"endpoint\":\"minio:9000\",\"bucket\":\"my-bucket\",\"insecure\":true,\"accessKeySecret\":{\"name\":\"my-minio-cred\",\"key\":\"accesskey\"},\"secretKeySecret\":{\"name\":\"my-minio-cred\",\"key\":\"secretkey\"},\"key\":\"spark-pi-dag/spark-pi-dag-739246604\"}}}"
time="2021-12-10T13:28:09.581Z" level=info msg="Loading manifest to /tmp/manifest.yaml"
time="2021-12-10T13:28:09.581Z" level=info msg="kubectl create -f /tmp/manifest.yaml -o json"
time="2021-12-10T13:28:10.348Z" level=info msg=argo/SparkApplication.sparkoperator.k8s.io/spark-pi-daghhl6s
time="2021-12-10T13:28:10.348Z" level=info msg="Starting SIGUSR2 signal monitor"
time="2021-12-10T13:28:10.348Z" level=info msg="No output parameters"
```
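The executor log shows Argo running `kubectl create` on the manifest, creating `argo/SparkApplication.sparkoperator.k8s.io/spark-pi-daghhl6s`, and then reporting no output parameters; in cluster mode the Spark program's own output goes to the driver pod that the operator starts, not to this Argo pod. As a sketch, the helpers below pull the created resource out of such a log and build follow-up `kubectl` commands. The `<namespace>/<Kind>.<group>/<name>` format is inferred from this one log line, and the `<app-name>-driver` pod name is an assumption about the operator's default naming; `find_created_resource` and `follow_up_commands` are hypothetical helpers.

```python
import re

# Assumed format, inferred from the executor log line above:
#   msg=<namespace>/<Kind>.<group>/<name>
CREATED_RE = re.compile(
    r'msg=(?P<namespace>[^/\s"]+)/(?P<kind>[A-Za-z]+)\.(?P<group>[^/\s"]+)/(?P<name>[^\s"]+)'
)


def find_created_resource(log_text):
    """Return namespace/kind/group/name of the first created resource, or None."""
    for line in log_text.splitlines():
        match = CREATED_RE.search(line)
        if match:
            return match.groupdict()
    return None


def follow_up_commands(resource):
    """Build kubectl commands to inspect the SparkApplication and its driver."""
    ns, name = resource["namespace"], resource["name"]
    return [
        # Operator-reported state and events for the application.
        f"kubectl describe sparkapplication {name} -n {ns}",
        # Assumption: the driver pod uses the default name "<app-name>-driver".
        f"kubectl logs {name}-driver -n {ns}",
    ]


if __name__ == "__main__":
    sample = (
        'time="2021-12-10T13:28:09.581Z" level=info msg="kubectl create -f /tmp/manifest.yaml -o json"\n'
        'time="2021-12-10T13:28:10.348Z" level=info msg=argo/SparkApplication.sparkoperator.k8s.io/spark-pi-daghhl6s\n'
        'time="2021-12-10T13:28:10.348Z" level=info msg="No output parameters"\n'
    )
    created = find_created_resource(sample)
    print(created)
    for cmd in follow_up_commands(created):
        print(cmd)
```

Quoted messages such as `msg="No output parameters"` are skipped because the pattern does not allow a quote right after `msg=`.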