You can scrape Google Arts and Culture using only the BeautifulSoup web scraping library. However, the page is dynamic, so we need to change strategy from parsing HTML elements (CSS selectors, etc.) to parsing data with regular expressions.

We need regular expressions because the data comes from the server embedded as inline JSON in `<script>` tags, which (presumably) JavaScript then uses to render the page. First, open the page source (CTRL + U) and search for the data you need, for example an author's name, to check whether it is there and, if so, where exactly it is located.
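A minimal sketch of that first step, assuming you have already saved the page source as a string: it collects the contents of every inline `<script>` tag and reports which ones contain a marker string. It uses the standard-library `html.parser` instead of BeautifulSoup so it has no third-party dependencies. The marker `"stella.pr"` is taken from the regular expression used below; the sample HTML and the names `InlineScriptCollector` and `scripts_containing` are made up for illustration.

```python
from html.parser import HTMLParser


class InlineScriptCollector(HTMLParser):
    """Collects the text content of every <script> tag in a document."""

    def __init__(self):
        super().__init__()
        self.scripts = []        # one string per <script> tag
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True
            self.scripts.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        # Script content may arrive in several chunks, so append to the current entry
        if self._in_script:
            self.scripts[-1] += data


def scripts_containing(page_source, marker):
    """Return the indexes of inline scripts whose text contains `marker`."""
    collector = InlineScriptCollector()
    collector.feed(page_source)
    collector.close()
    return [i for i, text in enumerate(collector.scripts) if marker in text]


# Hypothetical page source, for illustration only
sample = """<html><head>
<script src="app.js"></script>
<script>window.data = [["stella.pr","DatedAssets:1850",[]]];</script>
</head><body></body></html>"""

print(scripts_containing(sample, "stella.pr"))  # [1]
```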

Since the data for all three tabs (All, A-Z, Time) is returned at once, we first use a regular expression to select the part of the JSON that belongs to the "Time" tab, and then use further regular expressions to extract the fields we need from it: the author, the link to the author, and the number of paintings.

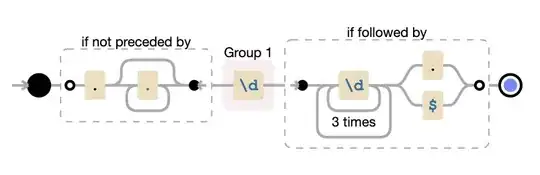
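To make the extraction step concrete, here is a sketch that runs the same three regular expressions used in the full script below on a small stand-in for the "Time" tab portion. The sample string is hypothetical and simplified; the real inline JSON is much larger and its exact layout may differ, so the patterns are tied to the page structure at the time of writing.

```python
import re

# Hypothetical, simplified stand-in for the "Time" tab portion of the inline JSON
portion = (
    '["stella.common.cobject","Vincent van Gogh","338 items","/entity/vincent-van-gogh/m07_m2"],'
    '["stella.common.cobject","Claude Monet","275 items","/entity/claude-monet/m01xnj"]'
)

# Author name: a quoted string (not the "stella.common.cobject" tag) followed by a quoted number
authors = re.findall(r'"((?!stella\.common\.cobject)\w.*?)","\d+', portion)

# Relative author links start with /entity
author_links = ["https://artsandculture.google.com" + link
                for link in re.findall(r'"(/entity.*?)"', portion)]

# Artwork counts look like "338 items"
number_of_artworks = re.findall(r'"(\d+).*?items"', portion)

results = [
    {"author": a, "author_link": l, "number_of_artworks": n}
    for a, l, n in zip(authors, author_links, number_of_artworks)
]
print(results)
```

Because the three lists are built independently and then zipped, an extra or missing match in any one of them would misalign the records, so it is worth checking that their lengths agree on real data.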

Here's an example regular expression that extracts part of the inline JSON that contains data from the "Time" tab:

```python
# https://regex101.com/r/4XAQ49/1
portion_of_script_tags = re.search(r'\["stella\.pr","DatedAssets:.*",\[\["stella\.common\.cobject",(.*?)\[\]\]\];</script>', str(all_script_tags)).group(1)
```
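A sketch of applying that pattern more defensively: it is written as a raw string (so `\[` and `\.` are not treated as Python string escapes), and it checks for `None` before calling `.group(1)`, because `re.search` returns `None` when the page layout changes or the request is blocked. The input string is a hypothetical, heavily shortened imitation of the page's script content, not real data, and the helper name `extract_time_tab_portion` is made up for this example.

```python
import re

# Same pattern as above, written as a raw string
DATED_ASSETS_RE = re.compile(
    r'\["stella\.pr","DatedAssets:.*",\[\["stella\.common\.cobject",(.*?)\[\]\]\];</script>'
)


def extract_time_tab_portion(script_text):
    """Return the captured "Time" tab portion, or None if the pattern is not found."""
    match = DATED_ASSETS_RE.search(script_text)
    return match.group(1) if match else None


# Hypothetical, shortened script content for illustration
sample = ('<script>var d = ["stella.pr","DatedAssets:1850",'
          '[["stella.common.cobject","Vincent van Gogh","338 items"],[]]];</script>')

print(extract_time_tab_portion(sample))
print(extract_time_tab_portion("<script>nothing here</script>"))  # None
```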

Also keep in mind that the request might be blocked if you use `requests` without custom headers, because the default user-agent in the `requests` library is `python-requests`. An additional step could be to rotate user-agents, for example, switching between PC, mobile, and tablet, as well as between browsers such as Chrome, Firefox, Safari, and Edge.
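User-agent rotation could look like the sketch below, which picks a random `User-Agent` from a small pool for each request. The pool is an assumption: the desktop Chrome string is the one used in the script in this answer, while the other two are illustrative mobile Safari and desktop Firefox strings; in practice you would maintain a larger, up-to-date list. The function name `random_headers` is hypothetical.

```python
import random

# Illustrative pool: desktop Chrome (from this answer), plus example mobile Safari and desktop Firefox strings
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.60 Safari/537.36",
    "Mozilla/5.0 (iPhone; CPU iPhone OS 15_4 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/15.4 Mobile/15E148 Safari/604.1",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:99.0) Gecko/20100101 Firefox/99.0",
]


def random_headers():
    """Build request headers with a randomly chosen User-Agent."""
    return {"User-Agent": random.choice(USER_AGENTS)}


headers = random_headers()
print(headers["User-Agent"] in USER_AGENTS)  # True
```

You would then pass `headers=random_headers()` to `requests.get` on each call instead of a fixed `headers` dict.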

Here's the full code, which extracts 54 authors (it is also available in the online IDE):

```python
from bs4 import BeautifulSoup
import requests, json, re

# https://requests.readthedocs.io/en/latest/user/quickstart/#passing-parameters-in-urls
params = {
    "tab": "time",
    "date": "1850"
}

# https://requests.readthedocs.io/en/latest/user/quickstart/#custom-headers
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.60 Safari/537.36",
}

html = requests.get("https://artsandculture.google.com/category/artist", params=params, headers=headers, timeout=30)
html.raise_for_status()

# the "lxml" parser requires the lxml package to be installed
soup = BeautifulSoup(html.text, "lxml")

author_results = []

# the data lives in inline <script> tags, so we search their combined markup
all_script_tags = soup.select("script")

# https://regex101.com/r/4XAQ49/1
match = re.search(r'\["stella\.pr","DatedAssets:.*",\[\["stella\.common\.cobject",(.*?)\[\]\]\];</script>', str(all_script_tags))
if match is None:
    raise SystemExit("Time tab data not found: the request may have been blocked or the page layout changed")
portion_of_script_tags = match.group(1)

# https://regex101.com/r/XXAbKH/1
authors = re.findall(r'"((?!stella\.common\.cobject)\w.*?)","\d+', portion_of_script_tags)

# https://regex101.com/r/K4K3iB/1
author_links = [f"https://artsandculture.google.com{link}" for link in re.findall(r'"(/entity.*?)"', portion_of_script_tags)]

# https://regex101.com/r/x6wwVJ/1
number_of_artworks = re.findall(r'"(\d+).*?items"', portion_of_script_tags)

# the three lists are expected to line up one-to-one, one entry per author
for author, author_link, num_artworks in zip(authors, author_links, number_of_artworks):
    author_results.append({
        "author": author,
        "author_link": author_link,
        "number_of_artworks": num_artworks
    })

print(json.dumps(author_results, indent=2, ensure_ascii=False))
```

Example output:

```json
[
  {
    "author": "Vincent van Gogh",
    "author_link": "https://artsandculture.google.com/entity/vincent-van-gogh/m07_m2?categoryId\\u003dartist",
    "number_of_artworks": "338"
  },
  {
    "author": "Claude Monet",
    "author_link": "https://artsandculture.google.com/entity/claude-monet/m01xnj?categoryId\\u003dartist",
    "number_of_artworks": "275"
  },
  {
    "author": "Paul Cézanne",
    "author_link": "https://artsandculture.google.com/entity/paul-cézanne/m063mx?categoryId\\u003dartist",
    "number_of_artworks": "301"
  },
  {
    "author": "Paul Gauguin",
    "author_link": "https://artsandculture.google.com/entity/paul-gauguin/m0h82x?categoryId\\u003dartist",
    "number_of_artworks": "380"
  },
  # ...
]
```
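The links in the output still contain the literal escape sequence `\u003d` (an escaped `=`; `json.dumps` displays the backslash doubled), because the regex captures the raw text of the inline JSON. One way to clean them up is to decode the captured text as the body of a JSON string. This is a sketch that assumes the captured link is a valid JSON string body (no unescaped quotes, which the regex already guarantees by stopping at `"`); the helper name `decode_json_escapes` is hypothetical.

```python
import json


def decode_json_escapes(raw):
    """Decode JSON string escapes (e.g. \\u003d -> '=') in text captured from inline JSON.

    Assumes `raw` is the body of a JSON string literal without the surrounding quotes.
    """
    return json.loads(f'"{raw}"')


# A link as captured by the regex: it contains a literal backslash followed by u003d
raw_link = "https://artsandculture.google.com/entity/vincent-van-gogh/m07_m2?categoryId\\u003dartist"
print(decode_json_escapes(raw_link))
# https://artsandculture.google.com/entity/vincent-van-gogh/m07_m2?categoryId=artist
```

In the main script, this could be applied inside the `author_links` list comprehension, for example `decode_json_escapes(f"https://artsandculture.google.com{link}")`.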