I am working on a seq2seq model, and I want to use the embedding layer shown in the Bonus FAQ of the Keras blog's seq2seq tutorial. Here is my code, where `num_encoder_tokens` is 67 and `num_decoder_tokens` is 11.

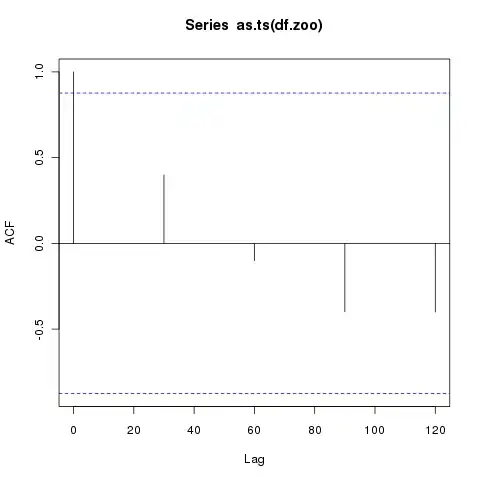

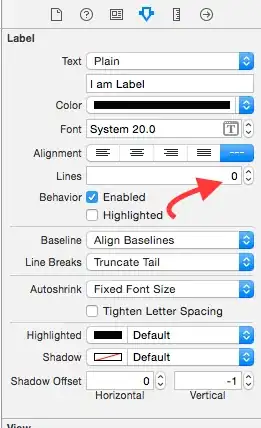

Running it produces the error shown in the figure.

Can anyone help me reshape either the output of the embedding layer or the input of the LSTM so that the two are compatible? I have no idea how to do it.
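A minimal NumPy sketch of what an embedding lookup does to tensor shapes may help frame the question. It assumes (this is not visible from the text of the question) that the error is a rank mismatch, such as the LSTM expecting a 3D input and receiving a 4D one. That is what happens when one-hot data of shape `(batch, timesteps, num_tokens)` is fed to an embedding intended for integer indices. NumPy indexing stands in for the Keras `Embedding` layer, which maps an input of shape `(batch, timesteps)` to `(batch, timesteps, output_dim)`; the batch size, sequence length, and embedding size below are hypothetical.

```python
import numpy as np

# Vocabulary size taken from the question; the other sizes are
# hypothetical values chosen only to illustrate shapes.
num_encoder_tokens = 67
latent_dim = 8          # hypothetical embedding size
batch_size, timesteps = 2, 5

rng = np.random.default_rng(0)

# An embedding layer is essentially a lookup table with one row per token.
embedding_table = rng.normal(size=(num_encoder_tokens, latent_dim))


def embed(indices):
    """Replace every integer index with its row from the table (adds one axis)."""
    return embedding_table[indices]


# Integer token indices: shape (batch, timesteps).
int_input = rng.integers(0, num_encoder_tokens, size=(batch_size, timesteps))
int_embedded = embed(int_input)
print(int_embedded.shape)  # (2, 5, 8): 3D, the rank an LSTM expects

# One-hot data: shape (batch, timesteps, num_tokens).
one_hot_input = np.eye(num_encoder_tokens, dtype=int)[int_input]
# Embedding the one-hot tensor treats every 0/1 entry as a token index,
# so the result gains a fourth axis.
one_hot_embedded = embed(one_hot_input)
print(one_hot_embedded.shape)  # (2, 5, 67, 8): 4D, one axis too many for an LSTM

# Recover integer indices from the one-hot axis before embedding.
recovered = one_hot_input.argmax(axis=-1)
print(embed(recovered).shape)  # (2, 5, 8)
```

If this is indeed the cause, feeding integer sequences (for example, the `argmax` over the one-hot axis) into an `Input(shape=(None,))` keeps the embedding output 3D, so no reshaping of the LSTM input should be needed.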