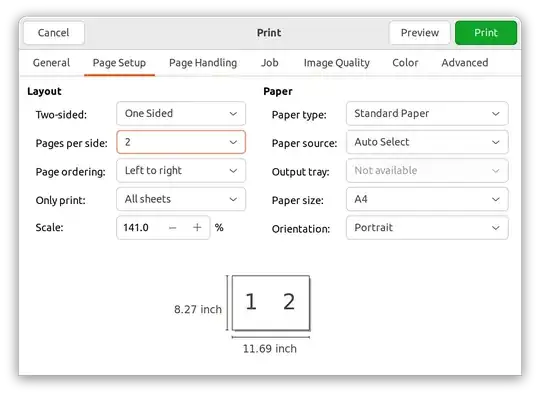

I am trying to correct image distortion with OpenCV. In theory, the distortion I am trying to correct is a combined barrel and pincushion distortion, like this:

I am not working with a normal camera here, but with a galvanometer scanning system (like this: http://www.chinagalvo.com/Content/uploads/2019361893/201912161511117936592.gif), so I cannot just record a checkerboard pattern like all the OpenCV guides suggest.

But I can move the scanner to a target position and measure the actual position of the laser beam in the image plane, which gives, for example, this plot:
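For reference, the size of the offsets between target and measured positions can also be summarized numerically, without going through images. This is only a minimal sketch, assuming the same measured values as in the script below (in mm); the names target, actual and error are illustrative and not part of the original script:

```python
import numpy as np

# Target grid from -4 mm to 4 mm in 2 mm steps; the centre point (0, 0)
# was not measured, so it is skipped (same ordering as in the script).
grid = [-4., -2., 0., 2., 4.]
target = np.array([(x, y) for y in grid for x in grid if (x, y) != (0., 0.)])

# Measured laser positions, copied from the script (mm).
actual_x = np.array([-4.21765834, -2.14708042, -0.07755157, 1.9910175, 4.05941744,
                     -4.17816164, -2.10614537, -0.03775821, 2.02883123, 4.09875409,
                     -4.13937186, -2.07079973, 2.07072068, 4.1377518,
                     -4.10200901, -2.03229052, 0.0367603, 2.10655379, 4.17627114,
                     -4.06449305, -1.99426964, 0.07737988, 2.14365487, 4.21625359])
actual_y = np.array([-4.04808315, -4.08681247, -4.12545265, -4.16807799, -4.20657896,
                     -1.98568911, -2.0217478, -2.06356789, -2.10326313, -2.14456442,
                     0.07567631, 0.03889721, -0.04043382, -0.08069954,
                     2.14054726, 2.09940048, 2.05965315, 2.02167639, 1.9800822,
                     4.20167787, 4.16215278, 4.12334605, 4.08099448, 4.04376011])
actual = np.column_stack([actual_x, actual_y])

# Per-point displacement (actual minus target) and its Euclidean length in mm.
residual = actual - target
error = np.linalg.norm(residual, axis=1)

print(f"mean error: {error.mean():.3f} mm")
print(f"max error:  {error.max():.3f} mm")
print(f"RMS error:  {np.sqrt(np.mean(error ** 2)):.3f} mm")
```

Running the same computation on the corrected points would give a direct number for how much of the offset a correction removes.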

So I put those values into OpenCV's calibrateCamera function in this script:

import numpy as np

import cv2

targetPosX = np.array([-4., -2., 0., 2., 4., -4., -2., 0., 2., 4., -4., -2., 2., 4., -4., -2., 0., 2., 4., -4., -2., 0., 2., 4.])

targetPosY = np.array([-4., -4., -4., -4., -4., -2., -2., -2., -2., -2., 0., 0., 0., 0., 2., 2., 2., 2., 2., 4., 4., 4., 4., 4.])

actualPosX = np.array([-4.21765834, -2.14708042, -0.07755157, 1.9910175, 4.05941744, -4.17816164, -2.10614537, -0.03775821, 2.02883123, 4.09875409, -4.13937186, -2.07079973, 2.07072068, 4.1377518, -4.10200901, -2.03229052, 0.0367603, 2.10655379, 4.17627114, -4.06449305, -1.99426964, 0.07737988, 2.14365487, 4.21625359])

actualPosY = np.array([-4.04808315, -4.08681247, -4.12545265, -4.16807799, -4.20657896, -1.98568911, -2.0217478, -2.06356789, -2.10326313, -2.14456442, 0.07567631, 0.03889721, -0.04043382, -0.08069954, 2.14054726, 2.09940048, 2.05965315, 2.02167639, 1.9800822, 4.20167787, 4.16215278, 4.12334605, 4.08099448, 4.04376011])

scale = 100 # px / mm

height = 9 * scale # range of measured points is -4 to 4mm --> show area from -4.5 to 4.5 with 100 px / mm

width = 9 * scale

def scale_and_shift(array, scl, shift):
    array *= scl
    array += shift
    return array

# shift recorded positions into the image coordinate system

targetPosX = scale_and_shift(targetPosX, scale, width / 2.)

targetPosY = scale_and_shift(targetPosY, scale, height / 2.)

actualPosX = scale_and_shift(actualPosX, scale, width / 2.)

actualPosY = scale_and_shift(actualPosY, scale, height / 2.)

# create images

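# use uint8 explicitly: np.full defaults to a platform integer type that OpenCV may not accept
target_image = np.full((height, width), 255, dtype=np.uint8)
combined_image = np.full((height, width), 255, dtype=np.uint8)
actual_image = np.full((height, width), 255, dtype=np.uint8)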

for i in range(len(targetPosX)):
    cv2.circle(target_image, (int(targetPosX[i]), int(targetPosY[i])), 20, 0, -1)
    # circle in combined image is target position, full point is actual position
    cv2.circle(combined_image, (int(targetPosX[i]), int(targetPosY[i])), 20, 0, 5)
    cv2.circle(combined_image, (int(actualPosX[i]), int(actualPosY[i])), 20, 0, -1)
    cv2.circle(actual_image, (int(actualPosX[i]), int(actualPosY[i])), 20, 0, -1)

cv2.imwrite("combined_before.png", combined_image)

# create point lists for calibrateCamera function. set 3rd dimension to zero.

targetPoints = np.array([np.vstack([targetPosX, targetPosY]).T]).astype("float32")

targetPoints_zero = np.array([np.vstack([targetPosX, targetPosY, list(np.zeros(len(targetPosX)))]).T]).astype("float32")

imagePoints = np.array([np.vstack([actualPosX, actualPosY]).T]).astype("float32")

imagePoints_zero = np.array([np.vstack([actualPosX, actualPosY, np.zeros(len(actualPosX))]).T]).astype("float32")

# read image to apply to

# saving and reading because just passing the actual_image somehow didn't work

cv2.imwrite("image.png", actual_image)

img = cv2.imread("image.png", cv2.IMREAD_GRAYSCALE)

h, w = img.shape[:2]

# calculate camera matrix and distortion coefficients
# note: imageSize is given as (width, height)
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(targetPoints_zero, imagePoints, (w, h), None, None)

# refine camera matrix to avoid cut-off (alpha = 1 keeps all source pixels)

newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mtx, dist, (w,h), 1, (w,h))

# undistort

dst = cv2.undistort(img, mtx, dist, None, newcameramtx)

cv2.imwrite('calibresult.png', dst)

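# absolute difference between undistorted and original image (avoids wrap-around on subtraction)
cv2.imwrite("correction.png", cv2.absdiff(dst, img))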

for i in range(len(targetPosX)):
    # circle in combined image is target position, full point is actual position
    cv2.circle(dst, (int(targetPosX[i]), int(targetPosY[i])), 20, 0, 5)

cv2.imwrite('combined_result.png', dst)

However, the result is not as expected: the corrected image does not line up with the target image (filled dots are the corrected actual points, circles are the target points):

Comparing before and after shows that only a minimal correction / distortion compensation was applied (this is the difference between the actual image before and after undistortion):

Is there any way I can tweak the calibrateCamera call to get a better result? Or is this just the wrong tool for the job?