I want to fit my data to a specific function that has already been optimized using Matlab.

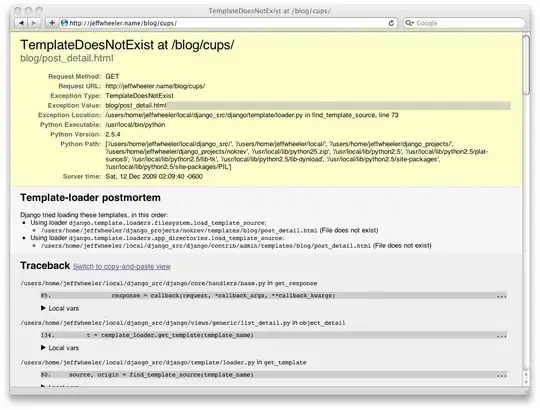

I get the following error:

'Warning message:

Computation failed in stat_smooth():

singular gradient '

Please help! Here is my R code:

tibble

x y SEM

1 1 0.0342 0.00532

2 3 0.0502 0.00639

3 5 0.0700 0.0118

4 10 0.123 0.0269

5 20 0.154 0.0125

6 30 0.203 0.0190

7 40 0.257 0.0255

8 50 0.287 0.0266

9 60 0.345 0.0347

10 90 0.442 0.0398

11 120 0.569 0.0570

12 180 0.726 0.0406

13 240 0.824 0.0150

14 360 0.868 0.00821

15 1440 0.890 0.0246

tibble %>%

ggplot(aes(x, y)) +

geom_point()+

geom_errorbar(aes(ymin=y-SEM, ymax=y+SEM), width=25)+

geom_ribbon(aes(ymin = y-2.575*SEM, ymax = y+2.575*SEM), alpha = 0.1)+

geom_smooth(method="nls",

formula= y ~ (1-((k2/(k2-k1))*exp(-k1*x))+((k1/(k2-k1))*exp(-k2*x))),

se=F,

method.args = list(start=list(k1=0.006999, k2=849.6)))