You can do in one line via several tricks.

1) Unique Index count in junction with casting as bool

Works in any case even when you do not have any other column besides index, columns and values. This code implies to count unique indices of each index-column intersection and returning 1 incase its more than 0 else 0.

df.reset_index().pivot_table(index=['cust_id','purchase_qtr'],

columns='purchase_item',

values='index',

aggfunc='nunique', fill_value=0)\

.astype(bool).astype(int)

2) Checking if any other column is not null

If you have other columns besides index, columns and values AND want to use them for intuition. Like purchase_date in your case. It is more intutive because you can "read" it like: Check per customer per quarter if the purchase date of the item is not null and parse them as integer.

df.pivot_table(index=['cust_id','purchase_qtr'],

columns='purchase_item',values='purchase_date',

aggfunc=lambda x: all(pd.notna(x)), fill_value=0)\

.astype(int)

3) Seeing len of elements falling in each index-column intersection

This seeslen of elements falling in each each index-column intersection and returning 1 incase its more than 0 else 0. Same intuitive approach:

df.pivot_table(index=['cust_id','purchase_qtr'],

columns='purchase_item',

values='purchase_date',

aggfunc=len, fill_value=0)\

.astype(bool).astype(int)

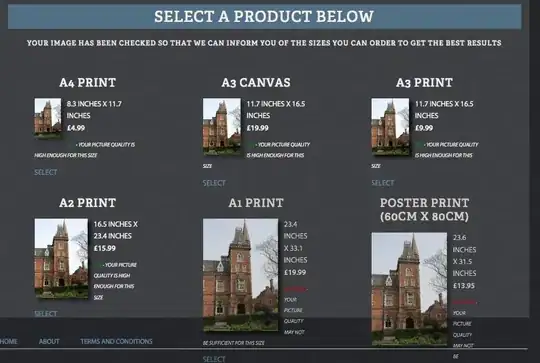

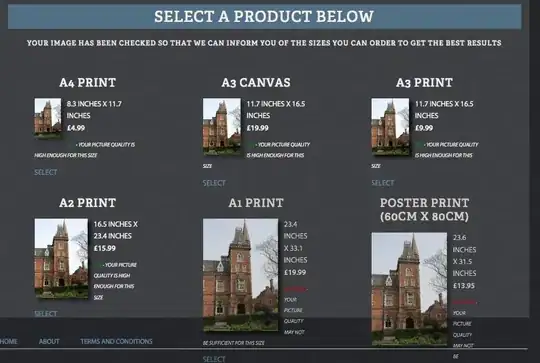

All return desired dataframe:

Note that you should be only using crosstab when you don't have a dataframe already as it calls pivot_table internally.