Of course it is "possible". There are several issues.

What is your requirement for the accuracy?

Are you willing to use higher order splines?

How much memory are you willing to spend on this? A piecewise linear function over small enough intervals will approximate the exponential function to any degree of accuracy needed, but the intervals may have to be VERY small, which means a large table.
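The standard error bound for linear interpolation gives a rough idea of how small "small enough" is. If L interpolates f at both ends of an interval of width h, then

```latex
\max_{x \in [a,\,a+h]} |f(x) - L(x)| \le \frac{h^2}{8} \max_{\xi \in [a,\,a+h]} |f''(\xi)|
```

For f = exp on [a, b] split into N equal segments, f'' = exp is largest at b, so a sufficient condition for a maximum error of at most ε is

```latex
\frac{(b-a)^2}{8N^2}\, e^{b} \le \varepsilon
\quad\Longleftrightarrow\quad
N \ge (b-a)\sqrt{\frac{e^{b}}{8\,\varepsilon}}
```

The table size therefore grows like 1/√ε: halving the tolerance costs about √2 times as many segments. The bound is sufficient rather than tight, so a direct numerical check may find that slightly fewer segments still work.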

Edit:

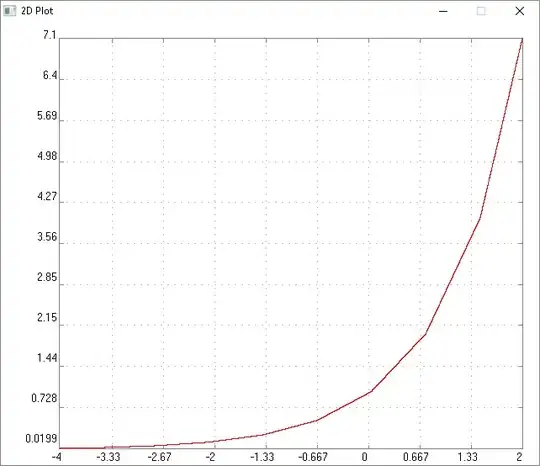

Given the additional information provided, I ran a quick test. Range reduction can always be used on the exponential function. Thus, if I wish to compute exp(x) for ANY x, then I can rewrite the problem in the form...

y = exp(xi + xf) = exp(xi)*exp(xf)

where xi is the integer part of x (taken as floor(x), so that xf is never negative) and xf is the fractional part, 0 <= xf < 1. The integer part is simple: write xi in binary, and repeated squarings and multiplications then compute exp(xi) in relatively few operations, at most about 2*log2(|xi|) multiplications. (Other tricks, such as using powers of 2 and other interval sizes, can give you yet more speed for the speed hungry.)
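A minimal Python sketch of this step follows. It assumes xi = floor(x), so xf stays in [0, 1) even for negative x; the function names `split` and `exp_int` are illustrative only.

```python
import math

def split(x):
    """Split x into an integer xi and a fraction xf with x == xi + xf and 0 <= xf < 1.
    floor (rather than truncation) keeps xf non-negative when x is negative."""
    xi = math.floor(x)
    return xi, x - xi

def exp_int(n):
    """e**n for an integer n, by square-and-multiply over the binary digits of |n|."""
    result = 1.0
    power = math.e       # holds e**(2**j) on the j-th pass through the loop
    k = abs(n)
    while k:
        if k & 1:        # bit j of |n| is set: fold e**(2**j) into the result
            result *= power
        power *= power   # e**(2**j) -> e**(2**(j+1))
        k >>= 1
    return 1.0 / result if n < 0 else result

xi, xf = split(2.75)
big_part = exp_int(xi)   # exp(2.75) = big_part * exp(xf)
```

Only exp(xf), with xf in [0, 1), is left for the spline.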

All that remains now is to compute exp(xf). Can we use a spline with linear segments to compute exp(xf) over the interval [0,1], with only 4 linear segments, to an accuracy of 0.005?

This last question is resolved by a function that I wrote a few years ago, which approximates a function with a spline of a given order to within a fixed tolerance on the maximum error. That code required 8 segments over the interval [0,1] to achieve the required tolerance with a piecewise linear spline. When I reduced the interval further, to [0,0.5], 4 segments were enough to achieve the prescribed tolerance.
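The function mentioned above is not shown, so what follows is only a hypothetical stand-in for it, not the original code. It places knots uniformly, interpolates exp at them, measures the maximum error on a dense grid, and increases the segment count until the tolerance is met. The original may place knots differently or fit in a least-squares or minimax sense, in which case it could need fewer segments. `max_interp_error` and `min_segments` are made-up names.

```python
import math

def max_interp_error(f, a, b, n, samples_per_seg=200):
    """Largest |f(x) - L(x)| over a dense grid, where L linearly
    interpolates f at n + 1 uniformly spaced knots on [a, b]."""
    h = (b - a) / n
    worst = 0.0
    for i in range(n):
        x0 = a + i * h
        y0, y1 = f(x0), f(x0 + h)
        for j in range(samples_per_seg + 1):
            t = j / samples_per_seg          # position within segment i, in [0, 1]
            approx = y0 + t * (y1 - y0)      # value of the linear piece
            worst = max(worst, abs(f(x0 + t * h) - approx))
    return worst

def min_segments(f, a, b, tol, max_n=1000):
    """Smallest number of uniform linear segments whose sampled max error is <= tol."""
    for n in range(1, max_n + 1):
        if max_interp_error(f, a, b, n) <= tol:
            return n
    raise ValueError("tolerance not reached within max_n segments")

print(min_segments(math.exp, 0.0, 1.0, 0.005))
print(min_segments(math.exp, 0.0, 0.5, 0.005))
```

With uniform knots and a tolerance of 0.005, this search gives 8 segments on [0,1] and 4 on [0,0.5], the same counts quoted above.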

So the answer is simple. If you are willing to do the range reductions needed to bring x into the interval [0,0.5], and then do the corresponding computations, then yes, you can achieve the requested accuracy with a linear spline of 4 segments.
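A minimal Python sketch of the whole scheme follows. The answer does not spell out how the reduction to [0,0.5] is done. As an assumption, this version writes x = k/2 + r with integer k and 0 <= r < 0.5, so that exp(x) = (√e)^k * exp(r). It then evaluates exp(r) from a hypothetical 4-segment linear table. Because exp(r) >= 1 on that interval, an absolute error of at most 0.005 there is also a relative error of at most 0.005, and that relative error carries over to the product.

```python
import math

# Hypothetical 4-segment table for exp on [0, 0.5]: knots at 0, 0.125, ..., 0.5.
N_SEG = 4
H = 0.5 / N_SEG
KNOTS = [math.exp(i * H) for i in range(N_SEG + 1)]  # filled once; hard-coded in practice
SQRT_E = math.exp(0.5)                               # likewise a stored constant in practice

def exp_table(r):
    """Linear interpolation of exp(r) from KNOTS, valid for 0 <= r < 0.5."""
    i = min(int(r / H), N_SEG - 1)   # segment index, clamped to the last segment
    t = (r - i * H) / H              # position inside the segment, in [0, 1]
    return KNOTS[i] + t * (KNOTS[i + 1] - KNOTS[i])

def approx_exp(x):
    """Range-reduce x = k/2 + r, then combine (sqrt(e))**k with the table value for exp(r)."""
    k = math.floor(2.0 * x)          # integer number of half-steps
    r = x - 0.5 * k                  # remainder, 0 <= r < 0.5
    # Any integer-power routine works here, e.g. square-and-multiply.
    return SQRT_E ** k * exp_table(r)

for x in (-3.2, 0.0, 0.3, 1.3, 7.9):
    print(x, approx_exp(x), math.exp(x))
```

math.exp appears here only to fill the table and to compare results; a real implementation would store KNOTS and SQRT_E as literal constants.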

In the end, though, you will always be better off using the built-in exponential function. All of the operations mentioned above will surely be slower than what your compiler's math library provides, IF exp(x) is available.