I'm trying to render a model of a hand in 3D space based on the positions given by the XrHandJointLocationEXT array from the XR_EXT_hand_tracking extension.

I am using both glTF hand models from Valve, which have the correct number of bones to match the joints defined by the OpenXR specification in the XrHandJointEXT enum.

I do my rendering as follows:

Every frame, I update each joint independently by multiplying its current transform with the inverse bind matrix retrieved from the glTF model. The XrPosef is relative to the origin of the 3D space. I am using cglm to handle all the matrix calculations.

    for (size_t i = 0; i < XR_HAND_JOINT_COUNT_EXT; ++i) {
        const XrHandJointLocationEXT *joint = &locations->jointLocations[i];
        const XrPosef *pose = &joint->pose;

        /* Start from identity, then apply the joint translation. */
        glm_mat4_identity(model->bones[i]);
        vec3 position;
        wxrc_xr_vector3f_to_cglm(&pose->position, position);
        glm_translate(model->bones[i], position);

        /* Apply the joint rotation after the translation. */
        versor orientation;
        wxrc_xr_quaternion_to_cglm(&pose->orientation, orientation);
        glm_quat_rotate(model->bones[i], orientation, model->bones[i]);

        /* Final bone matrix = joint transform * inverse bind matrix. */
        glm_mat4_mul(model->bones[i], model->inv_bind[i], model->bones[i]);
    }
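
To make the math explicit without cglm, here is a minimal plain-C sketch of what I understand the loop above to compute for one joint: a translation-times-rotation matrix built from the pose, multiplied by the inverse bind matrix. The types `vec3f`, `quatf`, `mat4f` and the helpers `pose_to_mat4f`, `rigid_inverse`, `mat4f_mul` and `skin_matrix` are hypothetical stand-ins written for this post, not part of cglm or OpenXR. It assumes column-major storage (as cglm uses) and a unit quaternion.

```c
#include <math.h>
#include <string.h>

/* Column-major 4x4 matrix, indexed m[column][row] (same layout as cglm). */
typedef float mat4f[4][4];

typedef struct { float x, y, z; } vec3f;    /* stand-in for XrVector3f */
typedef struct { float x, y, z, w; } quatf; /* stand-in for XrQuaternionf */

/* dest = a * b. Safe when dest aliases a or b. */
static void mat4f_mul(mat4f a, mat4f b, mat4f dest) {
    mat4f tmp;
    for (int c = 0; c < 4; ++c) {
        for (int r = 0; r < 4; ++r) {
            tmp[c][r] = 0.0f;
            for (int k = 0; k < 4; ++k)
                tmp[c][r] += a[k][r] * b[c][k];
        }
    }
    memcpy(dest, tmp, sizeof(mat4f));
}

/* dest = translate(p) * rotate(q), assuming q is a unit quaternion. */
static void pose_to_mat4f(vec3f p, quatf q, mat4f dest) {
    float xx = q.x * q.x, yy = q.y * q.y, zz = q.z * q.z;
    float xy = q.x * q.y, xz = q.x * q.z, yz = q.y * q.z;
    float wx = q.w * q.x, wy = q.w * q.y, wz = q.w * q.z;

    dest[0][0] = 1.0f - 2.0f * (yy + zz);
    dest[0][1] = 2.0f * (xy + wz);
    dest[0][2] = 2.0f * (xz - wy);
    dest[0][3] = 0.0f;

    dest[1][0] = 2.0f * (xy - wz);
    dest[1][1] = 1.0f - 2.0f * (xx + zz);
    dest[1][2] = 2.0f * (yz + wx);
    dest[1][3] = 0.0f;

    dest[2][0] = 2.0f * (xz + wy);
    dest[2][1] = 2.0f * (yz - wx);
    dest[2][2] = 1.0f - 2.0f * (xx + yy);
    dest[2][3] = 0.0f;

    dest[3][0] = p.x;
    dest[3][1] = p.y;
    dest[3][2] = p.z;
    dest[3][3] = 1.0f;
}

/* Inverse of a rigid transform [R | t]: [R^T | -R^T t]. */
static void rigid_inverse(mat4f m, mat4f dest) {
    mat4f tmp;
    for (int c = 0; c < 3; ++c)
        for (int r = 0; r < 3; ++r)
            tmp[c][r] = m[r][c];
    for (int r = 0; r < 3; ++r)
        tmp[3][r] = -(m[r][0] * m[3][0] + m[r][1] * m[3][1] + m[r][2] * m[3][2]);
    tmp[0][3] = tmp[1][3] = tmp[2][3] = 0.0f;
    tmp[3][3] = 1.0f;
    memcpy(dest, tmp, sizeof(mat4f));
}

/* Bone matrix for one joint: joint transform * inverse bind matrix. */
static void skin_matrix(vec3f p, quatf q, mat4f inv_bind, mat4f dest) {
    mat4f world;
    pose_to_mat4f(p, q, world);
    mat4f_mul(world, inv_bind, dest);
}
```

One sanity check this allows: if a joint's tracked pose equals its bind pose, `skin_matrix` should return (approximately) the identity, so the mesh around that joint stays where the model file put it.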

Then the bones array is uploaded to the vertex shader, along with the view-projection matrix, computed for each eye of the HMD.

    #version 320 es

    uniform mat4 vp;
    uniform mat4 bones[26];

    layout (location = 0) in vec3 pos;
    layout (location = 1) in vec2 tex_coord;
    layout (location = 2) in uvec4 joint;
    layout (location = 3) in vec4 weight;

    out vec2 vert_tex_coord;

    void main() {
        // Weighted blend of the four bone matrices influencing this vertex.
        mat4 skin =
            bones[joint.x] * weight.x +
            bones[joint.y] * weight.y +
            bones[joint.z] * weight.z +
            bones[joint.w] * weight.w;
        gl_Position = vp * skin * vec4(pos, 1.0);
        vert_tex_coord = tex_coord;
    }
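
For debugging, the blend done in the shader can be reproduced on the CPU for a single vertex. The following is a minimal sketch, assuming column-major matrices indexed `m[column][row]`. `mat4f` and `skin_vertex` are hypothetical names made up for this post, not part of cglm or OpenGL.

```c
#include <stddef.h>

/* Column-major 4x4 matrix, indexed m[column][row]. */
typedef float mat4f[4][4];

/*
 * CPU version of the shader blend for one vertex:
 * out = sum over i of weight[i] * bones[joint[i]] * (pos, 1).
 */
static void skin_vertex(mat4f *bones, const unsigned joint[4],
                        const float weight[4], const float pos[3],
                        float out[4]) {
    const float v[4] = { pos[0], pos[1], pos[2], 1.0f };

    for (int r = 0; r < 4; ++r)
        out[r] = 0.0f;

    for (size_t i = 0; i < 4; ++i) {
        for (int r = 0; r < 4; ++r) {
            float acc = 0.0f;
            for (int c = 0; c < 4; ++c)
                acc += bones[joint[i]][c][r] * v[c];
            out[r] += weight[i] * acc;
        }
    }
}
```

When every bone matrix has `(0, 0, 0, 1)` as its bottom row, `out[3]` equals the sum of the four weights, so a value other than 1 there would point at unnormalized weights in the vertex data rather than at the bone matrices.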

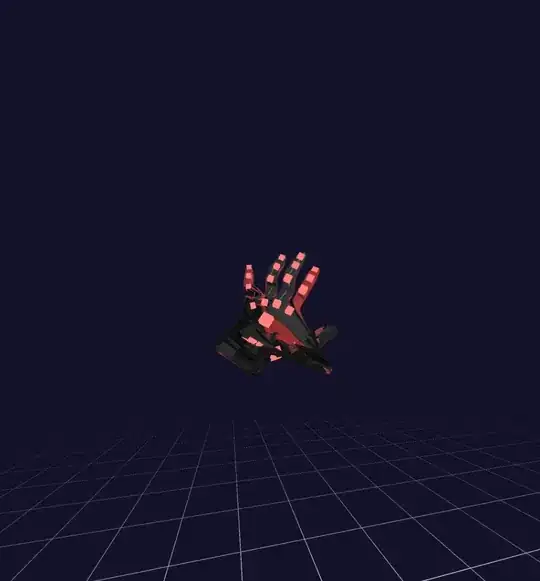

Is the method of calculation correct? I get decent but "glitchy" results. As you can see on the following screenshot, i rendered both independant joints on top of the hand model, and you can see a glitch on the thumb.

Should I take the parent bone into account when computing my bone transform?