I am working on an application that needs to Fourier transform batches of 2-dimensional signals, stored as single-precision complex floats.

I wanted to test whether splitting those signals into smaller ones improves the efficiency of my computation, given that the FLOP count of an FFT grows as O(N log(N)). Of course, different signal sizes (in memory) may achieve different FLOPS/s performance, so to see whether this idea can really work I ran some experiments.

What I observed was that performance varies very abruptly with the signal size, jumping for example from 60 Gflops/s to 300 Gflops/s! I am wondering why that is the case.

I ran the experiments using:

Compiler: g++ 9.3.0 ( -Ofast )

Intel MKL 2020 (static linking)

MKL-threading: GNU

OpenMP environment:

export OMP_PROC_BIND=close

export OMP_PLACES=cores

export OMP_NUM_THREADS=20

Platform:

Intel Xeon Gold 6248

Profiling tool:

Score-P 6.0

Performance results:

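To estimate the average FLOP rates, I assume: # of Flops = Nbatch * 5 * N * N * log_2(N * N), where N is the side length of each 2D signal and Nbatch is the number of signals in a batch.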
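For reference, here is a minimal sketch of how that estimate turns into a rate. The function names and the `seconds` parameter are hypothetical helpers, not part of MKL or Score-P, and the sketch assumes `seconds` is the measured wall-clock time for the whole batch.

```cpp
#include <cmath>
#include <cstddef>

// Estimated floating-point operations for a batch of n_batch complex 2D FFTs
// of size n x n, using the 5 * N * N * log2(N * N) model stated above.
// (Hypothetical helper name; the model is an estimate, not a measured count.)
double estimated_fft2d_flops(std::size_t n_batch, std::size_t n) {
    const double points = static_cast<double>(n) * static_cast<double>(n);
    return static_cast<double>(n_batch) * 5.0 * points * std::log2(points);
}

// Average rate in Gflops/s, assuming `seconds` is the measured wall-clock
// time spent transforming the whole batch (hypothetical helper).
double estimated_gflops_per_sec(std::size_t n_batch, std::size_t n, double seconds) {
    return estimated_fft2d_flops(n_batch, n) / seconds * 1e-9;
}
```

Calling `estimated_gflops_per_sec(n_batch, 201, elapsed)` with the measured time for the N = 201 batch would give the kind of figure quoted below. Because the count is a model rather than a hardware counter reading, the resulting rates are only comparable across sizes to the extent that the model holds for each N.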

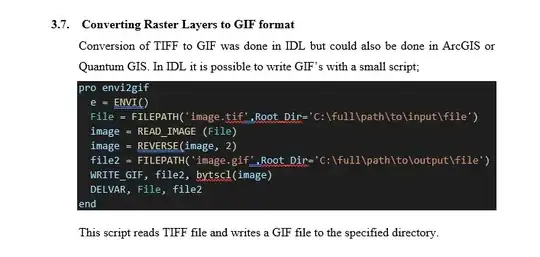

When using batches of 2D signals of size 201 x 201 elements (N = 201), the observed average performance was approximately 72 Gflops/s.

Then, I examined the performance using 2D signals with N = 101, 102, 103, 104 or 105. The performance results are shown in the figure below.

I also ran experiments with smaller sizes, such as N = 51, 52, 53, 54 or 55. The results are again shown below.

And finally, for N = 26, 27, 28, 29 or 30.

I ran the experiments twice and the performance results are consistent, so I really doubt it is noise. Still, achieving 350 Gflops/s seems quite unrealistic to me, or maybe it is not?

Has anyone experienced similar performance variations, or does anyone have comments on this?