I can suggest a way to reduce your equation to an integral equation, which can be solved numerically by approximating its kernel with a matrix, thereby reducing the integration to matrix multiplication.

First, it is clear that the equation can be integrated twice over x, first from 1 to x, and then from 0 to x, so that:
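The displayed equation seems to have been lost at this point. Judging from the code and the checks further down, the problem is presumably a second-order integro-differential equation with boundary data A(0) = a_0 and A'(1) = a'_1 (an assumption read off from `a0`, `aprime1` and the later checks, not something stated explicitly here). Under that assumption, the two integrations would give:

```latex
A''(x) = \int_0^1 K(x, x_1)\, A(x_1)\, dx_1, \qquad A(0) = a_0, \quad A'(1) = a'_1
\;\;\Longrightarrow\;\;
A(x) = a_0 + a'_1\, x + \int_0^1 \mathcal{K}(x, x_1)\, A(x_1)\, dx_1,
\qquad
\mathcal{K}(x, x_1) = \int_0^x dz \int_1^z dy\, K(y, x_1)
```

Here the symbol 𝒦 stands for the doubly integrated kernel (`intIntK` in the code). Differentiating once and setting x = 1 recovers A'(1) = a'_1, and setting x = 0 recovers A(0) = a_0.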

We can now discretize this equation by putting it on an equidistant grid:
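The discretized equation is also missing here. Reading it back from `computeDiscreteKernelMatrixAndRHS` below, it would presumably look as follows, where Δ is the grid spacing (`delta`), x_i are the grid points, A_i = A(x_i), and every grid point gets the same weight Δ (a simple rectangle-type rule; the notation is an assumption, not taken from the original):

```latex
A_i = a_0 + a'_1\, x_i + \Delta \sum_{j} \mathcal{K}(x_i, x_j)\, A_j
\quad\Longleftrightarrow\quad
\sum_{j} \left( \delta_{ij} - \Delta\, \mathcal{K}(x_i, x_j) \right) A_j = a_0 + a'_1\, x_i
```

The matrix in parentheses corresponds to `IdentityMatrix[Length[grid]] - delta*Outer[intkernel, grid, grid]`, and the right-hand side to `a0 + aprime1*grid`.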

Here, A[x] becomes a vector and the integrated kernel intIntK becomes a matrix, while the integration is replaced by matrix multiplication. The problem is thereby reduced to a system of linear equations.

The easiest case (the one I will consider here) is when the integrated kernel intIntK can be derived analytically; in this case the method is quite fast. Here is a function that produces the integrated kernel as a pure function:

    Clear[computeDoubleIntK]
    computeDoubleIntK[kernelF_] :=
      Block[{x, x1},
        Function[
          Evaluate[
            (* inner integral over y from 1 to x, then outer integral from 0 to x *)
            Integrate[
              Integrate[kernelF[y, x1], {y, 1, x}] /. x -> y, {y, 0, x}] /.
             {x -> #1, x1 -> #2}]]];

In our case:

    In[99]:= K[x_,x1_]:=1;

    In[100]:= kernel = computeDoubleIntK[K]
    Out[100]= -#1+#1^2/2&

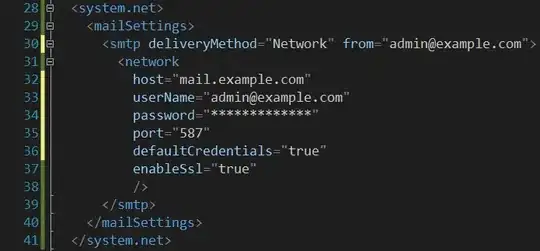

Here is the function to produce the kernel matrix and the r.h.s. vector:

    computeDiscreteKernelMatrixAndRHS[intkernel_, a0_, aprime1_, delta_, interval : {_, _}] :=
      Module[{grid, rhs, matrix},
        grid = Range[Sequence @@ interval, delta];
        rhs = a0 + aprime1*grid; (* constant plus a linear term *)
        matrix =
          IdentityMatrix[Length[grid]] - delta*Outer[intkernel, grid, grid];
        {matrix, rhs}]

To give a very rough idea of what this looks like (here I use delta = 1/2):

    In[101]:= computeDiscreteKernelMatrixAndRHS[kernel,0,1,1/2,{0,1}]
    Out[101]= {{{1,0,0},{3/16,19/16,3/16},{1/4,1/4,5/4}},{0,1/2,1}}

We now need to solve the linear system and interpolate the result, which is done by the following function:

    Clear[computeSolution];
    computeSolution[intkernel_, a0_, aprime1_, delta_, interval : {_, _}] :=
      With[{grid = Range[Sequence @@ interval, delta]},
        Interpolation@Transpose[{
          grid,
          LinearSolve @@
            computeDiscreteKernelMatrixAndRHS[intkernel, a0, aprime1, delta, interval]
        }]]

Here I will call it with delta = 0.1:

    In[90]:= solA = computeSolution[kernel,0,1,0.1,{0,1}]
    Out[90]= InterpolatingFunction[{{0.,1.}},<>]

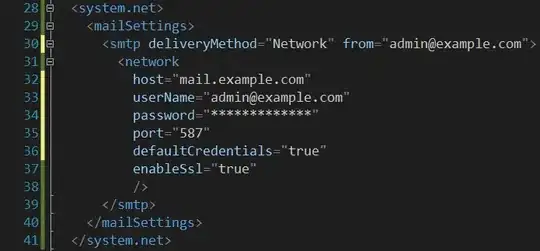

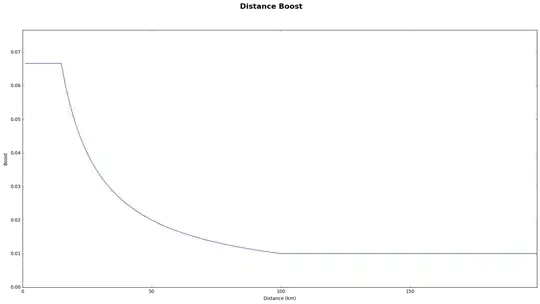

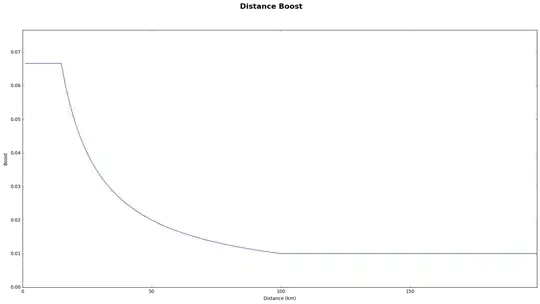

We now plot the result vs. the exact analytical solution found by @Sasha, as well as the error:
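The plotting code itself is not shown here. As a rough sketch of how such a comparison could be made: assuming the problem is A''[x] == Integrate[A[x1], {x1, 0, 1}] with A[0] == 0 and A'[1] == 1 (the K = 1 case used above), a closed form can be worked out by hand as 5 x/8 + 3 x^2/16. The name `exactA` is hypothetical, and this expression may be written differently from the solution referred to above.

```mathematica
(* Closed form derived by hand for K = 1, A[0] == 0, A'[1] == 1 (an assumption here) *)
exactA[x_] := 5 x/8 + 3 x^2/16;

(* Boundary values and the residual A''[x] - Integrate[A[x1], {x1, 0, 1}] *)
{exactA[0], exactA'[1],
 Simplify[exactA''[x] - Integrate[exactA[x1], {x1, 0, 1}]]}

(* Comparison plots; solA is assumed to be the InterpolatingFunction computed above *)
Plot[{solA[x], exactA[x]}, {x, 0, 1}, PlotLegends -> {"numerical", "exact"}]
Plot[solA[x] - exactA[x], {x, 0, 1}, PlotRange -> All]
```

The first expression is only a sanity check of the hand-derived formula; the two `Plot` calls need `solA` to be defined in the session.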

I intentionally chose delta large enough for the errors to be visible. If you choose a delta of, say, 0.01, the plots will be visually identical. Of course, the price of a smaller delta is the need to produce and solve larger matrices.

For kernels that can be integrated analytically, the main bottleneck is LinearSolve, which in practice is pretty fast for matrices that are not too large. When the kernel cannot be integrated analytically, the main bottleneck is computing the kernel at many points (matrix creation). Matrix inversion has a higher asymptotic complexity, but this only starts to play a role for really large matrices, which are not necessary in this approach, since it can be combined with an iterative one (see below). In that case you will typically define:

    intK[x_?NumericQ, x1_?NumericQ] := NIntegrate[K[y, x1], {y, 1, x}]
    intIntK[x_?NumericQ, x1_?NumericQ] := NIntegrate[intK[z, x1], {z, 0, x}]

As a way to speed things up in such cases, you can precompute the kernel intK on a grid and then interpolate, and do the same for intIntK. This will, however, introduce additional errors, which you will have to estimate and account for.
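A minimal sketch of the precompute-and-interpolate idea, under stated assumptions: `sampleK` is a made-up kernel used only to make the example runnable, the tabulation step 0.1 is arbitrary, and the names `intKNum`, `intKTable`, `intKInterp` and `intIntKNum` are hypothetical.

```mathematica
(* Hypothetical sample kernel, used only to make the sketch runnable *)
sampleK[y_, x1_] := Exp[-(y - x1)^2];

(* Inner integral, evaluated numerically point by point *)
intKNum[x_?NumericQ, x1_?NumericQ] := NIntegrate[sampleK[y, x1], {y, 1, x}];

(* Tabulate on a coarse grid, then interpolate in both arguments *)
step = 0.1;
intKTable = Table[intKNum[x, x1], {x, 0, 1, step}, {x1, 0, 1, step}];
intKInterp = ListInterpolation[intKTable, {{0, 1}, {0, 1}}];

(* The outer integral can then use the cheap interpolant instead of nested NIntegrate *)
intIntKNum[x_?NumericQ, x1_?NumericQ] := NIntegrate[intKInterp[z, x1], {z, 0, x}];

(* Spot check of the interpolation error at an off-grid point *)
Abs[intKInterp[0.33, 0.71] - intKNum[0.33, 0.71]]
```

A spot check like the last line is only a crude indication of the extra error introduced by the interpolation; it does not replace a proper estimate.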

The grid itself need not be equidistant (I used one just for simplicity); it may, and probably should, be adaptive and generally non-uniform.
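As an illustration of what a non-uniform variant might look like, here is a sketch that replaces the constant weight delta by trapezoidal weights computed from an arbitrary increasing grid. This is a swapped-in quadrature rule, not the scheme used above, and the function name `computeNonUniformMatrixAndRHS` and the particular grid are hypothetical.

```mathematica
Clear[computeNonUniformMatrixAndRHS];
computeNonUniformMatrixAndRHS[intkernel_, a0_, aprime1_, grid_List] :=
  Module[{h, weights, rhs, matrix},
    h = Differences[grid];
    (* trapezoidal weights: half of each neighbouring interval *)
    weights = (Append[h, 0] + Prepend[h, 0])/2;
    rhs = a0 + aprime1*grid;
    (* column j of the kernel matrix is scaled by weights[[j]] *)
    matrix = IdentityMatrix[Length[grid]] -
      Outer[intkernel, grid, grid] . DiagonalMatrix[weights];
    {matrix, rhs}];

(* Usage with the integrated kernel for K = 1 (same as Out[100]) on a hand-picked grid *)
kernelOne = -#1 + #1^2/2 &;
nuGrid = {0, 0.05, 0.1, 0.2, 0.35, 0.55, 0.8, 1};
solNU = Interpolation[Transpose[{nuGrid,
     LinearSolve @@ computeNonUniformMatrixAndRHS[kernelOne, 0, 1, nuGrid]}]];
```

An adaptive scheme would additionally have to decide where to place the grid points; that part is not attempted here.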

As a final illustration, consider an equation with a non-trivial but symbolically integrable kernel:

    In[146]:= sinkern = computeDoubleIntK[50*Sin[Pi/2*(#1-#2)]&]
    Out[146]= (100 (2 Sin[1/2 \[Pi] (-#1+#2)]+Sin[(\[Pi] #2)/2] (-2+\[Pi] #1)))/\[Pi]^2&

    In[157]:= solSin = computeSolution[sinkern,0,1,0.01,{0,1}]
    Out[157]= InterpolatingFunction[{{0.,1.}},<>]

Here are some checks:

    In[163]:= Chop[{solSin[0],solSin'[1]}]
    Out[163]= {0,1.}

    In[153]:= diff[x_?NumericQ]:=
      solSin''[x] - NIntegrate[50*Sin[Pi/2*(#1-#2)]&[x,x1]*solSin[x1],{x1,0,1}];

    In[162]:= diff/@Range[0,1,0.1]
    Out[162]= {-0.0675775,-0.0654974,-0.0632056,-0.0593575,-0.0540479,-0.0474074,
      -0.0395995,-0.0308166,-0.0212749,-0.0112093,0.000369261}

To conclude, I want to stress that this method requires a careful error analysis, which I did not do.

EDIT

You can also use this method to get an initial approximate solution and then improve it iteratively using FixedPoint or other means. This way you should get relatively fast convergence and be able to reach the required precision without having to construct and solve huge matrices.
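One possible reading of this suggestion, sketched for the K = 1 example under stated assumptions: iterate the integral equation A -> rhs + (weighted kernel matrix).A on a finer grid, so that each sweep needs only a matrix-vector product and no linear solve. The trapezoidal weights, the grid spacing 0.01, the tolerance and all names below are illustrative choices, not taken from the original. Such a plain iteration converges only if the map is a contraction; for this particular kernel the relevant eigenvalue is roughly -1/3, but for strongly coupled kernels it may fail to converge.

```mathematica
kernelOne = -#1 + #1^2/2 &;                 (* integrated kernel for K = 1 *)
fineGrid = N[Range[0, 1, 1/100]];

(* trapezoidal weights on the uniform fine grid *)
weights = ConstantArray[0.01, Length[fineGrid]];
weights[[{1, -1}]] = 0.005;

kmat = Outer[kernelOne, fineGrid, fineGrid] . DiagonalMatrix[weights];
rhs = 0 + 1*fineGrid;                       (* a0 = 0, aprime1 = 1 *)

(* Starting guess: here simply the rhs; a coarse solution sampled on fineGrid,
   e.g. solA /@ fineGrid, could be used instead if it is available *)
start = rhs;

refined = FixedPoint[rhs + kmat . # &, start, 200,
   SameTest -> (Max[Abs[#1 - #2]] < 10^-10 &)];

solRefined = Interpolation[Transpose[{fineGrid, refined}]];
```

Starting from a coarse-grid solution instead of `rhs` should reduce the number of sweeps, which is presumably the point of combining the two approaches.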