I'm using S3 Batch Operations to invoke a Lambda function that loads a large amount of data into Elasticsearch. While I experiment to find the right level of concurrency, reserved concurrency on the function is set to 1.

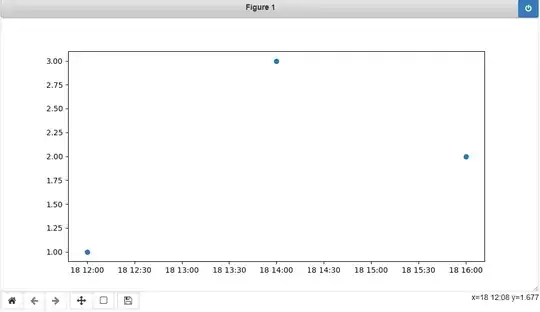
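For context, here is a minimal sketch of a handler of this kind. It assumes the S3 Batch Operations invocation schema version 1.0, where each task carries `taskId`, `s3Key`, and `s3BucketArn`. `load_into_elasticsearch` is a hypothetical placeholder, not my actual loading code.

```python
from urllib.parse import unquote


def load_into_elasticsearch(bucket, key):
    """Hypothetical placeholder: fetch s3://bucket/key and bulk-index it."""
    return f"indexed s3://{bucket}/{key}"


def lambda_handler(event, context):
    results = []
    for task in event["tasks"]:
        # Bucket ARNs look like "arn:aws:s3:::my-bucket"; keep only the name.
        bucket = task["s3BucketArn"].split(":::")[-1]
        # Object keys arrive URL-encoded in the invocation event.
        key = unquote(task["s3Key"])
        try:
            message = load_into_elasticsearch(bucket, key)
            code = "Succeeded"
        except Exception as exc:
            # TemporaryFailure asks S3 Batch Operations to retry this task.
            code = "TemporaryFailure"
            message = str(exc)
        results.append(
            {"taskId": task["taskId"], "resultCode": code, "resultString": message}
        )
    # The response must echo the schema version and invocation ID.
    return {
        "invocationSchemaVersion": event["invocationSchemaVersion"],
        "treatMissingKeysAs": "PermanentFailure",
        "invocationId": event["invocationId"],
        "results": results,
    }
```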

Strangely, when testing, I see S3 invoke the function a few times back-to-back and then make no more invocations for about 5 minutes. For example:

Any ideas on how to avoid this delay? No other batch operations are running on the account, and the job priority is set to 10. Could it have something to do with the reserved concurrency value? According to the documentation, though:

> When the job runs, Amazon S3 starts multiple function instances to process the Amazon S3 objects in parallel, up to the concurrency limit of the function. Amazon S3 limits the initial ramp-up of instances to avoid excess cost for smaller jobs.
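One thing I'm trying to rule out is throttling. I haven't confirmed this, but my hypothesis is that with reserved concurrency at 1, any parallel invocations S3 attempts beyond the first get throttled, and S3 may wait before retrying them. A rough way to check is to sum the function's `Throttles` metric in CloudWatch over the test window. The sketch below takes the client as a parameter so it can be called as `count_throttles(boto3.client("cloudwatch"), "my-loader-function")`. The function name, the one-hour window, and the 60-second period are all assumptions.

```python
from datetime import datetime, timedelta, timezone


def count_throttles(cloudwatch, function_name, hours=1):
    """Sum throttled invocations of a Lambda function over the last `hours`."""
    end = datetime.now(timezone.utc)
    start = end - timedelta(hours=hours)
    resp = cloudwatch.get_metric_statistics(
        Namespace="AWS/Lambda",
        MetricName="Throttles",
        Dimensions=[{"Name": "FunctionName", "Value": function_name}],
        StartTime=start,
        EndTime=end,
        Period=60,  # one datapoint per minute
        Statistics=["Sum"],
    )
    # A non-zero total means some invocations were rejected by the concurrency limit.
    return sum(dp["Sum"] for dp in resp["Datapoints"])
```

If this returns a non-zero count during the quiet periods, the gaps would line up with throttling rather than with the ramp-up behavior described in the documentation.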

Thank you