The final result is sorted on the column 'timestamp'. I have two scripts that differ only in one value written to the column 'record_status' ('old' vs. 'older'). Since the data is sorted on 'timestamp', the resulting order should be identical. However, the order is different. It looks like in the first case the sort is performed before the union, while in the second it is performed after it.

Using orderBy instead of sort doesn't make any difference.
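To make the two orderings concrete, here is a plain-Python sketch (not Spark) of what "sort after the union" and "sort before the union" would each produce. The rows and timestamps are made up for illustration, and the helper name `by_timestamp_desc` is hypothetical; this only shows the shape of the two outcomes and does not reproduce the behaviour seen in the builds.

```python
from datetime import datetime, timedelta

# Made-up rows standing in for the 'previous' output and the newly added record.
t0 = datetime(2021, 1, 1, 12, 0, 0)
previous = [
    {"c1": "1", "timestamp": t0 + timedelta(minutes=i), "record_status": "old"}
    for i in range(3)
]
new_row = {"c1": "1", "timestamp": t0 + timedelta(minutes=3), "record_status": "new"}


def by_timestamp_desc(rows):
    """Return rows ordered newest first, like sort('timestamp', ascending=False)."""
    return sorted(rows, key=lambda r: r["timestamp"], reverse=True)


# Expected: union first, then sort the combined rows.
sort_after_union = by_timestamp_desc(previous + [new_row])

# Suspected: only the previous rows are sorted, then the new row is appended.
sort_before_union = by_timestamp_desc(previous) + [new_row]

print([r["record_status"] for r in sort_after_union])   # ['new', 'old', 'old', 'old']
print([r["record_status"] for r in sort_before_union])  # ['old', 'old', 'old', 'new']
```

In the first list the newest record leads, which is what a descending sort over the whole union should give; in the second the newest record ends up last even though the older rows are correctly ordered among themselves.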

Why is this happening, and how can I prevent it? (I use Spark 3.0.2)

Script1 (full) - result after 4 runs (builds):

    from transforms.api import transform, Output, incremental
    from pyspark.sql import functions as F, types as T


    @incremental(
        require_incremental=True,
    )
    @transform(
        out=Output("ri.foundry.main.dataset.a82be5aa-81f7-45cf-8c59-05912c8ed6c7"),
    )
    def compute(out, ctx):
        out_schema = T.StructType([
            T.StructField('c1', T.StringType()),
            T.StructField('timestamp', T.TimestampType()),
            T.StructField('record_status', T.StringType()),
        ])
        df_out = (
            out.dataframe('previous', out_schema)
            .withColumn('record_status', F.lit('older'))
        )
        df_upd = (
            ctx.spark_session.createDataFrame([('1',)], ['c1'])
            .withColumn('timestamp', F.current_timestamp())
            .withColumn('record_status', F.lit('new'))
        )
        df = df_out.unionByName(df_upd)
        df = df.sort('timestamp', ascending=False)
        out.set_mode('replace')
        out.write_dataframe(df)

Script2 (full) - result after 4 runs (builds):

    from transforms.api import transform, Output, incremental
    from pyspark.sql import functions as F, types as T


    @incremental(
        require_incremental=True,
    )
    @transform(
        out=Output("ri.foundry.main.dataset.caee8f7a-64b0-4837-b4f3-d5a6d5dedd85"),
    )
    def compute(out, ctx):
        out_schema = T.StructType([
            T.StructField('c1', T.StringType()),
            T.StructField('timestamp', T.TimestampType()),
            T.StructField('record_status', T.StringType()),
        ])
        df_out = (
            out.dataframe('previous', out_schema)
            .withColumn('record_status', F.lit('old'))
        )
        df_upd = (
            ctx.spark_session.createDataFrame([('1',)], ['c1'])
            .withColumn('timestamp', F.current_timestamp())
            .withColumn('record_status', F.lit('new'))
        )
        df = df_out.unionByName(df_upd)
        df = df.sort('timestamp', ascending=False)
        out.set_mode('replace')
        out.write_dataframe(df)

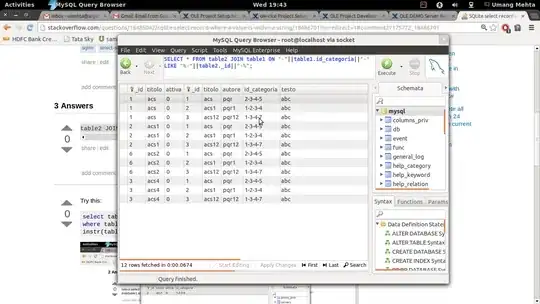

The query plans of both transformations show that the sort must be performed after the union. Inspecting the logical and physical plans, I see no differences except for IDs and RIDs; all the transformation steps are in the same place.
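The manual comparison can also be done mechanically. Below is a minimal sketch that masks expression IDs and dataset RIDs in two plan texts and reports the lines that still differ. It assumes the plans are available as plain strings (for example, copied from the build output); `normalize_plan` and `plan_diff` are hypothetical helper names, and the two sample plans are hand-written stand-ins that only imitate the shape of Spark plan text, not real output from these builds.

```python
import difflib
import re

# Spark expression IDs look like `timestamp#12` or `count#34L`.
EXPR_ID = re.compile(r"#\d+L?")
# Foundry dataset RIDs in the form used by the two scripts.
DATASET_RID = re.compile(r"ri\.foundry\.main\.dataset\.[0-9a-f-]{36}")


def normalize_plan(plan_text):
    """Mask run-specific identifiers so two plans can be compared line by line."""
    masked = EXPR_ID.sub("#N", plan_text)
    return DATASET_RID.sub("<RID>", masked)


def plan_diff(plan_a, plan_b):
    """Return the lines that still differ after masking IDs and RIDs."""
    a = normalize_plan(plan_a).splitlines()
    b = normalize_plan(plan_b).splitlines()
    # ndiff prefixes removed lines with "- " and added lines with "+ ".
    return [line for line in difflib.ndiff(a, b) if line.startswith(("- ", "+ "))]


# Hand-written placeholder plans (assumption: NOT real Spark output).
plan_1 = """Sort [timestamp#12 DESC NULLS LAST], true
+- Union
   :- Project [c1#1, timestamp#2, older AS record_status#7]
   :  +- Relation ri.foundry.main.dataset.a82be5aa-81f7-45cf-8c59-05912c8ed6c7
   +- Project [c1#20, current_timestamp() AS timestamp#22, new AS record_status#25]"""

plan_2 = """Sort [timestamp#112 DESC NULLS LAST], true
+- Union
   :- Project [c1#101, timestamp#102, old AS record_status#107]
   :  +- Relation ri.foundry.main.dataset.caee8f7a-64b0-4837-b4f3-d5a6d5dedd85
   +- Project [c1#120, current_timestamp() AS timestamp#122, new AS record_status#125]"""

remaining = plan_diff(plan_1, plan_2)
for line in remaining:
    print(line)
```

With these placeholder plans, the only lines left after masking are the two `Project` lines carrying the 'older' and 'old' literals, which matches the observation that the plans are otherwise identical.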

Observation:

With the following profile, the sort works as expected (the query plans don't change):

    @configure(["KUBERNETES_NO_EXECUTORS_SMALL"])