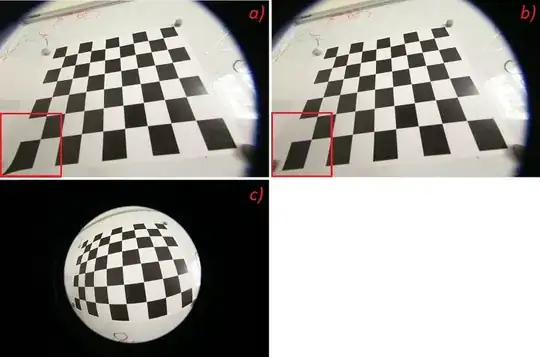

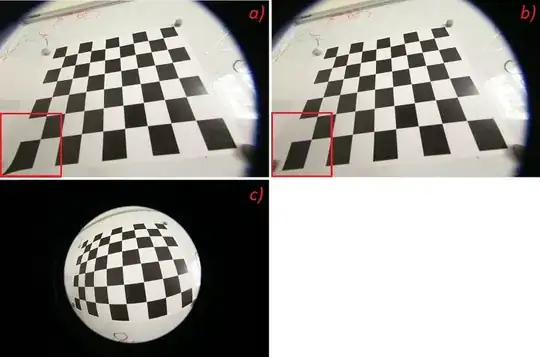

The image looks like it was taken with a wide-angle lens, not a fisheye lens. Images from a fisheye camera usually have a black circular border and look as if you were seeing through a round hole. See picture c) below (from the OpenCV docs):

The usual way to distinguish a wide-angle lens from a fisheye lens is to check the field-of-view (FOV) angle.

Given the camera intrinsic parameters (the `cameraMatrix` and `distCoeffs` from the calibration routine), you can compute a new camera intrinsic matrix with the largest FOV and no distortion by calling `getOptimalNewCameraMatrix()` with `alpha=1`. The FOV angle in the x-direction (usually larger than in the y-direction) is then `arctan(cx/fx) + arctan((width - cx)/fx)`, where `fx` is the focal length in the x-direction, `cx` is the x-coordinate of the principal point (both taken from the new matrix), and `width` is the image width.
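As a rough illustration, the formula can be written as a small Python helper. It assumes `K` is the 3x3 matrix returned by `getOptimalNewCameraMatrix()` (for example `new_K, roi = cv2.getOptimalNewCameraMatrix(cameraMatrix, distCoeffs, (width, height), 1)`). The name `fov_degrees` is hypothetical, and the intrinsics in the example are made up. The helper only indexes `K[row][col]`, so a NumPy array or a nested list both work.

```python
import math


def fov_degrees(K, width, height):
    """Return (fov_x, fov_y) in degrees for an undistorted pinhole camera matrix K.

    K is a 3x3 intrinsic matrix [[fx, 0, cx], [0, fy, cy], [0, 0, 1]],
    e.g. the new matrix returned by cv2.getOptimalNewCameraMatrix(..., alpha=1).
    """
    fx, cx = K[0][0], K[0][2]
    fy, cy = K[1][1], K[1][2]
    # Angle from the optical axis to the left edge plus angle to the right edge.
    fov_x = math.atan(cx / fx) + math.atan((width - cx) / fx)
    # Same idea for the top and bottom edges.
    fov_y = math.atan(cy / fy) + math.atan((height - cy) / fy)
    return math.degrees(fov_x), math.degrees(fov_y)


# Made-up intrinsics for a 640x480 image with the principal point at the center.
K = [[500.0, 0.0, 320.0],
     [0.0, 500.0, 240.0],
     [0.0, 0.0, 1.0]]
print(fov_degrees(K, 640, 480))
```

Summing two `arctan` terms instead of computing `2 * arctan(width / (2 * fx))` keeps the formula correct when the principal point is not exactly at the image center.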

In my experience, the FOV determines which distortion model to use:

- FOV < 80°: the Brown model (k1, k2, k3, p1, p2).
- 80° < FOV < 140°: the Rational model (k1 to k6, p1, p2).
- 140° < FOV < 170°: the Fisheye model (k1, k2, k3, k4).

A more complex model (with more parameters) fits the distortion better, but it also makes the calibration harder.
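The rule of thumb above could be encoded as a simple lookup like the following sketch. The thresholds come from this answer; the function name and the returned hints are illustrative. The OpenCV entry points named in the hints (`cv2.calibrateCamera`, its `cv2.CALIB_RATIONAL_MODEL` flag, and `cv2.fisheye.calibrate`) exist, but check the documentation for your OpenCV version. Above 170° the rule of thumb makes no recommendation, so the sketch returns `None` there.

```python
def suggest_distortion_model(fov_deg):
    """Map a horizontal FOV in degrees to a distortion model (rule of thumb).

    Returns (model_name, coefficient_names, opencv_hint), or None if the FOV
    is outside the ranges the rule of thumb covers.
    Coefficient names only; OpenCV stores them as (k1, k2, p1, p2, k3, ...).
    """
    if fov_deg < 80:
        # Brown model: 3 radial + 2 tangential coefficients (OpenCV's default set).
        return ("Brown", ["k1", "k2", "k3", "p1", "p2"],
                "cv2.calibrateCamera with default flags")
    if fov_deg < 140:
        # Rational model: radial numerator k1..k3, denominator k4..k6, plus tangential.
        return ("Rational", ["k1", "k2", "k3", "k4", "k5", "k6", "p1", "p2"],
                "cv2.calibrateCamera with flags=cv2.CALIB_RATIONAL_MODEL")
    if fov_deg < 170:
        # Fisheye model: 4 coefficients on the incidence angle, no tangential terms.
        return ("Fisheye", ["k1", "k2", "k3", "k4"], "cv2.fisheye.calibrate")
    return None  # no recommendation above 170 degrees


for fov in (65.0, 110.0, 150.0, 185.0):
    print(fov, suggest_distortion_model(fov))
```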

The Fisheye model performs better than the Rational model at very large FOVs because it has higher-order radial terms. When the FOV is not very large, however, the Rational model is the better choice because it also has tangential terms.
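The two models as documented by OpenCV (thin-prism and tilt terms omitted) make this trade-off visible. With normalized image coordinates $(x', y')$ and $r^2 = x'^2 + y'^2$, the Rational model is a ratio of polynomials in $r$ plus tangential terms:

$$
\begin{aligned}
x'' &= x'\,\frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + 2 p_1 x' y' + p_2\,(r^2 + 2x'^2) \\
y'' &= y'\,\frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + p_1\,(r^2 + 2y'^2) + 2 p_2 x' y'
\end{aligned}
$$

The Fisheye model instead uses a polynomial in the incidence angle $\theta$ and has no tangential terms:

$$
\begin{aligned}
\theta &= \arctan r, \qquad \theta_d = \theta\,(1 + k_1\theta^2 + k_2\theta^4 + k_3\theta^6 + k_4\theta^8) \\
x'' &= \frac{\theta_d}{r}\,x', \qquad y'' = \frac{\theta_d}{r}\,y'
\end{aligned}
$$

Setting $k_4 = k_5 = k_6 = 0$ in the first model gives the Brown model. One way to see why the Fisheye model copes better with very wide angles: $r = \tan\theta$ grows without bound as $\theta$ approaches 90°, while the Fisheye polynomial stays in terms of the bounded angle $\theta$.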

The undistorted picture you provided looks fairly good. But if you care more about precision, you should check the reprojection error, the epipolar error (for multi-camera setups), and even the collinearity error of the key points (checkerboard corners, circle centroids, or whatever features your calibration pattern provides) during the calibration procedure.
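Two of these checks are simple enough to sketch in plain Python. The helper names are hypothetical. `rms_reprojection_error` compares detected key points with the same points reprojected through the calibrated model (in OpenCV, for example via `cv2.projectPoints`); the exact RMS definition your calibration tool reports may differ slightly. `collinearity_rms` measures how far points that should lie on one straight line (such as one row of checkerboard corners after undistortion) deviate from their total-least-squares line. The epipolar check needs a fundamental matrix from a stereo calibration and is not covered here.

```python
import math


def rms_reprojection_error(observed, projected):
    """RMS distance (pixels) between detected points and reprojected points."""
    if len(observed) != len(projected) or not observed:
        raise ValueError("need two non-empty point lists of equal length")
    sq = sum((ox - px) ** 2 + (oy - py) ** 2
             for (ox, oy), (px, py) in zip(observed, projected))
    return math.sqrt(sq / len(observed))


def collinearity_rms(points):
    """RMS orthogonal distance (pixels) of points from their best-fit line."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least 3 points")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # 2x2 covariance of the point set.
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    # Smallest eigenvalue = mean squared orthogonal distance to the best-fit line.
    half_trace = (sxx + syy) / 2
    lam_min = half_trace - math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    return math.sqrt(max(lam_min, 0.0))  # clamp tiny negative rounding errors


# Made-up points: one detection is off by (3, 4) pixels.
print(rms_reprojection_error([(0, 0), (1, 1)], [(3, 4), (1, 1)]))
# A perfectly straight row of corners versus a bent one.
print(collinearity_rms([(0, 0), (1, 1), (2, 2), (3, 3)]))
print(collinearity_rms([(0, 0), (1, 1), (2, 0)]))
```

In practice you would first undistort the detected corners (for example with `cv2.undistortPoints`, passing `P=cameraMatrix` to stay in pixel units) and then apply `collinearity_rms` to each row and each column of the pattern.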