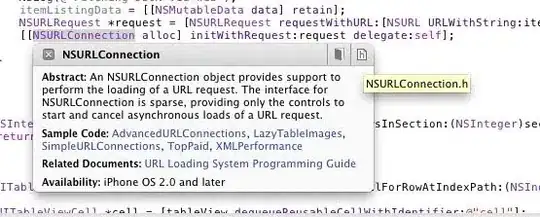

I am using Airflow 2.1.4, and my task randomly receives SIGTERM. When I open the log linked from the email alert (first image), it only shows something like the second image, and the task is marked as failed.

I am sure I set retries to 2 in the DAG's default args, so every task should have retries = 2. Why does the task fail immediately on the first attempt, and why does the log look like this?
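For reference, here is a minimal sketch of the kind of `default_args` described above, assuming a standard Airflow 2.x DAG. The owner, start date, retry delay and email flags are hypothetical values, not my actual config:

```python
from datetime import datetime, timedelta

# Hypothetical default_args: operators created inside a DAG that receives
# this dict take retries=2 unless an operator overrides it explicitly.
default_args = {
    "owner": "airflow",                  # hypothetical owner
    "start_date": datetime(2021, 10, 1), # hypothetical start date
    "retries": 2,                        # each task may be retried twice
    "retry_delay": timedelta(minutes=5), # hypothetical wait between attempts
    "email_on_failure": True,
}

# Usage (requires Airflow, so shown as a comment):
# with DAG("example_dag", default_args=default_args, schedule_interval="@daily") as dag:
#     ...
```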

It happens randomly: sometimes the task works fine, sometimes it does not.

Environment:

- Airflow 2.1.4 (upgraded from 2.1.2)
- RabbitMQ and MySQL
- Workers: 3