Firstly, I am so lost that I don't even know how to phrase or title my question.

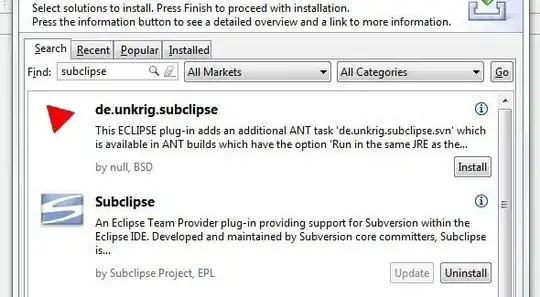

My original dataframe held key-value pairs. I pivoted it with the simple code below, which gave me the dataframe you can see in image 1:

    from pyspark.sql import functions as F
    df = df.groupBy("ID", "SUBID").pivot("SOURCE", fields).agg(F.first("VALUE"))

It is close to what I want, but I think I need to group by again, or merge, or something similar to get my desired result (image 2).

I have tried a few things, but none of them seem to work. The only approach that makes sense to me is either another groupBy or a self-join of some sort.

Since I know every row with the same ID belongs to the same person, the result I would like is something like the below: the same name, the same surname, the same town etc. applied to every row for that ID. Cells that already contain values would keep their current values.

I am looking for any advice, or links to duplicate questions, please.