I have a sequence labeling task.

As input, I have a batch of sequences with shape [batch_size, sequence_length], and each element of a sequence should be assigned a class.

As the loss function for training the neural net, I use cross-entropy.

How should I correctly use it?

My variable target_predictions has shape [batch_size, sequence_length, number_of_classes] and target has shape [batch_size, sequence_length].

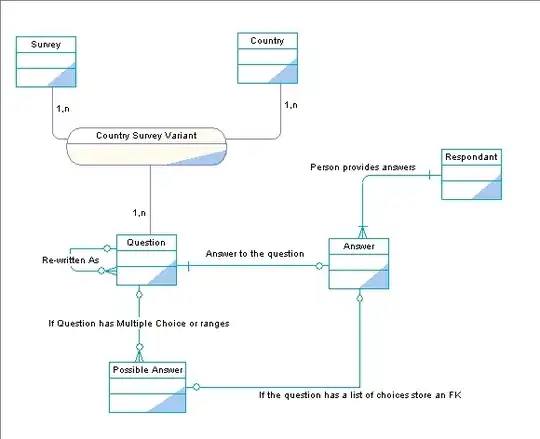

Documentation says:

I know that if I use CrossEntropyLoss(target_predictions.permute(0, 2, 1), target), everything works fine. But I am concerned that torch is interpreting my sequence_length as the d_1 dimension from the screenshot and will treat this as a multidimensional loss, which is not the case.
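To make the concern concrete, here is a minimal sketch with made-up sizes and random tensors (the numbers are assumptions for illustration, not my real data). It compares the permute call with an alternative I considered, flattening the batch and sequence dimensions into one before calling the loss, and checks whether the two give the same value with the default mean reduction:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Made-up sizes, for illustration only
batch_size, sequence_length, number_of_classes = 4, 7, 5

# Random stand-ins for the real model output and labels
target_predictions = torch.randn(batch_size, sequence_length, number_of_classes)
target = torch.randint(0, number_of_classes, (batch_size, sequence_length))

criterion = nn.CrossEntropyLoss()  # default reduction="mean"

# Option 1: move the class dimension to position 1 -> [N, C, d_1]
loss_permute = criterion(target_predictions.permute(0, 2, 1), target)

# Option 2: flatten batch and sequence -> [N * d_1, C] and [N * d_1]
loss_flat = criterion(
    target_predictions.reshape(-1, number_of_classes),
    target.reshape(-1),
)

# True if both ways of calling the loss agree
same = torch.allclose(loss_permute, loss_flat)
print(loss_permute.item(), loss_flat.item(), same)
```

If the two values agree, then treating sequence_length as d_1 would amount to averaging the per-element cross-entropy over every position in the batch, which is what I want, but I would like to confirm that this reading is right.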

How should I correctly do it?