Note: the question is rephrased at the end.

Node.js communicates with other APIs through gRPC.

Each external API has its own dedicated gRPC connection to Node, and every connection has an upper bound on the number of concurrent clients it can serve (e.g. External API 1 has an upper bound of 30 users).

Every request to the Express API may need to communicate with External API 1, External API 2, or External API 3 (from now on EAP1, EAP2, etc.). The Express API also has an upper bound on the number of concurrent clients (e.g. 100 clients) that it can pass on to the EAPs.

So, this is how I am thinking of solving the issue:

A Client makes a new request to the Express API.

A middleware, queueManager, creates a Ticket for the Client (think of it as a Ticket that approves access to the System; it holds basic data about the Client, e.g. name).

The Client gets the Ticket and creates an event listener that listens for an event named after their Ticket ID (when the System is ready to accept a Ticket, it emits the Ticket's ID as an event). The Client then enters a "Lobby", where it simply waits until its Ticket ID is announced.
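A minimal sketch of the Ticket and "Lobby" idea described above, using Node's built-in `EventEmitter`. The names `lobby`, `createTicket`, `waitInLobby` and `announce`, and the ticket fields, are assumptions made for illustration; they are not part of the existing code.

```javascript
const { EventEmitter } = require('events');

// Hypothetical "lobby": one shared emitter; each waiting client listens on its own ticket ID.
const lobby = new EventEmitter();

let nextTicketId = 1;

// Create a ticket holding basic client data plus the EAP it needs (field names are assumptions).
function createTicket(clientName, eap) {
  return { id: `ticket-${nextTicketId++}`, clientName, eap };
}

// The client waits in the lobby until the system announces its ticket ID.
function waitInLobby(ticket, onAccepted) {
  lobby.once(ticket.id, () => onAccepted(ticket));
}

// The system announces a ticket by emitting its ID as the event name.
function announce(ticket) {
  return lobby.emit(ticket.id); // true if a client was listening for this ID
}

// Usage
const accepted = [];
const ticket = createTicket('alice', 'EAP1');
waitInLobby(ticket, (t) => accepted.push(t.id));
announce(ticket);
console.log(accepted); // [ 'ticket-1' ]
```

Inside an Express middleware, the `onAccepted` callback would presumably just call `next()`. The sketch only covers the waiting mechanism; deciding when to call `announce` is the part that needs the queue and the limits.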

My issue is that I can't work out how the System should keep track of the Tickets, or how to build a queue that respects the System's concurrent-client limits.

Before the client is granted access to the System, the System itself should:

- Check if the Express API has reached its upper bound of concurrent clients. If it has, the System should wait until a new Ticket position is available.

- If a position is available, the System should check the Ticket to find out which API it needs to contact. If, for example, it needs to contact EAP1, the System should check how many clients are currently using that gRPC connection. This is already implemented (every External API is wrapped in a class that has all the information needed). If EAP1 has reached its upper bound, Node.js should try again later (but how much later? Should I emit a system event after the System has completed another request to EAP1?).
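One possible answer to the "how much later?" question is to avoid timers and retry only when a position is actually released. The sketch below assumes a hypothetical `EapHandler` class (standing in for the existing per-EAP class) that emits an `available` event on release; the method and event names are illustrative.

```javascript
const { EventEmitter } = require('events');

// Hypothetical wrapper around one EAP's gRPC connection.
class EapHandler extends EventEmitter {
  constructor(name, limit) {
    super();
    this.name = name;
    this.limit = limit; // upper bound of concurrent clients
    this.active = 0;    // clients currently using the connection
  }

  // Reserve a position if one is free; returns false when the bound is reached.
  tryLock() {
    if (this.active >= this.limit) return false;
    this.active += 1;
    return true;
  }

  // Free a position and announce how many positions are now available.
  release() {
    if (this.active === 0) return;
    this.active -= 1;
    this.emit('available', this.limit - this.active);
  }
}

// Run `work` as soon as the EAP has a free position, retrying only after a release.
function whenAvailable(eap, work) {
  if (eap.tryLock()) {
    work();
  } else {
    eap.once('available', () => whenAvailable(eap, work));
  }
}

// Usage
const eap1 = new EapHandler('EAP1', 1);
const log = [];
whenAvailable(eap1, () => log.push('first'));
whenAvailable(eap1, () => log.push('second')); // waits: the limit is 1
eap1.release(); // the first request finished, so 'second' now runs
console.log(log); // [ 'first', 'second' ]
```

With several waiters, every waiter wakes up on each release, one of them gets the position and the others register again, so this is simple rather than efficient.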

I'm aware of Bull, but I am not really sure if it fits my requirements. What I really need to do is to have the Clients in a queue, and:

- Check if Express API has reached its upper-bound of concurrent users

- If a position is free, pop() a Ticket from the Tickets array

- Check if EAPx has reached its upper bound of concurrent users

- If true, try another ticket (if available) that needs to communicate with a different EAP

- If false, grant access
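The selection steps in the list above could be sketched as a single function. The shapes used here (`{ id, eap }` for a ticket, `{ active, limit }` for a limit counter) and the name `takeNextTicket` are assumptions. Note that the sketch scans from the front of the array so that older tickets are tried first, whereas `pop()` would take the most recently added ticket.

```javascript
// Hypothetical shapes: a ticket is { id, eap }, a limiter is { active, limit }.
function hasRoom(limiter) {
  return limiter.active < limiter.limit;
}

// Remove and return the first ticket whose EAP has room, or null if none can run now.
function takeNextTicket(queue, expressLimiter, eapLimiters) {
  if (!hasRoom(expressLimiter)) return null; // Express API is full: everyone waits

  for (let i = 0; i < queue.length; i++) {
    const eapLimiter = eapLimiters[queue[i].eap];
    if (hasRoom(eapLimiter)) {
      const [ticket] = queue.splice(i, 1); // take it out, keep the others in order
      expressLimiter.active += 1;
      eapLimiter.active += 1;
      return ticket;
    }
    // This EAP is full: leave the ticket in place and try the next one.
  }
  return null;
}

// Usage
const expressApi = { active: 0, limit: 100 };
const eaps = { EAP1: { active: 30, limit: 30 }, EAP2: { active: 0, limit: 10 } };
const queue = [{ id: 'a', eap: 'EAP1' }, { id: 'b', eap: 'EAP2' }];

const granted = takeNextTicket(queue, expressApi, eaps);
console.log(granted.id);   // b
console.log(queue.length); // 1
```

Ticket `a` stays at the front of the queue, so it is the first one tried again once EAP1 frees a position. Whoever finishes a request has to decrement both counters.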

Edit: One more idea could be to have two kinds of Bull queues: one for the Express API (where the "concurrency" setting could be set to the upper bound of the Express API) and one for each EAP. Each EAP queue would have its own worker (in order to set the upper-bound limits).

REPHRASED

In order to be more descriptive about the issue, I'll try to rephrase the needs.

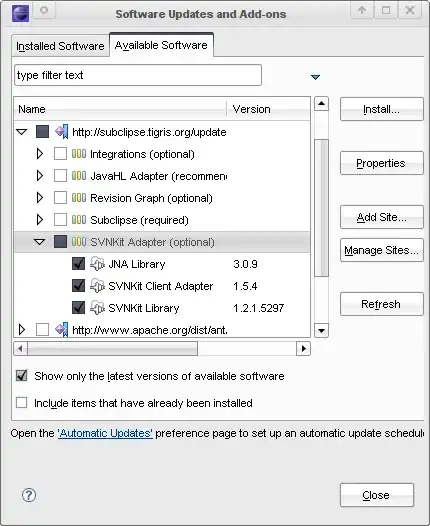

A simple view of the System could be:

I have used Clem's suggestion (RabbitMQ), but again, I can't achieve concurrency with limits (upper-bounds).

So,

- Client asks for a Ticket from the TicketHandler. In order for the TicketHandler to construct a new Ticket, the client provides, along with other information, a callback:

    TicketHandler.getTicket(variousInfo, function () {
      next();
    });

The callback will be used by the system to allow a Client to connect with an EAP.

TicketHandler gets the ticket:

i) Adds it to the queue

ii) When the ticket can be accessed (the upper bound is not reached), it asks the appropriate EAP Handler whether the client can make use of the gRPC connection.

If yes, it asks the EAP Handler to lock a position and then calls the ticket's callback (from Step 1).

If no, TicketHandler checks the next available Ticket that needs to contact a different EAP. This goes on until the EAP Handler that first told TicketHandler "No position is available" sends it a message saying "Now there are X available positions" (or "1 available position"). TicketHandler should then go back to the ticket that couldn't access EAPx before and ask EAPx again whether it can access the gRPC connection.
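The flow described in these two steps could be sketched in-process, without RabbitMQ or Bull, roughly as follows. This is only a sketch under assumptions: the `EapHandler` class, its `lock`/`unlock` methods, the `available` event and the `release` argument passed to the ticket callback are all hypothetical and not taken from the existing code.

```javascript
const { EventEmitter } = require('events');

// Hypothetical EAP handler: locks and unlocks positions on one gRPC connection.
class EapHandler extends EventEmitter {
  constructor(limit) {
    super();
    this.limit = limit;
    this.active = 0;
  }
  lock() {
    if (this.active >= this.limit) return false;
    this.active += 1;
    return true;
  }
  unlock() {
    this.active -= 1;
    this.emit('available', this.limit - this.active); // "now there are X available positions"
  }
}

class TicketHandler {
  constructor(limit, eapHandlers) {
    this.limit = limit; // upper bound of the Express API
    this.active = 0;
    this.queue = [];    // waiting tickets, oldest first
    this.eapHandlers = eapHandlers;
    // Re-scan the queue whenever any EAP reports free positions.
    for (const handler of Object.values(eapHandlers)) {
      handler.on('available', () => this.drain());
    }
  }

  // Step 1: the client asks for a ticket and provides a callback.
  getTicket(info, callback) {
    this.queue.push({ info, callback });
    this.drain();
  }

  // Step 2: grant every queued ticket that fits under both upper bounds.
  drain() {
    let i = 0;
    while (i < this.queue.length && this.active < this.limit) {
      const ticket = this.queue[i];
      if (this.eapHandlers[ticket.info.eap].lock()) {
        this.queue.splice(i, 1);
        this.active += 1;
        ticket.callback(() => this.done(ticket)); // the client calls this when finished
      } else {
        i += 1; // EAP full: skip this ticket and try one that needs a different EAP
      }
    }
  }

  done(ticket) {
    this.active -= 1;
    this.eapHandlers[ticket.info.eap].unlock(); // emits 'available', which triggers drain()
  }
}

// Usage
const eap1 = new EapHandler(1);
const eap2 = new EapHandler(1);
const ticketHandler = new TicketHandler(100, { EAP1: eap1, EAP2: eap2 });

const order = [];
let releaseA;
ticketHandler.getTicket({ name: 'a', eap: 'EAP1' }, (release) => { order.push('a'); releaseA = release; });
ticketHandler.getTicket({ name: 'b', eap: 'EAP1' }, () => order.push('b')); // EAP1 full: waits
ticketHandler.getTicket({ name: 'c', eap: 'EAP2' }, () => order.push('c')); // different EAP: runs
releaseA(); // EAP1 reports a free position, so 'b' is granted
console.log(order); // [ 'a', 'c', 'b' ]
```

In an Express middleware the ticket callback would presumably call `next()` and register `release` on the response's `finish` event, so that positions are freed when the request completes. The sketch lives in a single process; if the API runs on several Node processes, the counters would have to move to a shared store.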