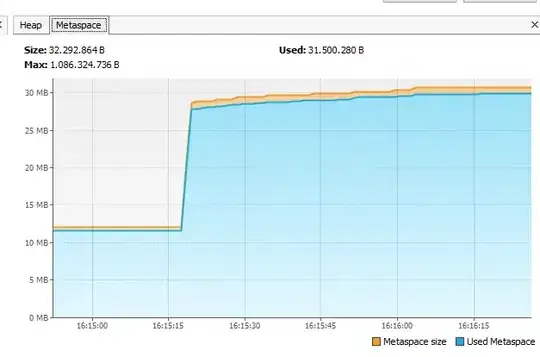

I have trained a tensorflow.keras model over the night and was suprised about the training process (please see the attached picture). Can anyone tell me, what can produce such an effect during training? I have trained with mse (right) and one other loss displayed (binary crossentropy). I have trained an autoencoder with 'normal' samples. The validation samples are 'anomaly' samples.

If you need more information, please let me know.

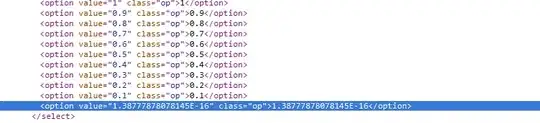

Edit: I might found the reason, but I am not sure: I have features as input-data, which do not have values strictly in [0,1], actually I have nearly all values in [0,1] but a few values a little bit bigger than 1. As I am training with the MSE, I thought this should not be a problem, but as reference I also use the binary crossentropy loss (needs values in [0,1]). This might cause some iritation to the training. I am using:

loss = tensorflow.keras.losses.MeanSquaredError(name='MeanSquaredError')

autoencoder.compile(optimizer=tensorflow.keras.optimizers.Adam(lr=learning_rate), loss=loss, metrics=[tensorflow.keras.metrics.BinaryCrossentropy()])

and livelossplot:

callbacks=[PlotLossesKeras(outputs=[MatplotlibPlot(figpath=save_string_plot)]),TensorBoard(log_dir="autoencoder\\" + string_name_model)],

At the moment I am retraining the model with feature values strictly in [0,1].

--> The retraining got to epoch 175 and then crashed, so i think this was not the solution (adapt feature strictly into [0,1]). Let us try the second possible solution (gradient clipping). Stay tuned. :)

Edit: One other solution might be gradient clipping, see:

--> Also this training did not work out. Loss explodes also at 175 epochs.

New pictures with the two possible solutions, which did not work out (but both exploded nearly at the same position):

Edit: I also tried batch normalization to avoid the loss explosion, but also this try did not work out.