I have an 8-node Spark standalone cluster with a total of 880 GB of RAM and 224 cores.

I can't explain why the Shuffle Read Blocked Time is so long: about 20 minutes per task. Any idea why? What could be the bottleneck in such a case?

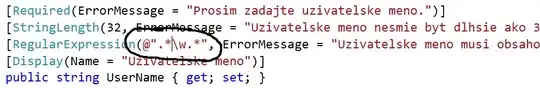

For more context, here are the details for the stage:

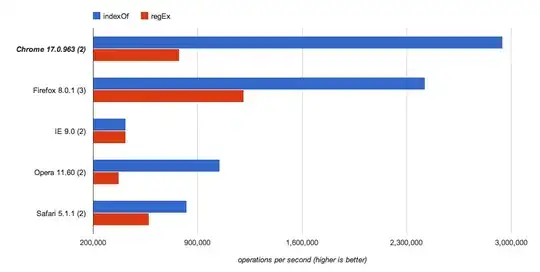

Task metrics from the Spark UI:

Aggregated metrics per executor:

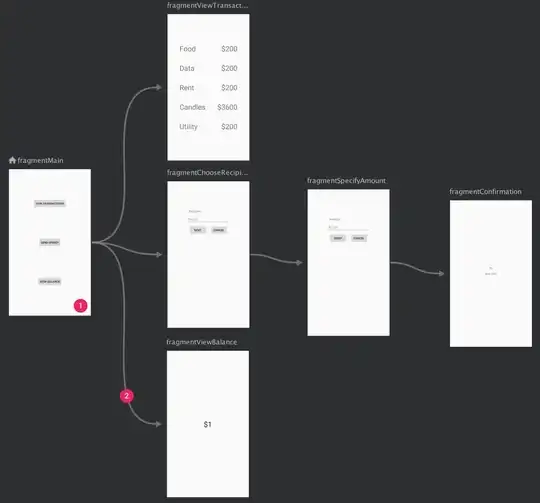

The full DAG of the stage:

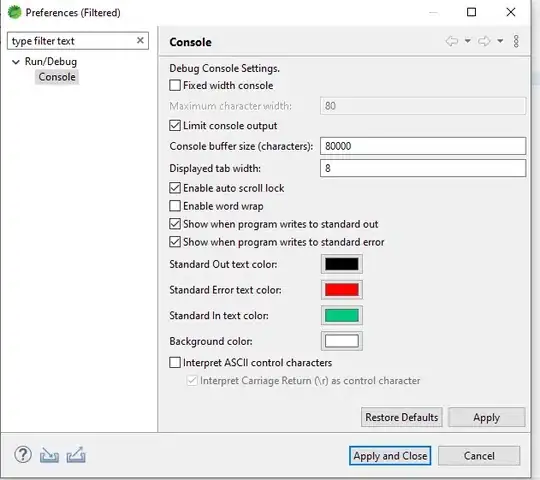

The Executors tab:

The list of stages:

Thank you!