A couple of hands-on POCs with Quarkus, covering RESTEasy Reactive, Kafka Streams, Kafka producers and more, were done to evaluate its performance.

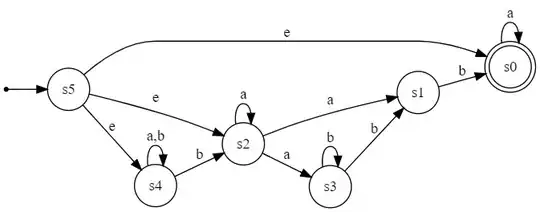

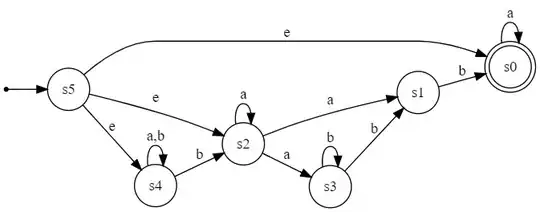

There are many differences, so let us start with the most fundamental one. Quarkus is not merely a framework; it is a platform for solving problems in a reactive fashion. Let us look at a trusted public benchmark site, TechEmpower (https://www.techempower.com/benchmarks/), and compare a reactive Spring-based JDBC application with a reactive Quarkus-based application. Both use a PostgreSQL database.

Note: the higher the number, the better.

There is a 10x difference even though both applications are reactive.

Why? Let us dive into the details.

As I said, Quarkus is not just a framework. To deliver performance and a memory footprint comparable to Go, Quarkus vertically integrates the reactive clients for popular databases with the Vert.x event loop (Vert.x is what Quarkus uses underneath).

Basically, Quarkus redefines what async means by not relying on any other hidden thread pool. Everything integrates vertically with the Vert.x event loop.

Frameworks and libraries that simply hand the request over to another thread pool will not be able to achieve similar performance and memory utilization.
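To make the difference concrete, here is a minimal sketch in plain Java. It assumes a single-thread `ExecutorService` as a stand-in for one Vert.x event-loop thread and a fixed pool as the "other thread pool"; it is not Quarkus or Vert.x code, and the class and method names are hypothetical. In the first pipeline every stage stays on the loop thread. In the second, the first stage is handed over to a worker pool and the result then hops back to the loop, so two threads take part in serving one request.

```java
import java.util.Set;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class EventLoopSketch {

    // Fixed pool whose threads are named prefix-1, prefix-2, ...
    static ExecutorService namedPool(String prefix, int threads) {
        AtomicInteger counter = new AtomicInteger();
        return Executors.newFixedThreadPool(threads, r -> {
            Thread t = new Thread(r, prefix + "-" + counter.incrementAndGet());
            t.setDaemon(true);
            return t;
        });
    }

    // Records which thread ran the current stage, then passes the value through.
    static <T> T record(Set<String> threads, T value) {
        threads.add(Thread.currentThread().getName());
        return value;
    }

    // Every stage runs on the single "event-loop" thread: no handoff.
    static Set<String> stayOnLoop() {
        ExecutorService loop = namedPool("event-loop", 1);
        Set<String> threads = ConcurrentHashMap.newKeySet();
        try {
            CompletableFuture
                .supplyAsync(() -> record(threads, "query-result"), loop)
                .thenApplyAsync(r -> record(threads, r.toUpperCase()), loop)
                .join();
        } finally {
            loop.shutdown();
        }
        return threads;
    }

    // The first stage is handed over to a separate worker pool, then the
    // result hops back to the loop: two threads serve one request.
    static Set<String> offloadToWorkerPool() {
        ExecutorService loop = namedPool("event-loop", 1);
        ExecutorService workers = namedPool("worker", 4);
        Set<String> threads = ConcurrentHashMap.newKeySet();
        try {
            CompletableFuture
                .supplyAsync(() -> record(threads, "query-result"), workers)
                .thenApplyAsync(r -> record(threads, r.toUpperCase()), loop)
                .join();
        } finally {
            loop.shutdown();
            workers.shutdown();
        }
        return threads;
    }

    public static void main(String[] args) {
        System.out.println("stay on loop: " + stayOnLoop());
        System.out.println("offload:      " + offloadToWorkerPool());
    }
}
```

Keeping everything on the loop only works if every stage is non-blocking, which is why the approach depends on reactive database drivers rather than on wrapping blocking calls in a worker pool.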

Remember that in Java it is important to avoid extra thread pools and threads: each platform thread reserves its own stack (typically 1 MB by default on 64-bit JVMs, configurable with `-Xss`), and context switching between threads adds performance overhead.

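Also, remember that distributed computing frameworks such as Apache Spark and Apache Flink size their parallelism relative to the number of CPU cores allotted to the application instead of spawning large numbers of threads (Spark's tuning guide, for example, recommends 2-3 tasks per CPU core). Java's Fork/Join framework follows the same idea: a `ForkJoinPool` created with the default constructor has a parallelism equal to the number of available processors.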
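A minimal sketch of that cores-to-threads idea with the JDK's Fork/Join framework: a range sum is split into many small subtasks, but they are executed by a pool whose target parallelism equals the core count, rather than by one thread per subtask. The class name and the `THRESHOLD` value are hypothetical choices for illustration.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class CoreSizedPool {

    // Sums the half-open range [from, to) by recursive splitting.
    static final class RangeSum extends RecursiveTask<Long> {
        private static final int THRESHOLD = 10_000; // hypothetical cutoff for sequential work
        private final long from, to;

        RangeSum(long from, long to) { this.from = from; this.to = to; }

        @Override
        protected Long compute() {
            if (to - from <= THRESHOLD) {
                long sum = 0;
                for (long i = from; i < to; i++) sum += i;
                return sum;
            }
            long mid = (from + to) >>> 1;
            RangeSum left = new RangeSum(from, mid);
            left.fork();                                   // queue left half; idle workers may steal it
            long right = new RangeSum(mid, to).compute();  // process right half on this thread
            return right + left.join();
        }
    }

    static long parallelSum(long n) {
        ForkJoinPool pool = new ForkJoinPool(); // parallelism = availableProcessors()
        try {
            return pool.invoke(new RangeSum(0, n));
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        ForkJoinPool pool = new ForkJoinPool();
        System.out.println("cores = " + Runtime.getRuntime().availableProcessors()
                + ", pool parallelism = " + pool.getParallelism());
        pool.shutdown();
        System.out.println(parallelSum(1_000_000)); // 499999500000
    }
}
```

Work stealing keeps the small set of workers busy, so adding more subtasks does not mean adding more threads.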

This means that instead of using tons of threads, Quarkus achieves the same performance by sticking to the fundamental ratio of cores to threads.

The second biggest difference is that Quarkus processes annotations at build time instead of relying on runtime reflection the way Spring does. Reflection has a bad reputation for performance, especially in native mode with GraalVM.

Please refer to https://dzone.com/articles/think-twice-before-using-reflection to understand why.
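The contrast can be sketched in plain Java. The `@Route` annotation and `GreetingResource` class below are hypothetical and are not Quarkus or Spring APIs. `dispatchReflectively` scans methods and invokes them through reflection at runtime, as a reflection-based framework would. `generatedRoutes` shows the kind of plain code a build-time processor could emit once the scan has already happened: ordinary method references with no reflection left at runtime. Quarkus's real build-time processing is far more involved; this only illustrates the principle.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

class ReflectionVsGenerated {

    // Runtime approach: scan for annotated methods and invoke them reflectively.
    static String dispatchReflectively(Object resource, String path, String arg) {
        for (Method m : resource.getClass().getDeclaredMethods()) {
            Route route = m.getAnnotation(Route.class);
            if (route != null && route.value().equals(path)) {
                try {
                    return (String) m.invoke(resource, arg);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException(e);
                }
            }
        }
        throw new IllegalArgumentException("No route for " + path);
    }

    // Build-time approach: what a code generator might emit after scanning.
    // Every call target is a direct method reference, visible to static analysis.
    static Map<String, Function<String, String>> generatedRoutes(GreetingResource resource) {
        Map<String, Function<String, String>> routes = new HashMap<>();
        routes.put("/hello", resource::hello);
        return routes;
    }

    public static void main(String[] args) {
        GreetingResource resource = new GreetingResource();
        System.out.println(dispatchReflectively(resource, "/hello", "Quarkus")); // Hello Quarkus
        System.out.println(generatedRoutes(resource).get("/hello").apply("Quarkus")); // Hello Quarkus
    }
}

// Hypothetical resource class used by both approaches.
class GreetingResource {
    @Route("/hello")
    public String hello(String name) { return "Hello " + name; }
}

// Hypothetical annotation, for illustration only.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Route {
    String value();
}
```

Both calls return the same result; the difference is that the generated version does no scanning or reflective invocation at runtime, which also spares a GraalVM native build from needing reflection metadata for it.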

The third fundamental difference is the much faster startup time and lower memory overhead of Quarkus in native mode compared to Spring in native mode.

Why is that? What is happening underneath?

The central idea behind Quarkus is to do at build-time what traditional frameworks do at runtime: configuration parsing, classpath scanning, feature toggle based on classloading, and so on.

A Quarkus native application contains only the classes actually used at runtime, out of the many classes pulled in by the dependencies in your pom file. How, and why?

Process in Quarkus:

In traditional frameworks, all the classes required to perform the initial application deployment hang around for the application’s life, even though they are only used once. With Quarkus, they are not even loaded into the production JVM!

During build-time processing, Quarkus prepares the initialization of all the components used by your application.

Consequence:

This results in lower memory usage and faster startup, because all metadata processing has already been done at build time.
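As a rough sketch of "do at build time what traditional frameworks do at runtime", the hypothetical build step below parses configuration text once and emits Java source with the values baked in as constants, so the running application no longer parses anything at startup. The class name, the `app.*` keys and the generated `GeneratedConfig` class are illustrative assumptions, not Quarkus's actual configuration mechanism; values are not escaped and keys must map to valid identifiers.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.TreeMap;

class BuildTimeConfigSketch {

    // "Build time": parse the properties once and generate source code
    // containing the results as constants (no escaping; illustration only).
    static String generateConfigClass(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        StringBuilder src = new StringBuilder("final class GeneratedConfig {\n");
        // TreeMap sorts the keys so the generated fields are deterministic.
        new TreeMap<>(props).forEach((key, value) -> src
                .append("    static final String ")
                .append(key.toString().toUpperCase().replace('.', '_'))
                .append(" = \"").append(value).append("\";\n"));
        return src.append("}\n").toString();
    }

    public static void main(String[] args) {
        System.out.print(generateConfigClass("app.name=demo\napp.feature.enabled=true\n"));
    }
}
```

Running `main` prints a small `GeneratedConfig` class with `APP_FEATURE_ENABLED` and `APP_NAME` constants. Compiling that class into the application means the parsing cost, and the parser itself, never reach the production runtime.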

Refer to https://quarkus.io/vision/container-first for more in-depth information on why Quarkus native builds are ahead of Spring Native.

Kudos to Quarkus for redefining the future of Java.

So yes, Quarkus is clearly the way forward.

Still not convinced about why to use Quarkus? Any questions? Comment here! If you want a detailed discussion, head over to the Zulip channel mentioned below.

Kudos to Clement Escoffier (https://github.com/cescoffier) and Georgios Andrianakis (https://github.com/geoand) for clearly explaining the fundamentals of Quarkus.

Also, head over to https://quarkusio.zulipchat.com/login/ if you are looking to learn Quarkus and fundamentally change the operating cost and performance of your existing and future applications.

References for Quarkus:

https://www.youtube.com/channel/UCaW8QG_QoIk_FnjLgr5eOqg

https://developers.redhat.com/courses/quarkus (these courses are free and do not even require registration)

References for POCs done on quarkus:

https://github.com/JayGhiya/QuarkusExperiments/tree/initial_version_v1

Insights from the POCs: a 5x-8x lower memory footprint and higher performance compared with their traditional Java counterparts, with 30%-50% less code required.