--UPDATE-- Issue sorted out by following the link in the comments to another post.

I'm new to ADF (Azure Data Factory), and even though I have created some simple pipelines before, this one is proving very tricky.

I have a file share with files and pictures from jobs, named with the convention: [A or B for before/after]-[Site in numbers]-[Work number].[jpg or jpeg]

I want to select only the pictures from the file share and copy them to my blob storage, creating folders in the blob dynamically. For example, the pipeline would take the [Work number] from each picture name, create a folder with that number, and save every picture with the same work number in that folder.
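To make the mapping concrete, here is a minimal Python sketch of the logic I am after. It is not ADF code: the function name `destination_path` and the sample file names are made up for illustration, and it assumes that "pictures" means files ending in .jpg or .jpeg.

```python
from typing import Optional

# Assumption: pictures are the files ending in .jpg or .jpeg (case-insensitive).
PICTURE_EXTENSIONS = {"jpg", "jpeg"}


def destination_path(file_name: str) -> Optional[str]:
    """Return '<work number>/<file name>' for a picture, or None for other files."""
    stem, dot, extension = file_name.rpartition(".")
    if not dot or extension.lower() not in PICTURE_EXTENSIONS:
        return None  # not a picture, so it should be skipped
    parts = stem.split("-")
    if len(parts) != 3:
        return None  # does not follow [A or B]-[site]-[work number]
    work_number = parts[2]
    return f"{work_number}/{file_name}"


# Hypothetical file names, only to show the intended result.
print(destination_path("A-123-98765.jpeg"))
print(destination_path("notes.txt"))
```

The first call should yield a path inside a folder named after the work number, and the second should be rejected because the file is not a picture.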

I have successfully connected to my file share and blob storage, created my datasets as binary, and moved pictures across by typing the path and file name into the copy activity, so the connectivity is there.

The issue is that there are roughly 1 million pictures and I want to automate this process with wildcards, but I'm having a hard time with the dynamic expressions in ADF. Any help with extracting and manipulating the name of each picture to achieve something like this would be appreciated!

--UPDATE WITH IMAGES AND CLARIFICATION--

I'm trying to dynamically create and fill folders with a pipeline. My dataset is a list of pictures named with the convention:

[A or B for before/after]-[Site in numbers]-[Work number].[jpeg]

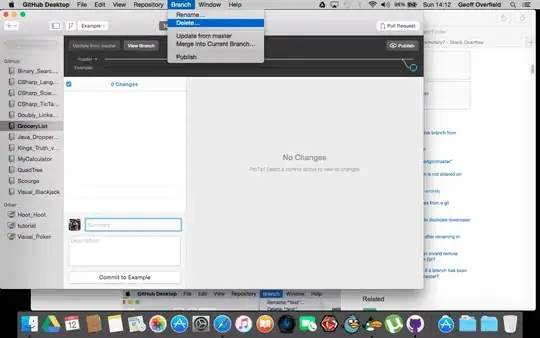

I created a working pipeline like this, starting with a Get Metadata activity that reads the metadata of the source folder.

I then use a ForEach activity to iterate over the file names returned in the childItems output of the Get Metadata activity.

I created two variables in the pipeline to set the folder name and to change the order of the information in the file name. In these expressions, item().name is the name of the current item in the ForEach iteration.

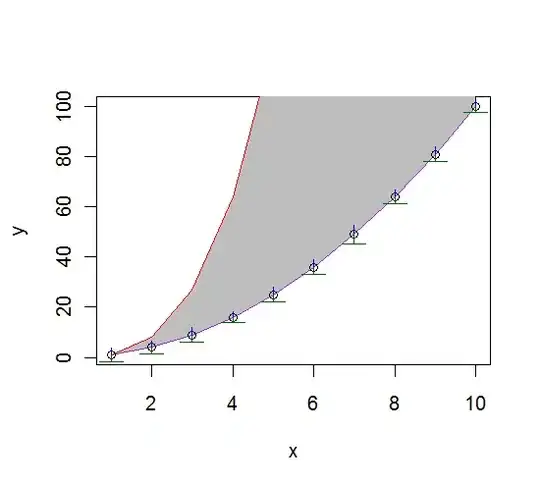

Up to this point everything works. The issue is that the copy activity overwrites every newly created folder and file, until I'm left with a single folder and a single file.

As seen in the picture below, the data is being copied successfully, just overwritten. I will have 4-8 pictures per work number, so ideally there should be several folders, one per work number, each containing the pictures for that work number. Any help on how to avoid this overwriting issue is greatly appreciated.
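For clarity, the result I expect looks like the grouping produced by the small Python sketch below. It is not the pipeline itself: `group_by_work_number` is a hypothetical helper and the file names are invented. The point is that each picture is added to its work-number folder rather than replacing what is already there.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def group_by_work_number(file_names: Iterable[str]) -> Dict[str, List[str]]:
    """Group picture names by work number (the third dash-separated field)."""
    folders: Dict[str, List[str]] = defaultdict(list)
    for name in file_names:
        stem = name.rsplit(".", 1)[0]      # drop the extension
        work_number = stem.split("-")[2]   # [A or B]-[site]-[work number]
        folders[work_number].append(name)  # append, never replace
    return dict(folders)


# Hypothetical sample: two pictures for one work number, one for another.
sample = ["A-10-555.jpeg", "B-10-555.jpeg", "A-10-777.jpeg"]
for folder, pictures in group_by_work_number(sample).items():
    print(folder, pictures)
```

With this sample I would expect two folders, one holding both pictures for the first work number and one holding the single picture for the second.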