I want to measure the deviation of the angle of an ArUco marker to a plane defined by a second reference ArUco marker.

A reference ArUco marker (M1) is fixed against a flat wall and a second ArUco marker (M2) is a few centimeters in front of that same wall. I want to know when the marker M2 is deviating more than 10 degrees from the xy plane of M1.

Here is an illustration of the configuration:

To do so, I thaught I should calculate the relative rotation between the pose rvec as explained in this post:

that was proposing the following code:

def inversePerspective(rvec, tvec):

""" Applies perspective transform for given rvec and tvec. """

R, _ = cv2.Rodrigues(rvec)

R = np.matrix(R).T

invTvec = np.dot(R, np.matrix(-tvec))

invRvec, _ = cv2.Rodrigues(R)

return invRvec, invTvec

def relativePosition(rvec1, tvec1, rvec2, tvec2):

""" Get relative position for rvec2 & tvec2. Compose the returned rvec & tvec to use composeRT

with rvec2 & tvec2 """

rvec1, tvec1 = rvec1.reshape((3, 1)), tvec1.reshape((3, 1))

rvec2, tvec2 = rvec2.reshape((3, 1)), tvec2.reshape((3, 1))

# Inverse the second marker, the right one in the image

invRvec, invTvec = inversePerspective(rvec2, tvec2)

info = cv2.composeRT(rvec1, tvec1, invRvec, invTvec)

composedRvec, composedTvec = info[0], info[1]

composedRvec = composedRvec.reshape((3, 1))

composedTvec = composedTvec.reshape((3, 1))

return composedRvec, composedTvec

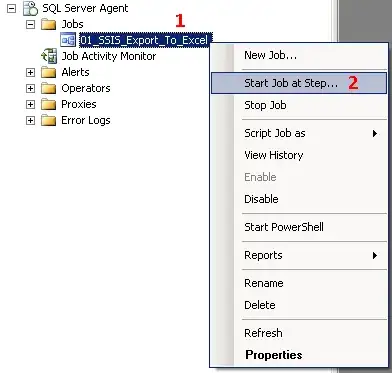

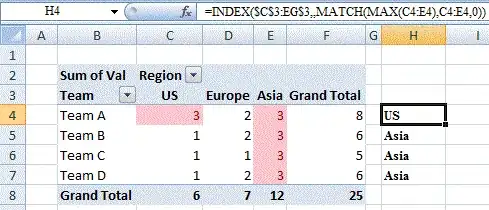

Computing the composedRvec, I get the following results :

With both ArUco markers in the same plane (composedRvec values in the top right corner) :

With both ArUco markers at a 90 degrees angle:

I do not really understand the results:

Ok for with the 0,0,0 composedRvec when markers in the same plane.

But why 0,1.78,0 in the second case?

What general condition should I have on the resulting composedRvec to tell me when the angle between the 2 markers is above 10 degrees?

Am I even following the right strategy with the composedRvec?

**** EDIT ***

Results of the 2 markers in the same xy plane with a 40° angle:

||composedRvec||= sqrt(0.619^2+0.529^2+0.711^2)=1.08 rad = 61.87°

**** EDIT 2 ***

By retaking measurements in the 40° angle configuration, I found out that the values are quite fluctuating even without modifying the set up or lightening. From time to time, I fall on the correct values:

||composedRvec||= sqrt(0.019^2+0.012^2+0.74^2)=0.74 rad = 42.4° which is quite accurate.

**** EDIT 3 ***

So here is my final code based on @Gilles-Philippe Paillé's edited answer:

import numpy as np

import cv2

import cv2.aruco as aruco

cap = cv2.VideoCapture(0, cv2.CAP_DSHOW) # Get the camera source

img_path='D:/your_path/'

# FILE_STORAGE_READ

cv_file = cv2.FileStorage(img_path+"camera.yml", cv2.FILE_STORAGE_READ)

matrix_coefficients = cv_file.getNode("K").mat()

distortion_coefficients = cv_file.getNode("D").mat()

nb_markers=2

def track(matrix_coefficients, distortion_coefficients):

while True:

ret, frame = cap.read()

# operations on the frame come here

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) # Change grayscale

aruco_dict = aruco.custom_dictionary(nb_markers, 5)

parameters = aruco.DetectorParameters_create() # Marker detection parameters

# lists of ids and the corners beloning to each id

corners, ids, rejected_img_points = aruco.detectMarkers(gray,

aruco_dict,parameters=parameters,cameraMatrix=matrix_coefficients,distCoeff=distortion_coefficients)

# store rz1 and rz2

R_list=[]

if np.all(ids is not None): # If there are markers found by detector

for i in range(0, len(ids)): # Iterate in markers

# Estimate pose of each marker and return the values rvec and tvec---different from camera coefficients

rvec, tvec, markerPoints = aruco.estimatePoseSingleMarkers(corners[i], 0.02, matrix_coefficients,

distortion_coefficients)

(rvec - tvec).any() # get rid of that nasty numpy value array error

aruco.drawDetectedMarkers(frame, corners) # Draw A square around the markers

aruco.drawAxis(frame, matrix_coefficients, distortion_coefficients, rvec, tvec, 0.01) # Draw Axis

R, _ = cv2.Rodrigues(rvec)

# convert (np.matrix(R).T) matrix to array using np.squeeze(np.asarray()) to get rid off the ValueError: shapes (1,3) and (1,3) not aligned

R = np.squeeze(np.asarray(np.matrix(R).T))

R_list.append(R[2])

# Display the resulting frame

if len(R_list) == 2:

print('##############')

angle_radians = np.arccos(np.dot(R_list[0], R_list[1]))

angle_degrees=angle_radians*180/np.pi

print(angle_degrees)

cv2.imshow('frame', frame)

# Wait 3 milisecoonds for an interaction. Check the key and do the corresponding job.

key = cv2.waitKey(3000) & 0xFF

if key == ord('q'):

break

track(matrix_coefficients, distortion_coefficients)

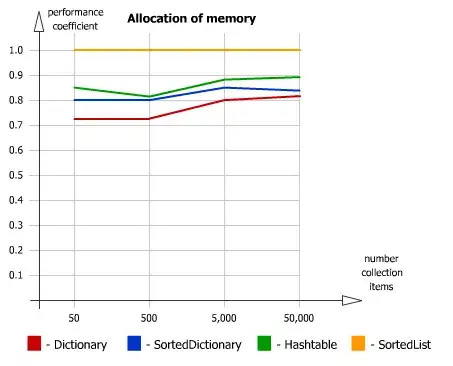

And here are some results:

red -> real angle, white -> measured angle

This is out of the scope of the question but I find that the pose estimation is quite fluctuating. For example when the 2 markers are against the wall, the values easely jump from 9° to 37° without touching the system.